This work is distributed under the Creative Commons Attribution 4.0 License.

Improving short-term sea ice concentration forecasts using deep learning

Thomas Lavergne

Jozef Rusin

Arne Melsom

Julien Brajard

Are Frode Kvanum

Atle Macdonald Sørensen

Laurent Bertino

Malte Müller

Reliable short-term sea ice forecasts are needed to support maritime operations in polar regions. While sea ice forecasts produced by physically based models still have limited accuracy, statistical post-processing techniques can be applied to reduce forecast errors. In this study, post-processing methods based on supervised machine learning have been developed for improving the skill of sea ice concentration forecasts from the TOPAZ4 prediction system for lead times from 1 to 10 d. The deep learning models use predictors from TOPAZ4 sea ice forecasts, weather forecasts, and sea ice concentration observations. Predicting the sea ice concentration for the next 10 d takes about 4 min (including data preparation), which is reasonable in an operational context. On average, the forecasts from the deep learning models have a root mean square error 41 % lower than TOPAZ4 forecasts and 29 % lower than forecasts based on persistence of sea ice concentration observations. They also significantly improve the forecasts for the location of the ice edges, with similar improvements as for the root mean square error. Furthermore, the impact of different types of predictors (observations, sea ice, and weather forecasts) on the predictions has been evaluated. Sea ice observations are the most important type of predictors, and the weather forecasts have a much stronger impact on the predictions than sea ice forecasts.

Due to increasing maritime traffic in the Arctic (Gunnarsson, 2021; Müller et al., 2023), there is a growing demand for reliable short-term sea ice forecasts that can support marine operations (Wagner et al., 2020). While short-term sea ice forecasts are operationally produced by several institutions using dynamical models (e.g., Sakov et al., 2012; Smith et al., 2016; Barton et al., 2021; Williams et al., 2021; Ponsoni et al., 2023; Röhrs et al., 2023), the usefulness of these forecasts in Arctic navigation is often limited by their inaccuracies (Veland et al., 2021). Melsom et al. (2019) reported that the location of the ice edge is predicted with a mean accuracy of 39 km in 5 d forecasts from the TOPAZ4 prediction system (Sakov et al., 2012), with larger errors during the summer when most of the maritime traffic occurs (Müller et al., 2023). Furthermore, the sea ice concentration (SIC) forecasts from the regional model Barents-2.5km v2.0 are, in most cases, not better than persistence of the SIC observations for short lead times (Röhrs et al., 2023).

It is common practice to post-process weather forecasts produced by dynamical (physically based) models in order to improve their skill. Statistical correction techniques have been applied to atmospheric forecasts at timescales ranging from hours to seasons (e.g., Wang et al., 2019; Vannitsem et al., 2021; Frnda et al., 2022; Roberts et al., 2023), particularly for variables essential to end users such as temperature, wind, and precipitation. In sea ice forecasting, most post-processing methods have been developed for subseasonal to seasonal timescales (e.g., Zhao et al., 2020; Director et al., 2021; Dirkson et al., 2019, 2022), but short-term sea ice forecasts produced by dynamical models are usually not post-processed despite their potential interest for end users (Wagner et al., 2020). Nevertheless, Palerme and Müller (2021) showed that the errors in short-term sea ice drift forecasts (up to 10 d) from the TOPAZ4 prediction system (Sakov et al., 2012) can be significantly reduced using random forest models (by 8 % and 7 % for the direction and speed of sea ice drift, respectively). These post-processed sea ice drift forecasts have been distributed through the IcySea commercial application from 2020 to 2024 (https://driftnoise.com/icysea.html (last access: 25 April 2024); von Schuckmann et al., 2021) and can be considered an exception in operational short-term sea ice forecasting.

Another approach consists of developing statistical sea ice forecasts without using dynamical sea ice model outputs. This has been used for sea ice forecasting at different timescales (e.g., Kim et al., 2020; Fritzner et al., 2020; Liu et al., 2021; Andersson et al., 2021; Grigoryev et al., 2022; Ren et al., 2022), with the advantage of greatly reducing the computational cost compared to dynamical models. Andersson et al. (2021) developed a deep learning seasonal forecasting system (IceNet) that predicts the probability that the SIC exceeds 15 %. IceNet significantly outperforms the European Centre for Medium-Range Weather Forecasts (ECMWF) SEAS5 dynamical seasonal prediction system (Johnson et al., 2019) for lead times from 2 to 6 months, and it runs over 2000 times faster on a laptop than SEAS5 on a supercomputer. While many studies have investigated such approaches for sea ice forecasting, most of them did not focus on operational short-term forecasting. Grigoryev et al. (2022) developed short-term (up to 10 d) data-driven SIC forecasts for several Arctic seas in an operational context, taking into account the real-time availability of data. Their forecasts, based on U-Net convolutional neural networks (Ronneberger et al., 2015) with predictors from sea ice observations and weather forecasts, significantly outperformed persistence and linear trend forecasts.

Most of the short-term sea ice prediction systems based on machine learning do not use predictors from dynamical sea ice models (Fritzner et al., 2020; Liu et al., 2021; Grigoryev et al., 2022; Ren et al., 2022; Keller et al., 2023; Kvanum et al., 2024), and it is currently unclear whether adding such predictors would significantly improve forecast accuracy. This study aims to assess the impact of using predictors from dynamical sea ice models when developing machine learning SIC forecasts, as well as the impact of post-processing the SIC forecasts from a dynamical sea ice model for lead times from 1 to 10 d. The post-processing method developed is based on convolutional neural networks with a U-Net architecture (Ronneberger et al., 2015) and uses predictors from TOPAZ4 SIC forecasts, ECMWF weather forecasts, and SIC observations from the Advanced Microwave Scanning Radiometer 2 (AMSR2). It is evaluated by assessing the improvement compared to the raw TOPAZ4 forecasts and to the predictions from similar deep learning models that do not use predictors from TOPAZ4 sea ice forecasts. In Sect. 2, the data, the development of the deep learning models, and the methods used for evaluating the forecasts are presented. The results are then described in Sect. 3, followed by the discussion and conclusions in Sect. 4.

2.1 Sea ice observations

The AMSR2 sensor is a conically scanning, dual-polarized microwave radiometer that measures the microwave radiation emitted by the Earth's surface at several frequencies. AMSR2 SIC data are currently assimilated into sea ice prediction systems, such as the Barents-2.5km model (Röhrs et al., 2023; Durán Moro et al., 2024), owing to the sensor's daily coverage of the polar regions and its independence from solar illumination, which enables year-round observations. The AMSR2 SIC observations used in this study were produced using the resolution-enhancing (reSICCI3LF) algorithm, which was initially developed for the European Space Agency Climate Change Initiative (ESA CCI; Lavergne et al., 2021) and adapted for the AMSR2 mission in the Sea Ice Retrievals and data Assimilation in NOrway (SIRANO) project (Rusin et al., 2024a). This algorithm aims to produce high-resolution SIC fields with low measurement uncertainties by combining two retrievals. The 19 and 37 GHz channels are used to derive a coarse SIC field (15 km) with low measurement uncertainties, whereas the 89 GHz channels are used to derive a higher-resolution SIC field (∼ 5 km) with larger uncertainties. The high-resolution details derived from the 89 GHz channels are then added to the coarse SIC field, yielding a SIC field with low measurement uncertainties at a higher spatial resolution (∼ 5 km). Using this algorithm, daily averaged pan-Arctic SIC fields were produced for the period 2012–2022 on a 5 km Equal-Area Scalable Earth 2.0 (EASE2) grid. In this study, these new observations are used as a reference for evaluating the SIC forecasts, as well as for deriving some of the predictors and the target variable of the deep learning models.
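The detail-injection step can be illustrated with a simplified NumPy sketch. It assumes that both retrievals are already on nested grids, the coarse grid being three times coarser than the fine one (matching the 15 km and ∼ 5 km resolutions quoted above), and it uses a plain block average to define the coarse-scale part of the 89 GHz field. The actual reSICCI3LF algorithm (Lavergne et al., 2021; Rusin et al., 2024a) has its own smoothing and processing steps, so `combine_sic` and its helpers are hypothetical illustrations, not the operational code.

```python
import numpy as np


def block_mean(field, factor):
    """Average non-overlapping factor x factor blocks (coarsening)."""
    ny, nx = field.shape
    return field.reshape(ny // factor, factor, nx // factor, factor).mean(axis=(1, 3))


def upsample(field, factor):
    """Nearest-neighbour upsampling: repeat each cell factor x factor times."""
    return np.repeat(np.repeat(field, factor, axis=0), factor, axis=1)


def combine_sic(sic_coarse, sic_89ghz, factor=3):
    """Add fine-scale details of the noisy 89 GHz SIC (%) to the low-noise
    coarse SIC (%), then keep the result within [0, 100].

    sic_coarse: (ny, nx) field on the coarse grid (assumed 15 km)
    sic_89ghz:  (ny * factor, nx * factor) field on the fine grid (assumed 5 km)
    """
    # Details = high-resolution field minus its own coarse-scale mean
    # (simplifying assumption: a block average stands in for the real smoothing).
    details = sic_89ghz - upsample(block_mean(sic_89ghz, factor), factor)
    combined = upsample(sic_coarse, factor) + details
    return np.clip(combined, 0.0, 100.0)
```

Because the details average to zero within each coarse cell, the combined field keeps the coarse-scale values of the low-noise retrieval wherever no clipping occurs; only the structure inside each coarse cell comes from the 89 GHz channels.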

In addition, the ice charts produced by the Ice Service of the Norwegian Meteorological Institute (https://cryo.met.no/en/latest-ice-charts (last access: 25 April 2024); JCOMM Expert Team on sea ice, 2017) are used as an independent data set for evaluating the AMSR2 SIC observations and the forecasts developed in this study. The ice charts are manually drawn by ice analysts using several types of remote sensing data. Due to their high spatial resolution, synthetic-aperture radar (SAR) images constitute the main source of information where they are available. Elsewhere, visible and infrared observations are given priority, while passive microwave retrievals are used where no other observations are available. For evaluating the SIC forecasts, the ice charts were interpolated onto the grid used for the deep learning models using nearest-neighbor interpolation. It is worth noting that the ice charts provide SIC categories and are not produced on weekends. Therefore, the number of ice charts available in 2022 for evaluating the SIC forecasts varies depending on lead time (between 144 and 243) and is considerably lower than the number of AMSR2 SIC observations available.
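Nearest-neighbor interpolation, used here to bring the ice charts (and, in Sect. 2.2, all predictors) onto the 12.5 km model grid, copies to each target grid point the value of the closest source point. The brute-force sketch below assumes that source and target coordinates are already expressed in the same projected plane (e.g., kilometers on a polar stereographic grid); `nearest_neighbor_regrid` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def nearest_neighbor_regrid(src_x, src_y, src_values, dst_x, dst_y):
    """Assign to each target point the value of the closest source point.

    Coordinates are assumed to be projected (e.g., km), so Euclidean
    distance is meaningful. Brute force: fine for small grids; for a full
    544 x 544 target grid a k-d tree would avoid the all-pairs distances.
    """
    src = np.column_stack([np.ravel(src_x), np.ravel(src_y)])
    dst = np.column_stack([np.ravel(dst_x), np.ravel(dst_y)])
    # Squared distances between every target point and every source point.
    d2 = ((dst[:, None, :] - src[None, :, :]) ** 2).sum(axis=-1)
    nearest = d2.argmin(axis=1)
    return np.ravel(src_values)[nearest].reshape(np.shape(dst_x))
```

A practical consequence of this choice is that categorical ice chart values are copied unchanged rather than averaged, so no artificial intermediate categories appear on the target grid.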

2.2 Predictors and data sets used for the deep learning models

The post-processing method developed in this study is applied to TOPAZ4 sea ice forecasts. TOPAZ4 is a numerical prediction system producing 10 d forecasts at a 12.5 km resolution for the Arctic and the North Atlantic with hourly time steps (Sakov et al., 2012). It consists of a sea ice model with one thickness category and an elastic–viscous–plastic rheology (Hunke and Dukowicz, 1997) coupled with version 2.2 of the Hybrid Coordinate Ocean Model (HYCOM; Bleck, 2002; Chassignet et al., 2006). Sea ice and oceanic observations are assimilated weekly using an ensemble Kalman filter, and the ocean surface is forced by ECMWF high-resolution weather forecasts.

Wind and temperature high-resolution forecasts (HRES) from the ECMWF Integrated Forecasting System (IFS) are also used as predictors. These forecasts have lead times of up to 10 d and are produced four times per day, but only the forecasts starting at 00:00 UTC are used in this study. Because IFS HRES has been upgraded over time, forecasts produced by different model cycles have been used; notably, the spatial resolution changed from about 16 to 9 km in March 2016 (https://www.ecmwf.int/en/forecasts/documentation-and-support/changes-ecmwf-model, last access: 25 April 2024).

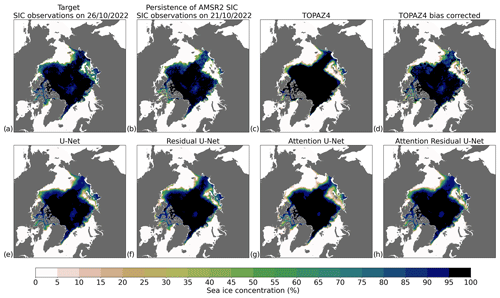

In this work, the deep learning models have been developed using eight predictors that can be divided into three categories (Table 1). First, two predictors are derived from AMSR2 SIC observations acquired before the forecast start date: the SIC observed on the day preceding the forecast start date and the SIC trend calculated over the 5 d preceding the forecast start date (in % d−1). The second category consists of ECMWF weather forecasts averaged between the forecast start date and the predicted lead time: the 2 m temperature and the x and y components of the 10 m wind, on the grid used for the deep learning models. The last category consists of predictors from the TOPAZ4 prediction system: the SIC forecasts for the predicted lead time, the difference between TOPAZ4 SIC during the first daily time step and the SIC observed the day before (hereafter referred to as “TOPAZ4 initial errors”), and the land–sea mask (constant predictor). Since the predictors from weather and sea ice forecasts vary with lead time, a separate deep learning regression model was developed for each lead time from 1 to 10 d. Before developing the deep learning models, all the predictors and the SIC observations used for the target variable were projected onto a common grid using nearest-neighbor interpolation. This grid has the same projection and spatial resolution (12.5 km) as the TOPAZ4 prediction system but is smaller (544 × 544 grid points) because the U-Net architecture requires the grid dimensions to be divisible by 2 several times (here 544 = 17 × 2⁵). Nevertheless, this grid includes all the grid points that can potentially be covered by sea ice in the TOPAZ4 prediction system. All the grid points of the predictors provided to the neural networks must contain valid values, meaning that the land grid points must be filled with valid values for oceanic variables. In this study, the land grid points were considered to be ice-free ocean in the predictors. Furthermore, all the predictors and the target variable have been normalized (resulting in values ranging from 0 to 1) before being provided to the neural networks, using the minimum and maximum values of each variable computed over the training data set.
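The predictor preparation described above can be sketched with NumPy. Several details are assumptions not stated in the text: the 5 d SIC trend is taken as the least-squares slope over the five daily fields, the weather forecasts are assumed to be available as daily fields indexed by lead day, and ice-free ocean on land points is encoded as 0 % SIC. All function names are hypothetical.

```python
import numpy as np


def sic_trend(sic_last5):
    """SIC trend (% per day) over the 5 days before the forecast start date.

    sic_last5: array (5, ny, nx), oldest day first.
    Assumption: least-squares slope; an end-point difference is another option.
    """
    n, ny, nx = sic_last5.shape
    days = np.arange(n, dtype=float)
    # np.polyfit fits every grid point (column) at once; row 0 holds the slopes.
    slope, _ = np.polyfit(days, sic_last5.reshape(n, -1), deg=1)
    return slope.reshape(ny, nx)


def lead_time_mean(daily_forecast, lead_time):
    """Mean of a daily weather forecast (n_lead, ny, nx) between the start
    date and the predicted lead time (in days, 1-based)."""
    return daily_forecast[:lead_time].mean(axis=0)


def fill_land(field, land_mask, value=0.0):
    """Treat land points as ice-free ocean (SIC = 0 %) in oceanic predictors."""
    return np.where(land_mask, value, field)


def minmax_normalize(field, train_min, train_max):
    """Scale with the minimum and maximum computed on the training set only."""
    return (field - train_min) / (train_max - train_min)
```

Because the minimum and maximum come from the training set only, values in the validation and test sets can fall slightly outside [0, 1]; using training statistics avoids leaking information from the evaluation years into the models.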

Although TOPAZ4 produces 10 d forecasts every day, only the forecasts starting on Thursdays (when data assimilation is performed) are stored in the long-term archive. Therefore, weekly data from the period 2013–2020 were used for training the deep learning models, resulting in about 400 forecasts for each lead time. However, we stored the daily TOPAZ4 forecasts from 2021 onward, and daily data were therefore used for the validation and test data sets, which consist of the forecasts from 2021 and 2022, respectively.
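The resulting split can be made concrete with a short sketch listing candidate forecast start dates: every Thursday from 2013 to 2020 for training, and every day of 2021 and 2022 for validation and testing. The actual number of training samples (about 400) also depends on data availability, which the sketch does not model; `dates_between` is a hypothetical helper.

```python
from datetime import date, timedelta

THURSDAY = 3  # date.weekday(): Monday = 0, ..., Sunday = 6


def dates_between(start, end, weekday=None):
    """All dates from start to end (inclusive), optionally one weekday only."""
    out, d = [], start
    while d <= end:
        if weekday is None or d.weekday() == weekday:
            out.append(d)
        d += timedelta(days=1)
    return out


# Weekly (Thursday) start dates for training, daily start dates afterwards.
train = dates_between(date(2013, 1, 1), date(2020, 12, 31), weekday=THURSDAY)
valid = dates_between(date(2021, 1, 1), date(2021, 12, 31))
test = dates_between(date(2022, 1, 1), date(2022, 12, 31))
```

The calendar alone allows 418 Thursday start dates over 2013–2020, so the single test year contains almost as many start dates as the eight training years.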

2.3 Development of the deep learning models

U-Net neural networks are designed to perform image segmentation tasks using an encoder–decoder architecture (Ronneberger et al., 2015) and have been successfully used in earlier studies for sea ice forecasting (Andersson et al., 2021; Grigoryev et al., 2022; Keller et al., 2023; Kvanum et al., 2024). Several variations of the original U-Net architecture of Ronneberger et al. (2015) are tested in our study. First, some models were developed using residual connections (He et al., 2016) in the convolutional blocks (meaning that each block learns a residual with respect to its input), which have been shown to ease neural network training (He et al., 2016). It is worth noting that the residual U-Net architecture was used by Keller et al. (2023) for predicting the sea ice extent in the Beaufort Sea. Furthermore, we evaluate the impact of adding, in the decoder, the attention blocks introduced by Oktay et al. (2018), which are designed to give more weight (attention) to areas that are challenging to predict (these regions are identified by the attention blocks during training). The benefit of using attention blocks for sea ice forecasting was already shown by Ren et al. (2022), who developed an attention block (different from the one used in this study) for sea ice prediction with a fully convolutional network. Finally, average pooling was used in the downsampling blocks of the encoder instead of max pooling because it gave slightly better performance during the tuning phase (see the Supplement).
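An additive attention gate of the kind proposed by Oktay et al. (2018), together with a residual connection, can be sketched in NumPy for a single sample. This is a simplified illustration under stated assumptions: 1 × 1 convolutions are written as channel-mixing matrix products, biases and batch normalization are omitted, and the gating (decoder) features are assumed to be already resampled to the resolution of the skip features. The function names are hypothetical and not taken from the authors' repository.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution: x (C_in, H, W), w (C_out, C_in) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def attention_gate(skip, gate, w_x, w_g, w_psi):
    """Additive attention gate (simplified, no biases).

    skip: encoder features (C, H, W) arriving through the skip connection
    gate: decoder features (C_g, H, W), assumed already at the same H, W
    Returns the re-weighted skip features and the coefficients in (0, 1).
    """
    q = relu(conv1x1(skip, w_x) + conv1x1(gate, w_g))  # (C_int, H, W)
    alpha = sigmoid(conv1x1(q, w_psi))                  # (1, H, W)
    return skip * alpha, alpha                          # broadcast over channels


def residual_block(x, transform):
    """Residual connection: the block learns F(x) and outputs x + F(x).
    Assumes transform preserves the shape of x."""
    return x + transform(x)
```

The coefficients `alpha` lie between 0 and 1 at every grid point, so the gate can only down-weight skip-connection features; during training the network learns where to keep them, which is how the attention can concentrate on areas that are hard to predict.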

In the original U-Net architecture (Ronneberger et al., 2015), the number of convolutional filters is doubled at every level of the encoder and halved at every level of the decoder. We used the same strategy, with 32 convolutional filters in the first layer and the He weight initialization technique (He et al., 2015). Five downsampling and five upsampling operations were used in the neural networks, resulting in feature maps of 17 × 17 grid points in the bottleneck (compared to 544 × 544 grid points in the predictors). The models were trained for 100 epochs with a batch size of 4. An Adam optimizer was used with an initial learning rate of 0.005, which was then divided by 2 every 25 epochs. The mean squared error was used as the loss function, and the model version with the best validation loss was selected during training in order to avoid overfitting. Training the models, which contain between 31 and 39 million parameters, takes about 3 h on a 12 GB GPU (NVIDIA Tesla P100 PCIe). Further details regarding the model architectures can be found in the code used for creating the deep learning models, which is publicly available in a GitHub repository (see the “Code availability” section).
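The architecture arithmetic and the training schedule described above can be checked with a few lines of Python. The sketch assumes that the spatial size halves and the number of filters doubles at each of the five downsampling steps (so the bottleneck would have 32 × 2⁵ = 1024 filters, an inference from the doubling rule rather than a number stated in the text) and that epochs are counted from 0; `unet_levels` and `learning_rate` are hypothetical helpers, not part of the published code.

```python
def unet_levels(input_size=544, first_filters=32, n_down=5):
    """(spatial size, number of filters) at each encoder level, assuming
    sizes halve and filters double at every downsampling step."""
    if input_size % 2 ** n_down:
        raise ValueError("input size must be divisible by 2**n_down")
    levels = []
    size, filters = input_size, first_filters
    for _ in range(n_down + 1):
        levels.append((size, filters))
        size //= 2
        filters *= 2
    return levels


def learning_rate(epoch, initial=0.005, halve_every=25):
    """Step decay: divide the learning rate by 2 every 25 epochs (0-based)."""
    return initial / 2 ** (epoch // halve_every)


for size, filters in unet_levels():
    print(f"{size} x {size} grid points, {filters} filters")
```

The divisibility check reflects why the grid was cropped to 544 × 544: since 544 = 17 × 2⁵, five halvings land exactly on the 17 × 17 bottleneck, whereas a size not divisible by 32 would leave encoder and decoder feature maps misaligned at the skip connections.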

2.4 Verification scores

The forecasts are evaluated using two verification scores in this study. In order to analyze the full range of SIC values in the forecasts and to strongly penalize large errors, the root mean square error (RMSE) is calculated over all oceanic grid points. In addition, the sea ice edge position is evaluated. The ice edge is defined here by the 15 % SIC contour (excluding coastlines) when the AMSR2 SIC observations are used as reference, and by the 10 % SIC contour when the forecasts are compared to the ice charts from the Norwegian Meteorological Institute (the 10 % SIC contour separates two ice chart categories). The ice edge positions are evaluated using the integrated ice edge error (IIEE; Goessling et al., 2016) divided by the observed ice edge length (hereafter referred to as the “ice edge distance error”), and the ice edge length is assessed using the method introduced by Melsom et al. (2019). While the IIEE measures the area of mismatch between two data sets, the ice edge distance error (Melsom et al., 2019) assesses the mean distance between two ice edges. The ice edge distance error also has the advantage of being less seasonally dependent than the IIEE, which is strongly influenced by the ice edge length (Goessling et al., 2016; Palerme et al., 2019). It is therefore more suitable than the IIEE for comparing and averaging forecast scores from different seasons. Furthermore, the Wilcoxon signed-rank test is used to analyze the statistical significance of the differences between the forecast scores, because it is suited to paired observations (the same observations are used for evaluating different forecasts) and to non-parametric data (the SIC errors are not normally distributed). This analysis was performed using a two-tailed test with a significance level of 0.05. It is worth noting that the Wilcoxon signed-rank test assesses whether the paired differences between the errors are distributed symmetrically around zero, and not whether the mean errors differ.
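The two scores can be sketched with NumPy for a single forecast and observation field on the 12.5 km grid. The SIC threshold (15 % or 10 %) is a parameter, and the IIEE follows Goessling et al. (2016) as the area where forecast and observations disagree on whether the SIC exceeds the threshold. The edge length function is a crude, hypothetical stand-in (it counts faces between ice and open-water ocean cells, ignoring coastlines) for the method of Melsom et al. (2019), which is not detailed here; treating SIC equal to the threshold as ice is also an assumption.

```python
import numpy as np


def rmse(forecast, observed, ocean_mask):
    """RMSE of SIC (%) over oceanic grid points only."""
    diff = (forecast - observed)[ocean_mask]
    return float(np.sqrt(np.mean(diff ** 2)))


def iiee(forecast, observed, ocean_mask, threshold=15.0, dx=12.5):
    """Integrated ice edge error (km^2): ocean area where the forecast and
    the observations disagree on SIC >= threshold."""
    mismatch = ((forecast >= threshold) != (observed >= threshold)) & ocean_mask
    return float(mismatch.sum() * dx ** 2)


def edge_length(observed, ocean_mask, threshold=15.0, dx=12.5):
    """Crude ice edge length (km), hypothetical stand-in for Melsom et al.
    (2019): count cell faces between ice and open-water ocean cells, so
    faces against land (coastlines) are ignored."""
    ice = (observed >= threshold) & ocean_mask
    water = (observed < threshold) & ocean_mask
    n_faces = ((ice[:, 1:] & water[:, :-1]) | (ice[:, :-1] & water[:, 1:])).sum()
    n_faces += ((ice[1:, :] & water[:-1, :]) | (ice[:-1, :] & water[1:, :])).sum()
    return float(n_faces * dx)


def ice_edge_distance_error(forecast, observed, ocean_mask, threshold=15.0, dx=12.5):
    """IIEE divided by the observed ice edge length (km)."""
    length = edge_length(observed, ocean_mask, threshold, dx)
    if length == 0:
        return float("nan")
    return iiee(forecast, observed, ocean_mask, threshold, dx) / length
```

For example, if a straight observed ice edge is displaced by one grid cell in the forecast, the mismatch area divided by the edge length gives exactly 12.5 km, which is why this score can be read as a mean distance between ice edges.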

2.5 Benchmark forecasts

The performance of the deep learning models is evaluated by assessing the improvement compared to the raw TOPAZ4 forecasts. In addition, several benchmark forecasts are used as reference. First, persistence of the AMSR2 SIC observations from the day preceding the forecast start date (hereafter referred to as “persistence of AMSR2 SIC”) is used and can be considered the baseline that a forecast must outperform to be skillful. When the forecasts are evaluated using the ice charts as reference, a similar benchmark forecast consisting of persistence of the ice charts from the day preceding the forecast start date is also used (hereafter referred to as “persistence of the ice charts”). The second benchmark forecast (hereafter referred to as “anomaly persistence”) is obtained by calculating the SIC anomalies of the AMSR2 observations relative to climatology on the day preceding the forecast start date and adding these initial anomalies to the climatology of the target date. Values lower than 0 % and higher than 100 % are then set to 0 % and 100 %, respectively. All the full years between the launch of AMSR2 (May 2012) and the test period (2022) were used for calculating the climatology, resulting in a 9-year period (2013–2021). The last benchmark forecast is obtained by calculating the difference between TOPAZ4 SIC during the first daily time step and the SIC observed the day before (in order to only use observations available at the forecast start date) and then subtracting this difference from the TOPAZ4 forecasts for each lead time (hereafter referred to as “TOPAZ4 bias corrected”). The resulting values lower than 0 % and higher than 100 % are then set to 0 % and 100 %, respectively. Note that this forecast is equal to persistence of AMSR2 SIC for a 1 d lead time.
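The three benchmark forecasts reduce to simple array operations. The minimal sketch below assumes that all fields are SIC in % on the common grid and that the daily climatology (the 2013–2021 mean for each calendar day) has already been computed; the function names are hypothetical.

```python
import numpy as np


def persistence(sic_day_before):
    """Persistence: the last observed SIC field is used for every lead time."""
    return sic_day_before.copy()


def anomaly_persistence(sic_day_before, clim_day_before, clim_target):
    """Add the initial anomaly (relative to climatology) to the climatology
    of the target date, then clip to the physical range [0, 100] %."""
    anomaly = sic_day_before - clim_day_before
    return np.clip(clim_target + anomaly, 0.0, 100.0)


def topaz4_bias_corrected(topaz_forecast, topaz_first_step, sic_day_before):
    """Subtract the initial error (TOPAZ4 first daily time step minus the SIC
    observed the day before) from the TOPAZ4 forecast for one lead time."""
    initial_error = topaz_first_step - sic_day_before
    return np.clip(topaz_forecast - initial_error, 0.0, 100.0)
```

If the TOPAZ4 field for a 1 d lead time coincides with the first daily time step, the correction returns the observations unchanged, which is consistent with the note above that this benchmark equals persistence of AMSR2 SIC for a 1 d lead time.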

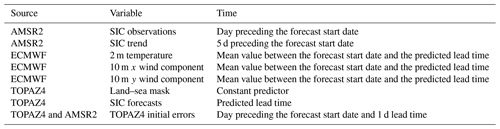

Figure 1. Evaluation of the ice edge positions from the new AMSR2 sea ice concentration observations used in this study and from the product OSI-408-a from the Ocean and Sea Ice Satellite Application Facility (OSI SAF) during the period 2017–2022. The ice charts produced by the Ice Service of the Norwegian Meteorological Institute are used as reference, and the analysis has therefore been done in the area covered by the ice charts (European Arctic). The ice edge distance error (see Sect. 2.4) is used for calculating the mean distance between the ice edges, and the monthly mean distances are reported in this figure. The red and blue lines correspond to the ice edge distance errors after all products were projected onto the 10 km OSI-408-a grid. The black line shows the ice edge distance error for the new AMSR2 SIC product on its 5 km grid, thus retaining information at the finer resolution.

3.1 Sea ice concentration observations

The new AMSR2 observations were evaluated and compared to the Ocean and Sea Ice Satellite Application Facility (OSI SAF) product OSI-408-a, which is also based on AMSR2 retrievals but with a spatial resolution of 10 km. The position of the ice edge (defined here by the 10 % SIC contour) was evaluated during the period from 2017 to 2022 using the ice charts from the Norwegian Meteorological Institute (JCOMM Expert Team on sea ice, 2017) as reference. All the data sets were projected onto the grid of the OSI-408-a product using nearest-neighbor interpolation, but only the area covered by the ice charts (European Arctic) was taken into account for this evaluation. The mean distances between the ice edges from the AMSR2 products and from the ice charts were assessed using the ice edge distance error. Overall, the new AMSR2 data set outperforms the OSI-408-a product (Fig. 1), with mean values of 16.8 km and 20.6 km for the new AMSR2 observations and the OSI-408-a product, respectively. Moreover, the improvement over the OSI-408-a product is particularly large near the sea ice minimum (August, September, and October) compared to the rest of the year. In order to assess the impact of the resolution, a supplementary analysis was performed on the 5 km grid of the new AMSR2 SIC observations, with the ice charts interpolated onto this grid. On the 5 km grid, the mean distance between the ice edges from the new AMSR2 observations and the ice charts is 15.4 km, adding further confidence in the quality of the new product.

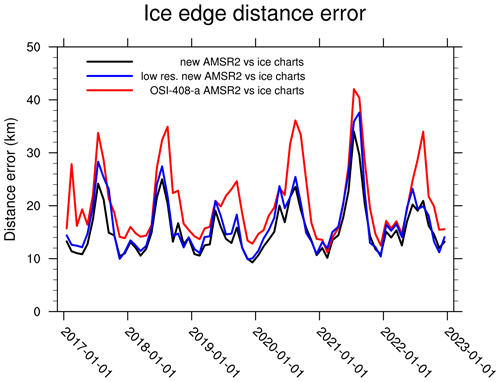

Figure 2. Sea ice concentration forecasts over 5 d from different forecasting systems initialized on 22 October 2022. (a) AMSR2 sea ice concentration observations on 26 October 2022 (target date). (b) AMSR2 sea ice concentration observations on the day preceding the forecast start date (21 October 2022). (c–h) 5 d sea ice concentration forecasts from TOPAZ4 (c), TOPAZ4 bias corrected (d), and the deep learning models with the U-Net (e), Residual U-Net (f), Attention U-Net (g), and Attention Residual U-Net (h) architectures.

Figure 3. Comparison of the performances of the deep learning models with different architectures during 2021 (validation period). (a) The root mean square error (RMSE) of the sea ice concentration. (b) The mean error for the sea ice edge position defined by the 15 % sea ice concentration contour (the ice edge distance error). AMSR2 sea ice concentration observations are used as reference.

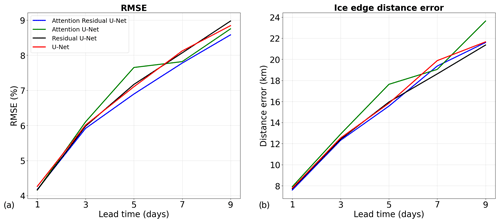

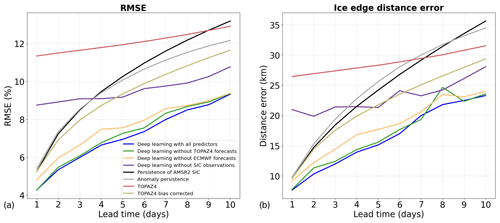

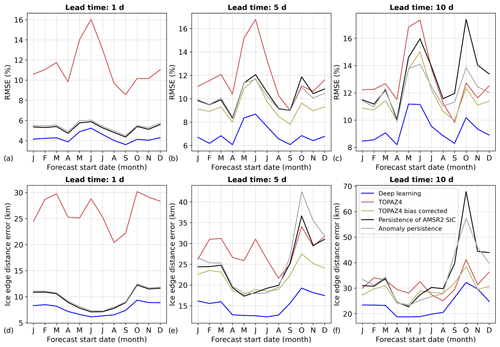

Figure 4. Performances of the deep learning models with the Attention Residual U-Net architecture during 2022 (test period) using the AMSR2 sea ice concentration observations as reference. The deep learning models using all predictors are shown by the blue curves. The models that do not use predictors from TOPAZ4 sea ice forecasts (sea ice concentration forecasts and initial errors) are shown by the green curves. The models that do not use predictors from ECMWF weather forecasts (2 m temperature and wind) are shown by the yellow curves. The models that do not use predictors from sea ice observations (AMSR2 sea ice concentration, AMSR2 sea ice concentration trend, and TOPAZ4 initial errors) are shown by the purple curves.

3.2 Model architectures

The original U-Net architecture (with average pooling instead of max pooling) is compared to architectures including residual and attention blocks in Figs. 2 and 3. It is worth noting that the architecture influences the number of model parameters, which can also influence the performances. The number of parameters varies from 31 million for the U-Net models to 39 million for the Attention Residual U-Net models, and the models with the Residual U-Net and Attention U-Net architectures contain about 33 and 37 million parameters, respectively. Figure 2 shows 5 d forecasts initialized on 22 October 2022 from TOPAZ4, TOPAZ4 bias corrected, and the deep learning models developed with different architectures. Between the day preceding the forecast start date (21 October 2022) and the target date (26 October 2022), the sea ice cover increased in the Laptev and East Siberian seas, as well as in Baffin Bay. Moreover, a few large polynyas were located around the New Siberian Islands on the target date in an area that was not covered by sea ice on the day preceding the forecast start date. While all the deep learning models, as well as TOPAZ4 and TOPAZ4 bias corrected, reproduce an increase in sea ice cover in the Laptev and East Siberian seas, only the deep learning models predict an increase in sea ice cover in Baffin Bay. The model with the Attention U-Net architecture produces a very small positive SIC (often lower than 2 %) in large areas where no sea ice is observed on the target date, a pattern often observed with this model for other dates as well. Nevertheless, adding residual blocks to this model (resulting in the Attention Residual U-Net architecture) seems to consistently improve the predictions in these areas. Furthermore, the model with the Attention Residual U-Net architecture produces the most realistic forecasts of the polynyas among the deep learning models.

In Fig. 3, the performance of the deep learning models with different architectures is evaluated during the validation period (2021). For a 1 d lead time, the different architectures produce forecasts with similar performance, except the U-Net architecture, whose forecasts have an RMSE about 2 % larger. The models with the Attention Residual U-Net architecture have the lowest RMSE for longer lead times and the lowest errors for the position of the ice edge for lead times up to 5 d. Therefore, the Attention Residual U-Net architecture has been selected for the rest of this study despite its higher errors for the position of the ice edge for 7 and 9 d lead times compared to the forecasts produced using the Residual U-Net architecture. Furthermore, it is worth noting that the forecasts produced using the Attention Residual U-Net architecture have a lower RMSE and lower errors for the position of the ice edge than the forecasts from the models with the U-Net architecture for all lead times. These differences are statistically significant (p value from the Wilcoxon signed-rank test < 0.05) for all lead times and metrics, except for the ice edge distance error for a 9 d lead time.
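The significance statements above rely on the Wilcoxon signed-rank test described in Sect. 2.4. The sketch below implements the two-sided test on paired daily errors with the large-sample normal approximation and average ranks for ties, without tie or continuity corrections; these simplifications are assumptions, and in practice a library routine such as `scipy.stats.wilcoxon` (which can use the exact null distribution for small samples) would be preferable. With roughly one paired score per day of the validation or test year, the normal approximation is expected to be adequate.

```python
import math

import numpy as np


def rank_average(values):
    """Ranks starting at 1; tied values receive their average rank."""
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def wilcoxon_signed_rank(errors_a, errors_b):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal
    approximation). Returns (W+, p value)."""
    d = np.asarray(errors_a, float) - np.asarray(errors_b, float)
    d = d[d != 0]  # zero differences are discarded
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    ranks = rank_average(np.abs(d))
    w_plus = float(ranks[d > 0].sum())
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    # Two-sided p value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    return w_plus, math.erfc(abs(z) / math.sqrt(2.0))
```

Because only the signs and ranks of the paired differences enter the statistic, a few days with very large errors cannot dominate the result, which matches the motivation given in Sect. 2.4 for using this test with non-normally distributed SIC errors.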

3.3 Performances of the deep learning models

In Fig. 4, the predictions from the models with the Attention Residual U-Net architecture are compared to the benchmark forecasts during the test period (2022) using AMSR2 SIC observations as reference. They significantly outperform all the benchmark forecasts for all lead times. The RMSE is improved on average by 41 % compared to TOPAZ4 (between 28 % and 62 % depending on lead time), by 29 % compared to persistence of AMSR2 SIC (between 19 % and 33 %), by 23 % compared to TOPAZ4 bias corrected (between 19 % and 26 %), and by 27 % compared to anomaly persistence (between 21 % and 31 %). Furthermore, the ice edge distance error is reduced on average by 44 % compared to TOPAZ4, by 25 % compared to TOPAZ4 bias corrected, by 32 % compared to persistence of AMSR2 SIC, and by 34 % compared to anomaly persistence.

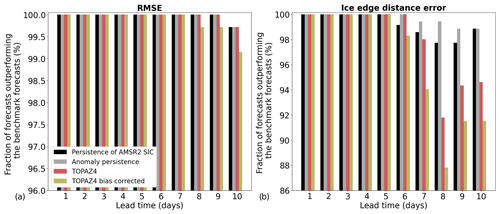

Figure 5. Fraction of days in 2022 (test period) during which the forecasts from the models with the Attention Residual U-Net architecture outperform the different benchmark forecasts when the forecasts are evaluated with the RMSE (a) and with the ice edge distance error (b). AMSR2 sea ice concentration observations are used as reference.

In order to assess the impact of the different data sets used in the predictors (observations, sea ice, and weather forecasts), other deep learning models were developed without including either predictors from TOPAZ4 sea ice forecasts (the SIC forecasts and TOPAZ4 initial errors), predictors from ECMWF weather forecasts (temperature and wind forecasts), or predictors from AMSR2 SIC observations (the SIC during the day preceding the forecast start date, the SIC trend, and TOPAZ4 initial errors). These models have the same architecture and hyperparameters as the models using all predictors, and their performances are also shown in Fig. 4. Note that TOPAZ4 initial errors are considered to be a predictor from TOPAZ4 sea ice forecasts and from AMSR2 SIC observations in this experiment since both data sets are needed to create this predictor. Overall, the predictions are much more impacted by dropping ECMWF weather forecasts than by removing TOPAZ4 sea ice forecasts. On average, the relative increase in the RMSE is 2.1 % if the predictors from TOPAZ4 sea ice forecasts are removed compared to 7.7 % if the predictors from ECMWF weather forecasts are removed. The differences in the RMSE between the models using all predictors and those developed without ECMWF weather forecasts are statistically significant for all lead times (p value from the Wilcoxon signed-rank test < 0.05). When comparing the models using all predictors to those developed without TOPAZ4 sea ice forecasts, the differences in the RMSE are statistically significant for all lead times, except for 1 and 10 d. Furthermore, the forecasts from ECMWF and TOPAZ4 have relatively similar impacts on the RMSE for lead times from 8 to 10 d. The differences in the RMSE between the models developed without TOPAZ4 sea ice forecasts and those developed without ECMWF weather forecasts remain statistically significant for lead times up to 9 d, but this difference is not significant for a 10 d lead time.

The impact of removing predictors from TOPAZ4 or ECMWF forecasts is stronger for the position of the ice edge, with a mean increase in the ice edge distance error of 3.5 % and 12.3 % for the predictors from TOPAZ4 and ECMWF forecasts, respectively. Nevertheless, the models developed without TOPAZ4 sea ice forecasts have slightly smaller ice edge distance errors than the models using all predictors for lead times of 7 and 9 d, and the difference in the ice edge distance error is not statistically significant for a 10 d lead time. Furthermore, removing the predictors from sea ice observations has a very strong impact on the predictions, with a mean relative increase of 39 % in the RMSE and of 55 % in the ice edge distance error.

Figure 5 shows the fraction of days in 2022 during which the forecasts produced by the deep learning models outperform the different benchmark forecasts. When the forecasts are evaluated using the RMSE, the deep learning models outperform every benchmark forecast on all days for lead times from 1 to 7 d, and on at least 99 % of the days for longer lead times. When the ice edge position is evaluated, the deep learning models outperform all benchmark forecasts on all days for lead times from 1 to 5 d. For longer lead times, they outperform persistence of AMSR2 SIC on at least 97 % of the days and the anomaly persistence forecasts on at least 98 % of the days. They also predict the ice edge position more accurately than TOPAZ4 in at least 91 % of the cases for all lead times, and more accurately than TOPAZ4 bias corrected in at least 87 % of the cases.
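The fractions in Fig. 5 count, for each lead time, the days on which the deep learning forecast has a lower error than a given benchmark. A minimal sketch, assuming that ties count as not outperforming and that days with a missing score are skipped (both choices are ours, and the input values are hypothetical):

```python
import numpy as np


def fraction_outperforming(model_errors, benchmark_errors):
    """Percentage of days on which the model error is strictly lower than the
    benchmark error. Days where either score is missing (NaN) are skipped,
    e.g. days without a reference product."""
    m = np.asarray(model_errors, dtype=float)
    b = np.asarray(benchmark_errors, dtype=float)
    valid = np.isfinite(m) & np.isfinite(b)
    if not valid.any():
        return float("nan")
    return float(100.0 * np.mean(m[valid] < b[valid]))


# Hypothetical daily ice edge distance errors (km) for one lead time
model = [20.0, 35.0, np.nan, 18.0]
topaz = [30.0, 32.0, 25.0, 40.0]
print(fraction_outperforming(model, topaz))
```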

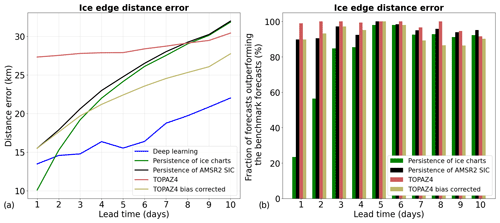

Figure 6. Performances of the deep learning models with the Attention Residual U-Net architecture during 2022 (test period) using the ice charts as reference. The ice edge position (defined by the 10 % SIC contour) is evaluated. (a) The mean ice edge distance errors depending on lead time. (b) Fraction of days in 2022 during which the forecasts from the models with the Attention Residual U-Net architecture outperform the different benchmark forecasts when the forecasts are evaluated using the ice edge distance error. It is worth noting that this evaluation is performed over the area covered by the ice charts from the Norwegian Meteorological Institute (European Arctic) and that the number of forecasts evaluated varies depending on lead time because ice charts are not produced during weekends.

To assess the performances of the SIC forecasts against independent observations, an additional evaluation was performed in the European Arctic using the ice charts from the Norwegian Meteorological Institute as reference (Fig. 6). Since the ice charts provide sea ice categories (and not the SIC as a continuous variable), only the ice edge position is evaluated in Fig. 6. On average, the forecasts from the deep learning models have an ice edge distance error 40 % lower than TOPAZ4 forecasts, 23 % lower than TOPAZ4 bias corrected, 29 % lower than persistence of AMSR2 SIC, and 22 % lower than persistence of the ice charts. While the forecasts from the deep learning models outperform TOPAZ4, TOPAZ4 bias corrected, and persistence of AMSR2 SIC for all lead times, they perform worse than persistence of the ice charts for a 1 d lead time (the ice edge distance error is 33 % larger), and only 23 % of the 1 d forecasts from the deep learning models outperform persistence of the ice charts. Nevertheless, the forecasts from the deep learning models significantly outperform persistence of the ice charts for longer lead times (p value from the Wilcoxon signed-rank test < 0.05).
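Because the ice charts only provide concentration categories, a comparison at the 10 % ice edge requires turning each category into an ice or no-ice flag. The sketch below assumes a gridded chart with hypothetical integer category codes and maps each category to the lower bound of its WMO concentration range; the actual encoding of the MET Norway ice charts may differ. The resulting mask is then compared with the forecast mask SIC ≥ 10 %.

```python
import numpy as np

# Hypothetical integer codes for ice chart categories, each mapped to the lower
# bound (%) of its WMO concentration range. The codes and the exact category
# set of the MET Norway ice charts are assumptions made for illustration.
CATEGORY_LOWER_BOUND = {
    0: 0.0,    # ice free
    1: 0.0,    # open water (less than 1/10)
    2: 10.0,   # very open drift ice (lower bound 1/10)
    3: 40.0,   # open drift ice (lower bound 4/10)
    4: 70.0,   # close drift ice (lower bound 7/10)
    5: 90.0,   # very close drift ice (lower bound 9/10)
    6: 100.0,  # fast ice
}


def chart_ice_mask(categories, threshold=10.0):
    """Binary ice mask from a gridded ice chart: True where the lower
    concentration bound of the category reaches the ice edge threshold."""
    lookup = np.full(max(CATEGORY_LOWER_BOUND) + 1, np.nan)
    for code, lower in CATEGORY_LOWER_BOUND.items():
        lookup[code] = lower
    return lookup[np.asarray(categories)] >= threshold


def forecast_ice_mask(sic, threshold=10.0):
    """Matching ice mask for a SIC forecast (in %)."""
    return np.asarray(sic) >= threshold


chart = np.array([[0, 1, 2], [3, 5, 6]])
print(chart_ice_mask(chart).astype(int))
```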

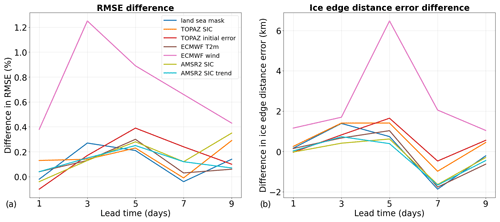

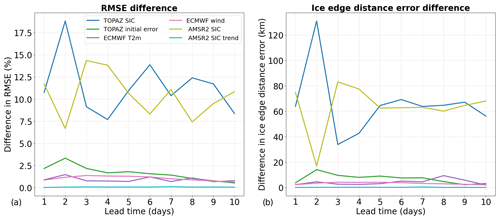

Figure 7. Differences in the root mean square error in the sea ice concentration (a) and in the ice edge distance error (b) when one of the predictor variables is not used in the deep learning models during 2022 (test period). The differences are obtained by subtracting the performance of the models using all the predictors from that of the models in which one predictor was not used. Therefore, a positive value means that adding the variable to the model improves the forecasts. AMSR2 sea ice concentration observations are used as reference.

3.4 Predictor importance

Two approaches are used in this study to analyze the impact of each predictor on the forecasts. The first method is the same as the one used in Fig. 4 to test the impact of removing some data sets from the list of predictors, except that only one predictor is removed for each model. The performances of the different models are then compared to assess the impact of each predictor on the forecasts. Due to the relatively long computing time necessary for developing the different models, this experiment has only been performed for half of the lead times. Although two predictors are used for the wind forecasts (x and y components), only one model per lead time was developed to test the impact of wind forecasts, by removing both predictors simultaneously. It is worth noting that this method can underestimate the importance of highly correlated predictors, since the remaining predictors still provide similar information to the neural network when one of them is removed. The results from this experiment are shown in Fig. 7. While all the predictors tend to reduce the RMSE averaged over all lead times, some predictors have a negative impact on the predictions of the ice edge (ECMWF 2 m temperature forecasts and AMSR2 sea ice concentration observations and trend). The wind forecasts have the largest impact among the predictors for all lead times. Removing the wind forecasts leads to a mean absolute increase in the RMSE of 0.72 % and a mean increase in the ice edge distance error of 2.49 km. The other predictors have a much lower impact on the forecasts. Among them, the predictors from TOPAZ4 (the SIC forecasts and initial errors) have the strongest impact on the predictions of the ice edge, with a mean difference in the ice edge distance error of about 0.5 km for each predictor. However, the predictors from TOPAZ4 sea ice forecasts have a slight negative impact on the 7 d forecasts of the ice edge position.
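The drop-one-predictor experiment can be illustrated with a toy regression. In the sketch below, a least-squares linear model stands in for the retrained Attention Residual U-Net (a deliberate simplification), the predictor names and data are synthetic, and the two wind components form a single group that is removed together, as in the experiment described above.

```python
import numpy as np


def fit_and_score(X_train, y_train, X_test, y_test):
    """Fit a linear model (least squares, with intercept) and return the test RMSE."""
    A = np.column_stack([X_train, np.ones(len(X_train))])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    A_test = np.column_stack([X_test, np.ones(len(X_test))])
    return float(np.sqrt(np.mean((A_test @ coef - y_test) ** 2)))


def drop_one_importance(X_train, y_train, X_test, y_test, groups):
    """RMSE increase when each predictor group is removed and the model is retrained.

    `groups` maps a name to the column indices removed together, so that the
    x and y wind components can be dropped simultaneously as a single group."""
    baseline = fit_and_score(X_train, y_train, X_test, y_test)
    importance = {}
    for name, cols in groups.items():
        keep = [j for j in range(X_train.shape[1]) if j not in cols]
        score = fit_and_score(X_train[:, keep], y_train, X_test[:, keep], y_test)
        importance[name] = score - baseline  # positive: the group helps
    return importance


# Synthetic predictors: wind_x, wind_y, t2m, sic_obs (t2m is deliberately useless)
rng = np.random.default_rng(1)
X = rng.normal(size=(700, 4))
y = 2.0 * X[:, 0] - 1.0 * X[:, 1] + 0.5 * X[:, 3] + rng.normal(0.0, 0.1, 700)
groups = {"wind": [0, 1], "t2m": [2], "sic_obs": [3]}
imp = drop_one_importance(X[:500], y[:500], X[500:], y[500:], groups)
print({k: round(v, 3) for k, v in imp.items()})
```

Because the model is retrained for every removed group, redundant information carried by the remaining predictors can compensate for the removed one, which is why correlated predictors may appear less important with this method.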

Figure 8. Differences in the root mean square error in the sea ice concentration (a) and in the ice edge distance error (b) when the field from a wrong date is provided to the deep learning models for one predictor during 2022 (test period). The differences are obtained by subtracting the performance of the reference model from that of the models in which one predictor is shuffled. AMSR2 sea ice concentration observations are used as reference.

Another method, called permutation feature importance, has been used to assess the impact of the different predictors on the forecasts (Fig. 8). In this method, only the models developed using all predictors are used. When making a forecast, one predictor is randomly permuted by providing the predictor data from another forecast start date. The goal of this experiment is to test how strongly the models rely on the different predictors. Figure 8 shows that the neural networks rely heavily on TOPAZ4 SIC forecasts and AMSR2 SIC observations. Permuting the fields from these predictors produces very inaccurate forecasts, with mean absolute increases in the RMSE of 11.4 % and 10.4 % when TOPAZ4 SIC forecasts and AMSR2 SIC observations, respectively, are permuted. Similar results were obtained for the position of the ice edge, with large increases in the ice edge distance error when these predictors are permuted (65.7 and 63.3 km for TOPAZ4 SIC forecasts and AMSR2 SIC observations, respectively). Moreover, the relative importance of these two predictors seems to be anticorrelated across lead times. This suggests that the neural networks need at least one SIC field to guide the SIC predictions. Furthermore, permuting the AMSR2 SIC trend seems to have almost no impact on the forecasts, suggesting that the neural networks make only marginal use of this predictor.
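Permutation feature importance, by contrast, keeps the trained model fixed and only corrupts one input at a time. A minimal sketch, assuming predictors stored as arrays of shape (n_dates, ny, nx) and a cyclic shift of the start dates so that each date receives another date's field (the exact permutation scheme of the study is not specified here); `toy_predict` is a hypothetical linear stand-in for the neural network:

```python
import numpy as np


def permute_start_dates(field, rng):
    """Give each forecast start date the field of another start date.

    A cyclic shift by a random non-zero offset guarantees that no date keeps
    its own field. `field` has shape (n_dates, ny, nx) with n_dates >= 2."""
    shift = int(rng.integers(1, field.shape[0]))
    return np.roll(field, shift, axis=0)


def permutation_importance(predict, predictors, target, name, rng):
    """Increase in RMSE when predictor `name` is permuted; the model is not retrained.

    `predict` maps a dict of predictor arrays to SIC predictions shaped like `target`."""
    def rmse(pred):
        return float(np.sqrt(np.nanmean((pred - target) ** 2)))

    baseline = rmse(predict(predictors))
    shuffled = dict(predictors)  # shallow copy: only the permuted entry is replaced
    shuffled[name] = permute_start_dates(predictors[name], rng)
    return rmse(predict(shuffled)) - baseline


def toy_predict(p):
    """Toy 'trained model' relying mostly on SIC observations and ignoring the trend."""
    return 0.7 * p["amsr2_sic"] + 0.3 * p["topaz_sic"] + 0.0 * p["sic_trend"]


rng = np.random.default_rng(2)
names = ("amsr2_sic", "topaz_sic", "sic_trend")
predictors = {k: rng.uniform(0.0, 100.0, size=(10, 5, 5)) for k in names}
target = toy_predict(predictors)
imp = {k: permutation_importance(toy_predict, predictors, target, k, rng) for k in names}
print({k: round(v, 2) for k, v in imp.items()})
```

A predictor that the model ignores, like the trend in this toy, yields an importance of exactly zero; a predictor used only marginally would give a value close to zero.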

Figure 9. Seasonal variability in the performances of the deep learning models with the Attention Residual U-Net architecture in 2022 (test period) for different lead times (1, 5, and 10 d) when the forecasts are evaluated using the RMSE (a–c) and the ice edge distance error (d–f). AMSR2 sea ice concentration observations are used as reference.

3.5 Seasonal and spatial variabilities

Figure 9 shows the seasonal variability in the performances of the deep learning models for lead times of 1, 5, and 10 d. Overall, the deep learning models show robust results, with no clear seasonal cycle in their relative improvement compared to TOPAZ4 forecasts and persistence of AMSR2 SIC. Moreover, the deep learning models outperform all the benchmark forecasts in every month, except in November when the 10 d forecasts are evaluated using the ice edge distance error. In November, the 10 d forecasts from the deep learning models have an ice edge distance error similar to that of the TOPAZ4 bias-corrected forecasts.
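Monthly results such as those in Fig. 9 can be obtained by aggregating daily scores by calendar month. The sketch below assumes that days are grouped by the month of the forecast start date and that the relative improvement is normalized by the benchmark score, so that positive values favour the deep learning model; the scores are hypothetical.

```python
import datetime as dt
from collections import defaultdict

import numpy as np


def monthly_mean(dates, scores):
    """Average daily scores by calendar month, skipping missing (NaN) values."""
    buckets = defaultdict(list)
    for date, score in zip(dates, scores):
        if np.isfinite(score):
            buckets[date.month].append(score)
    return {month: float(np.mean(v)) for month, v in sorted(buckets.items())}


def monthly_relative_improvement(dates, model_scores, benchmark_scores):
    """Relative improvement (%) of the model over a benchmark for each month.
    Positive values mean that the model has the lower mean error."""
    model = monthly_mean(dates, model_scores)
    bench = monthly_mean(dates, benchmark_scores)
    return {m: 100.0 * (bench[m] - model[m]) / bench[m] for m in model if m in bench}


dates = [dt.date(2022, 1, 1), dt.date(2022, 1, 2), dt.date(2022, 2, 1)]
print(monthly_relative_improvement(dates, [1.0, 3.0, 3.0], [4.0, 4.0, 4.0]))  # {1: 50.0, 2: 25.0}
```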

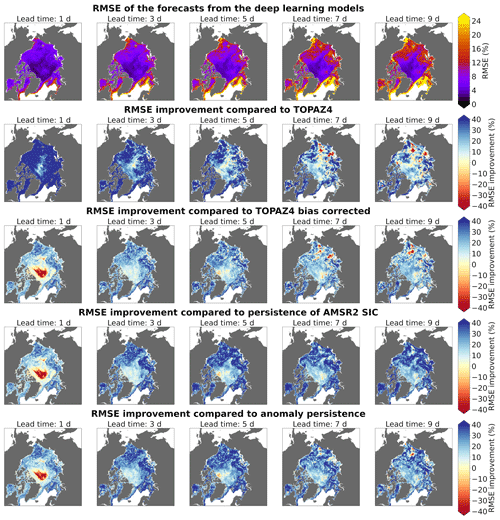

Figure 10. The root mean square error (RMSE) of the forecasts from the deep learning models with the Attention Residual U-Net architecture (first row) in 2022 (test period). Relative improvement in the RMSE (%) compared to TOPAZ4 forecasts (second row), TOPAZ4 bias corrected (third row), persistence of AMSR2 SIC (fourth row), and anomaly persistence (fifth row). Positive values mean that the deep learning forecasts outperform the benchmark forecasts. AMSR2 sea ice concentration observations are used as reference, and the grid points with less than 50 d during which the AMSR2 observations indicate some sea ice (sea ice concentration higher than 0 %) are not taken into account in this figure.

The spatial variability in the performances of the deep learning models in 2022 is shown in Fig. 10. Grid points with fewer than 50 d during which the AMSR2 observations indicate some sea ice (a SIC higher than 0 %) are excluded from the analysis in order to keep only meaningful data. However, Fig. 10 must be interpreted carefully because forecasts from different seasons, with varying sea ice edge positions, are pooled in this analysis. The forecasts from the deep learning models outperform the TOPAZ4 forecasts almost everywhere but have slightly lower performances in the East Siberian Sea than in the rest of the Arctic. It is difficult, however, to determine whether these poorer performances in the East Siberian Sea are persistent because only 1 year is used for this analysis. Furthermore, the relative improvement of the deep learning forecasts over TOPAZ4 forecasts decreases with increasing lead time, whereas the relative improvement in the RMSE compared to persistence of AMSR2 SIC and anomaly persistence increases with increasing lead time. There is an area in the central Arctic where the 1 d forecasts from the deep learning models have a larger RMSE than TOPAZ4 bias corrected, persistence of AMSR2 SIC, and anomaly persistence. However, the RMSE of the deep learning forecasts is low in this area, so these relative differences do not correspond to large absolute differences. Except for this area in the central Arctic at a 1 d lead time, the forecasts from the deep learning models outperform the benchmark forecasts almost everywhere, with larger improvements in areas where the marginal ice zone is often located.
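The maps in Fig. 10 combine a time-averaged RMSE at each grid point with the 50 d sea ice mask described above. A minimal sketch, assuming daily forecast and observation fields stacked as arrays of shape (n_days, ny, nx) and the benchmark-normalized relative improvement implied by the figure caption (positive values favour the deep learning forecasts); the toy fields are synthetic:

```python
import numpy as np


def rmse_map(forecasts, observations):
    """Grid-point RMSE over time; arrays have shape (n_days, ny, nx), SIC in %."""
    return np.sqrt(np.nanmean((forecasts - observations) ** 2, axis=0))


def spatial_relative_improvement(model, benchmark, obs, min_ice_days=50):
    """Per-grid-point RMSE of `model` and its relative improvement (%) over
    `benchmark`. Grid points where the observations show sea ice (SIC > 0 %)
    on fewer than `min_ice_days` days are set to NaN."""
    keep = np.sum(obs > 0.0, axis=0) >= min_ice_days
    rmse_model = rmse_map(model, obs)
    rmse_bench = rmse_map(benchmark, obs)
    with np.errstate(divide="ignore", invalid="ignore"):
        rel = 100.0 * (rmse_bench - rmse_model) / rmse_bench
    return np.where(keep, rmse_model, np.nan), np.where(keep, rel, np.nan)


# 60 days on a 2 x 2 grid: only point (0, 0) has sea ice on at least 50 days
obs = np.zeros((60, 2, 2))
obs[:, 0, 0] = 50.0
obs[:10, 0, 1] = 30.0
rmse_dl, rel = spatial_relative_improvement(obs + 1.0, obs + 2.0, obs)
print(rel)
```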

The forecasts from the deep learning models developed in this study significantly outperform all the benchmark forecasts for all lead times when the AMSR2 SIC observations are used as reference, with a mean RMSE 41 % lower than for TOPAZ4 forecasts and 29 % lower than for persistence of AMSR2 SIC. They also predict the ice edge position considerably better than the benchmark forecasts (the ice edge distance error is reduced by 44 % and 32 % compared to TOPAZ4 and persistence of AMSR2 SIC, respectively). Moreover, their good performances across seasons and locations, as well as the relatively similar results obtained during the validation and test periods (see the Supplement), suggest that these models are robust. While it takes less than a second to predict the sea ice concentration for one lead time on a 12 GB GPU (NVIDIA Tesla P100 PCIe) once the predictors are available, the full processing chain, including the production of the predictors on a common grid, takes about 4 min for all lead times. This is negligible compared to the time necessary for producing TOPAZ4 forecasts and therefore reasonable in an operational context. However, the production of TOPAZ4 forecasts was stopped in April 2024, and the AMSR2 SIC observations used in this study are not yet available in near real time. This prevents the operational use of the post-processing method presented here.

Using the ice charts from the Norwegian Meteorological Institute as reference, the forecasts from the deep learning models outperform all benchmark forecasts in the European Arctic for lead times longer than 1 d but are worse than persistence of the ice charts for a 1 d lead time. Since the deep learning models are trained using AMSR2 SIC observations as the target variable, their errors relative to the ice charts cannot be expected to be smaller than the differences between the two observational products (Fig. 1). While using ice charts for training deep learning models has recently been proposed by Kvanum et al. (2024), this approach does not allow for the prediction of the SIC as a continuous variable.

Whereas previous studies used the original U-Net architecture for SIC predictions (Andersson et al., 2021; Grigoryev et al., 2022), our results suggest that slightly better performances can be achieved by adding residual and attention blocks (an RMSE about 2.8 % lower on average), resulting in the Attention Residual U-Net architecture. In addition to the original U-Net architecture, Grigoryev et al. (2022) also tested a recurrent U-Net architecture in order to take into account the temporal evolution of the sea ice before the forecast start date. They obtained slightly better results with the recurrent U-Net architecture for short lead times (up to 5 d in the Labrador and Laptev seas and up to 10 d in the Barents Sea) but worse results than with the original U-Net architecture for longer lead times. Furthermore, they reported that training the recurrent U-Net models was computationally much more expensive than training the U-Net models. In this study, training the models with the Attention Residual U-Net architecture took about the same time as training the models with the U-Net architecture, and the Attention Residual U-Net models outperform the U-Net models for all lead times.
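To make the architectural difference concrete, the sketch below gives a NumPy forward pass of the two building blocks that distinguish the Attention Residual U-Net from the original U-Net: a residual block with a projected shortcut (He et al., 2016) and an additive attention gate on a skip connection (Oktay et al., 2018). It is not the authors' implementation: weights are random, normalization layers are omitted, and the gating signal is assumed to be already resampled to the resolution of the skip connection.

```python
import numpy as np


def conv1x1(x, w, b):
    """1x1 convolution: x (H, W, C_in), w (C_in, C_out), b (C_out,) or scalar."""
    return np.einsum("hwc,cd->hwd", x, w) + b


def conv3x3(x, w, b):
    """'Same' 3x3 convolution with zero padding: w (3, 3, C_in, C_out)."""
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, wd, w.shape[-1])) + b
    for i in range(3):
        for j in range(3):
            out += np.einsum("hwc,cd->hwd", xp[i:i + h, j:j + wd], w[i, j])
    return out


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def residual_block(x, p):
    """Two 3x3 convolutions plus a 1x1-projected shortcut (He et al., 2016)."""
    h = relu(conv3x3(x, p["w1"], p["b1"]))
    h = conv3x3(h, p["w2"], p["b2"])
    return relu(h + conv1x1(x, p["ws"], p["bs"]))


def attention_gate(x, g, p):
    """Additive attention gate (Oktay et al., 2018): the skip connection `x` is
    rescaled by coefficients in (0, 1) computed from `x` and the gating signal `g`."""
    q = relu(conv1x1(x, p["wx"], 0.0) + conv1x1(g, p["wg"], p["bg"]))
    alpha = sigmoid(conv1x1(q, p["wpsi"], p["bpsi"]))  # shape (H, W, 1)
    return x * alpha, alpha


rng = np.random.default_rng(3)


def init(*shape):
    """Small random weights for the illustration."""
    return rng.normal(0.0, 0.1, size=shape)


x = rng.normal(size=(8, 8, 4))  # skip connection from the encoder
g = rng.normal(size=(8, 8, 6))  # gating signal from the decoder
res_params = {"w1": init(3, 3, 4, 16), "b1": init(16), "w2": init(3, 3, 16, 16),
              "b2": init(16), "ws": init(4, 16), "bs": init(16)}
gate_params = {"wx": init(4, 8), "wg": init(6, 8), "bg": init(8),
               "wpsi": init(8, 1), "bpsi": init(1)}
res = residual_block(x, res_params)
gated, alpha = attention_gate(x, g, gate_params)
print(res.shape, gated.shape, float(alpha.min()), float(alpha.max()))
```

Both blocks keep the spatial size unchanged, which is why they can be inserted into an existing U-Net without altering its encoder and decoder resolutions.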

Including predictors from ECMWF weather forecasts (particularly the wind) has a considerable impact on the SIC predictions: removing them increases the RMSE by 7.7 % on average. This is consistent with the findings from Grigoryev et al. (2022), who assessed the impact of using predictors from weather forecasts produced by the National Centers for Environmental Prediction (NCEP) Global Forecast System (GFS) and reported significant improvements when these predictors are included in their U-Net models. Nevertheless, the impact of ECMWF weather forecasts decreases with increasing lead time in our study. This could be due to the lower skill of weather forecasts at longer lead times and to the preprocessing of these variables before they are provided to the neural networks: averaging the weather forecasts between the forecast start date and the predicted lead time could decrease the impact of these predictors for long lead times. This could be mitigated by providing the neural networks with several predictors covering different lead time ranges, but with the disadvantage of a higher computational cost.
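The averaging issue can be illustrated with a toy forecast series. The sketch below assumes 6-hourly forecast fields and a half-open averaging window (start, end]; both choices are ours. `single_window_predictor` corresponds to averaging from the start date to the target lead time, while the hypothetical `multi_window_predictors` builds several predictors over consecutive lead-time ranges, at the cost of one extra input channel per window.

```python
import numpy as np


def window_mean(forecast, step_hours, start_day, end_day):
    """Mean of a forecast field over lead times in the window (start_day, end_day].

    `forecast` has shape (n_steps, ny, nx); step k is valid at lead time k * step_hours."""
    lead_hours = np.arange(forecast.shape[0]) * step_hours
    selected = (lead_hours > 24 * start_day) & (lead_hours <= 24 * end_day)
    return forecast[selected].mean(axis=0)


def single_window_predictor(forecast, step_hours, lead_day):
    """One predictor: average from the forecast start date to the target lead time."""
    return window_mean(forecast, step_hours, 0, lead_day)


def multi_window_predictors(forecast, step_hours, lead_day, width_days=2):
    """Several predictors covering consecutive lead-time ranges (hypothetical
    alternative); each extra window adds one input channel to the network."""
    edges = list(range(0, lead_day, width_days)) + [lead_day]
    return np.stack([window_mean(forecast, step_hours, a, b)
                     for a, b in zip(edges[:-1], edges[1:])])


# Toy 10 d forecast at 6 h steps whose value equals its lead time in hours
steps = np.arange(41, dtype=float).reshape(41, 1, 1) * 6.0
print(single_window_predictor(steps, 6, 2).ravel())
print(multi_window_predictors(steps, 6, 5).ravel())
```

For a 5 d lead time with 2 d windows, the three windows return means of 27, 75, and 111 in this toy example, whereas a single average from the start date would merge them into one value and dilute the information from the last days before the target date.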

The impact of using predictors from TOPAZ4 sea ice forecasts is much lower, since removing these predictors increases the RMSE by only 2.1 % on average. While the impact of TOPAZ4 sea ice forecasts is limited in this study, this does not mean that predictors from sea ice forecasts have no greater potential. TOPAZ4 is an operational system that has been continuously developed since 2012, which can lead to inconsistencies that limit the impact of these predictors. Producing consistent re-forecasts with operational systems could increase the impact of sea ice forecasts in the development of such methods and should be encouraged in the sea ice community. Furthermore, more accurate physically based sea ice forecasts are likely to have a larger potential as predictors for machine learning models.

While this study focused on developing pan-Arctic SIC forecasts at the same resolution as the TOPAZ4 prediction system (12.5 km), there is also a need for higher-resolution (kilometer-scale) sea ice forecasts (Wagner et al., 2020). This need can be addressed by developing regional high-resolution prediction systems using deep learning, as in the recent work of Keller et al. (2023) and Kvanum et al. (2024). Most studies on sea ice forecasting using machine learning have focused on predicting the SIC and the sea ice edge (e.g., Kim et al., 2020; Fritzner et al., 2020; Liu et al., 2021; Andersson et al., 2021; Grigoryev et al., 2022; Ren et al., 2022), probably because more reliable observations are available for the SIC than for other variables such as thickness, drift, and type. However, seafarers also need predictions of other sea ice variables, such as thickness and drift, and additional efforts should be made to better predict these variables as well. Finally, probabilistic forecasts can also be developed using supervised machine learning (Haynes et al., 2023); such forecasts should have a strong potential for sea ice forecasting at short timescales and would be highly relevant for end users (Wagner et al., 2020).

The code used for this analysis is available on Zenodo (https://doi.org/10.5281/zenodo.11071206, Palerme, 2024) and in the following GitHub repository: https://github.com/cyrilpalerme/Calibration_of_short_term_SIC_forecasts/ (last access: 26 April 2024).

The AMSR2 sea ice concentration observations are available on the THREDDS server of the Norwegian Meteorological Institute (https://thredds.met.no/thredds/catalog/cosi/AMSR2_SIC/catalog.html, Rusin et al., 2024b, v1, April 2023), the TOPAZ4 forecasts are distributed by the Copernicus Marine Service (https://data.marine.copernicus.eu/products, Hackett et al., 2024), and a license is needed to download the operational forecasts from the European Centre for Medium-Range Weather Forecasts (ECMWF).

The supplement related to this article is available online at: https://doi.org/10.5194/tc-18-2161-2024-supplement.

CP handled the conceptualization, analysis (machine learning), writing (original draft), and funding acquisition. TL handled the conceptualization, analysis (remote sensing), writing, and funding acquisition. JR handled the analysis (remote sensing) and writing. AM handled the analysis (verification of satellite observations), writing, and funding acquisition. JB handled the conceptualization and writing. AFK handled the conceptualization and writing. AMS handled the production of satellite observations. LB handled the writing and funding acquisition. MM handled the writing and funding acquisition.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors would like to thank Jean Rabault Førland for great discussions and Sreenivas Bhattiprolu for sharing the codes of some deep learning models (https://github.com/bnsreenu/python_for_microscopists/, last access: 25 April 2024). Finally, we thank the two reviewers for their comments which helped us to improve the manuscript.

This work has been carried out as part of the SEAFARING project supported by the Norwegian Space Agency and the Copernicus Marine Service COSI project. The Copernicus Marine Service is implemented by Mercator Ocean in the framework of a delegation agreement with the European Union. The new AMSR2 SIC observations were developed with support from the SIRANO project (Research Council of Norway; grant no. 302917).

This paper was edited by Yevgeny Aksenov and reviewed by Valentin Ludwig and one anonymous referee.

Andersson, T. R., Hosking, J. S., Pérez-Ortiz, M., Paige, B., Elliott, A., Russell, C., Law, S., Jones, D. C., Wilkinson, J., Phillips, T., Byrne, J., Tietsche, S., Sarojini, B. B., Blanchard-Wrigglesworth, E., Aksenov, Y., Downie, R., and Shuckburgh, E.: Seasonal Arctic sea ice forecasting with probabilistic deep learning, Nat. Commun., 12, 5124, https://doi.org/10.1038/s41467-021-25257-4, 2021.

Barton, N., Metzger, E. J., Reynolds, C. A., Ruston, B., Rowley, C., Smedstad, O. M., Ridout, J. A., Wallcraft, A., Frolov, S., Hogan, P., Janiga, M. A., Shriver, J. F., McLay, J., Thoppil, P., Huang, A., Crawford, W., Whitcomb, T., Bishop, C. H., Zamudio, L., and Phelps, M.: The Navy's Earth System Prediction Capability: A New Global Coupled Atmosphere-Ocean-Sea Ice Prediction System Designed for Daily to Subseasonal Forecasting, Earth Space Sci., 8, e2020EA001199, https://doi.org/10.1029/2020EA001199, 2021.

Bleck, R.: An oceanic general circulation model framed in hybrid isopycnic-Cartesian coordinates, Ocean Model., 4, 55–88, https://doi.org/10.1016/S1463-5003(01)00012-9, 2002.

Chassignet, E. P., Hurlburt, H. E., Smedstad, O. M., Halliwell, G. R., Hogan, P. J., Wallcraft, A. J., and Bleck, R.: Ocean Prediction with the Hybrid Coordinate Ocean Model (HYCOM), Springer Netherlands, Dordrecht, https://doi.org/10.1007/1-4020-4028-8_16, pp. 413–426, 2006.

Director, H. M., Raftery, A. E., and Bitz, C. M.: Probabilistic forecasting of the Arctic sea ice edge with contour modeling, Ann. Appl. Stat., 15, 711–726, https://doi.org/10.1214/20-AOAS1405, 2021.

Dirkson, A., Merryfield, W. J., and Monahan, A. H.: Calibrated Probabilistic Forecasts of Arctic Sea Ice Concentration, J. Climate, 32, 1251–1271, https://doi.org/10.1175/JCLI-D-18-0224.1, 2019.

Dirkson, A., Denis, B., Merryfield, W. J., Peterson, K. A., and Tietsche, S.: Calibration of subseasonal sea-ice forecasts using ensemble model output statistics and observational uncertainty, Q. J. Roy. Meteor. Soc., 148, 2717–2741, https://doi.org/10.1002/qj.4332, 2022.

Durán Moro, M., Sperrevik, A. K., Lavergne, T., Bertino, L., Gusdal, Y., Iversen, S. C., and Rusin, J.: Assimilation of satellite swaths versus daily means of sea ice concentration in a regional coupled ocean–sea ice model, The Cryosphere, 18, 1597–1619, https://doi.org/10.5194/tc-18-1597-2024, 2024.

Fritzner, S., Graversen, R., and Christensen, K. H.: Assessment of High-Resolution Dynamical and Machine Learning Models for Prediction of Sea Ice Concentration in a Regional Application, J. Geophys. Res.-Oceans, 125, e2020JC016277, https://doi.org/10.1029/2020JC016277, 2020.

Frnda, J., Durica, M., Rozhon, J., Vojtekova, M., Nedoma, J., and Martinek, R.: ECMWF short-term prediction accuracy improvement by deep learning, Sci. Rep.-UK, 12, 1–11, https://doi.org/10.1038/s41598-022-11936-9, 2022.

Goessling, H. F., Tietsche, S., Day, J. J., Hawkins, E., and Jung, T.: Predictability of the Arctic sea ice edge, Geophys. Res. Lett., 43, 1642–1650, https://doi.org/10.1002/2015GL067232, 2016.

Grigoryev, T., Verezemskaya, P., Krinitskiy, M., Anikin, N., Gavrikov, A., Trofimov, I., Balabin, N., Shpilman, A., Eremchenko, A., Gulev, S., Burnaev, E., and Vanovskiy, V.: Data-Driven Short-Term Daily Operational Sea Ice Regional Forecasting, Remote Sens.-Basel, 14, 5837, https://doi.org/10.3390/rs14225837, 2022.

Gunnarsson, B.: Recent ship traffic and developing shipping trends on the Northern Sea Route—Policy implications for future arctic shipping, Mar. Policy, 124, 104369, https://doi.org/10.1016/j.marpol.2020.104369, 2021.

Hackett, B., Bertino, L., Ali, A., Burud, A., and Williams, T.: Copernicus Marine Environment Monitoring Service (CMEMS) ARCTIC_ANALYSIS_FORECAST_PHYS_002_001_a Product [data set], https://data.marine.copernicus.eu/products/, last access: 25 April 2024.

Haynes, K., Lagerquist, R., McGraw, M., Musgrave, K., and Ebert-Uphoff, I.: Creating and Evaluating Uncertainty Estimates with Neural Networks for Environmental-Science Applications, Artificial Intelligence for the Earth Systems, 2, 220061, https://doi.org/10.1175/AIES-D-22-0061.1, 2023.

He, K., Zhang, X., Ren, S., and Sun, J.: Delving deep into rectifiers: Surpassing human-level performance on imagenet classification, in: Proceedings of the IEEE international conference on computer vision, 7–13 December 2015, Santiago, Chile, 1026–1034, https://doi.org/10.48550/arXiv.1502.01852, 2015.

He, K., Zhang, X., Ren, S., and Sun, J.: Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, NV, USA, 770–778, https://doi.org/10.1109/CVPR.2016.90, 2016.

Hunke, E. C. and Dukowicz, J. K.: An Elastic–Viscous–Plastic Model for Sea Ice Dynamics, J. Phys. Oceanogr., 27, 1849–1867, https://doi.org/10.1175/1520-0485(1997)027<1849:AEVPMF>2.0.CO;2, 1997.

JCOMM Expert Team on sea ice: Sea ice information services of the world, Edition 2017, Tech. Rep. WMO-No 574, World Meteorological Organization, Geneva, Switzerland, https://doi.org/10.25607/OBP-1325, 2017.

Johnson, S. J., Stockdale, T. N., Ferranti, L., Balmaseda, M. A., Molteni, F., Magnusson, L., Tietsche, S., Decremer, D., Weisheimer, A., Balsamo, G., Keeley, S. P. E., Mogensen, K., Zuo, H., and Monge-Sanz, B. M.: SEAS5: the new ECMWF seasonal forecast system, Geosci. Model Dev., 12, 1087–1117, https://doi.org/10.5194/gmd-12-1087-2019, 2019.

Keller, M. R., Piatko, C., Clemens-Sewall, M. V., Eager, R., Foster, K., Gifford, C., Rollend, D., and Sleeman, J.: Short-Term (7 Day) Beaufort Sea Ice Extent Forecasting with Deep Learning, Artificial Intelligence for the Earth Systems, 2, e220070, https://doi.org/10.1175/AIES-D-22-0070.1, 2023.

Kim, Y. J., Kim, H.-C., Han, D., Lee, S., and Im, J.: Prediction of monthly Arctic sea ice concentrations using satellite and reanalysis data based on convolutional neural networks, The Cryosphere, 14, 1083–1104, https://doi.org/10.5194/tc-14-1083-2020, 2020.

Kvanum, A. F., Palerme, C., Müller, M., Rabault, J., and Hughes, N.: Developing a deep learning forecasting system for short-term and high-resolution prediction of sea ice concentration, EGUsphere [preprint], https://doi.org/10.5194/egusphere-2023-3107, 2024.

Lavergne, T., Sørensen, A. M., Tonboe, R., and Pedersen, L. T.: CCI+ Sea Ice ECV Sea Ice Concentration Algorithm Theoretical Basis Document, Tech. rep., European Space Agency, https://climate.esa.int/media/documents/SeaIce_CCI_P1_ATBD-SIC_D2.1_Issue_3.1_signed.pdf (last access: 2 October 2023), 2021.

Liu, Q., Zhang, R., Wang, Y., Yan, H., and Hong, M.: Short-Term Daily Prediction of Sea Ice Concentration Based on Deep Learning of Gradient Loss Function, Front. Mar. Sci., 8, 736429, https://doi.org/10.3389/fmars.2021.736429, 2021.

Melsom, A., Palerme, C., and Müller, M.: Validation metrics for ice edge position forecasts, Ocean Sci., 15, 615–630, https://doi.org/10.5194/os-15-615-2019, 2019.

Müller, M., Knol-Kauffman, M., Jeuring, J., and Palerme, C.: Arctic shipping trends during hazardous weather and sea-ice conditions and the Polar Code's effectiveness, npj Ocean Sustainability, 2, 12, https://doi.org/10.1038/s44183-023-00021-x, 2023.

Oktay, O., Schlemper, J., Folgoc, L. L., Lee, M., Heinrich, M., Misawa, K., Mori, K., McDonagh, S., Hammerla, N. Y., Kainz, B., Glocker, B., and Rueckert, D.: Attention U-Net: Learning Where to Look for the Pancreas, arXiv preprint arXiv:1804.03999, https://doi.org/10.48550/arXiv.1804.03999, 2018.

Palerme, C.: Code accompanying the article “Improving short-term sea ice concentration forecasts using deep learning”, Version 1.0.0, Zenodo [code], https://doi.org/10.5281/zenodo.11071206, 2024.

Palerme, C. and Müller, M.: Calibration of sea ice drift forecasts using random forest algorithms, The Cryosphere, 15, 3989–4004, https://doi.org/10.5194/tc-15-3989-2021, 2021. a

Palerme, C., Müller, M., and Melsom, A.: An Intercomparison of Verification Scores for Evaluating the Sea Ice Edge Position in Seasonal Forecasts, Geophy. Res. Lett., 46, 4757–4763, https://doi.org/10.1029/2019GL082482, 2019. a

Ponsoni, L., Ribergaard, M. H., Nielsen-Englyst, P., Wulf, T., Buus-Hinkler, J., Kreiner, M. B., and Rasmussen, T. A. S.: Greenlandic sea ice products with a focus on an updated operational forecast system, Front. Mar. Sci., 10, 979782, https://doi.org/10.3389/fmars.2023.979782, 2023. a

Ren, Y., Li, X., and Zhang, W.: A Data-Driven Deep Learning Model for Weekly Sea Ice Concentration Prediction of the Pan-Arctic During the Melting Season, IEEE T. Geosci. Remote, 60, 1–19, https://doi.org/10.1109/TGRS.2022.3177600, 2022. a, b, c, d

Roberts, N., Ayliffe, B., Evans, G., Moseley, S., Rust, F., Sandford, C., Trzeciak, T., Abernethy, P., Beard, L., Crosswaite, N., Fitzpatrick, B., Flowerdew, J., Gale, T., Holly, L., Hopkinson, A., Hurst, K., Jackson, S., Jones, C., Mylne, K., Sampson, C., Sharpe, M., Wright, B., Backhouse, S., Baker, M., Brierley, D., Booton, A., Bysouth, C., Coulson, R., Coultas, S., Crocker, R., Harbord, R., Howard, K., Hughes, T., Mittermaier, M., Petch, J., Pillinger, T., Smart, V., Smith, E., and Worsfold, M.: IMPROVER: The New Probabilistic Postprocessing System at the Met Office, B. Am. Meteorol. Soc., 104, E680–E697, https://doi.org/10.1175/BAMS-D-21-0273.1, 2023. a

Röhrs, J., Gusdal, Y., Rikardsen, E. S. U., Durán Moro, M., Brændshøi, J., Kristensen, N. M., Fritzner, S., Wang, K., Sperrevik, A. K., Idžanović, M., Lavergne, T., Debernard, J. B., and Christensen, K. H.: Barents-2.5km v2.0: an operational data-assimilative coupled ocean and sea ice ensemble prediction model for the Barents Sea and Svalbard, Geosci. Model Dev., 16, 5401–5426, https://doi.org/10.5194/gmd-16-5401-2023, 2023. a, b, c

Ronneberger, O., Fischer, P., and Brox, T.: U-Net: Convolutional Networks for Biomedical Image Segmentation, Medical Image Computing and Computer-Assisted Intervention, in: Proceedings of the 18th Int. Conf. on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 2015, 234–241, https://doi.org/10.1007/978-3-319-24574-4_28, 2015. a, b, c, d, e

Rusin, J., Lavergne, T., Doulgeris, A. P., and Scott, K. A.: Resolution enhanced sea ice concentration: a new algorithm applied to AMSR2 microwave radiometry data, Ann. Glaciol., 65, 1–12, https://doi.org/10.1017/aog.2024.6, 2024a. a

Rusin, J., Lavergne, T., and Sørensen, A.: Pan-Arctic Sea Ice Concentration from AMSR2 using a pansharpening algorithm, Version 1, Norwegian Meteorological Institute [data set], https://thredds.met.no/thredds/catalog/cosi/AMSR2_SIC/catalog.html, last accessed: 25 April 2024. a

Sakov, P., Counillon, F., Bertino, L., Lisæter, K. A., Oke, P. R., and Korablev, A.: TOPAZ4: an ocean-sea ice data assimilation system for the North Atlantic and Arctic, Ocean Sci., 8, 633–656, https://doi.org/10.5194/os-8-633-2012, 2012. a, b, c, d

Smith, G. C., Roy, F., Reszka, M., Surcel Colan, D., He, Z., Deacu, D., Belanger, J.-M., Skachko, S., Liu, Y., Dupont, F., Lemieux, J.-F., Beaudoin, C., Tranchant, B., Drévillon, M., Garric, G., Testut, C.-E., Lellouche, J.-M., Pellerin, P., Ritchie, H., Lu, Y., Davidson, F., Buehner, M., Caya, A., and Lajoie, M.: Sea ice forecast verification in the Canadian Global Ice Ocean Prediction System, Q. J. Roy. Meteor. Soc., 142, 659–671, https://doi.org/10.1002/qj.2555, 2016. a

Vannitsem, S., Bremnes, J. B., Demaeyer, J., Evans, G. R., Flowerdew, J., Hemri, S., Lerch, S., Roberts, N., Theis, S., Atencia, A., Bouallègue, Z. B., Bhend, J., Dabernig, M., Cruz, L. D., Hieta, L., Mestre, O., Moret, L., Plenković, I. O., Schmeits, M., Taillardat, M., den Bergh, J. V., Schaeybroeck, B. V., Whan, K., and Ylhaisi, J.: Statistical Postprocessing for Weather Forecasts: Review, Challenges, and Avenues in a Big Data World, B. Am. Meteorol. Soc., 102, E681–E699, https://doi.org/10.1175/BAMS-D-19-0308.1, 2021. a

Veland, S., Wagner, P., Bailey, D., Everet, A., Goldstein, M., Hermann, R., Hjort-Larsen, T., Hovelsrud, G., Hughes, N., Kjøl, A., Li, X., Lynch, A., Müller, M., Olsen, J., Palerme, C., Pedersen, J., Rinaldo, Ø., Stephenson, S., and Storelvmo, T.: Knowledge needs in sea ice forecasting for navigation in Svalbard and the High Arctic, Svalbard Strategic Grant, Svalbard Science Forum, NF-rapport 4/2021, https://doi.org/10.13140/RG.2.2.11169.33129, 2021.

von Schuckmann, K., Le Traon, P.-Y., Smith, N., Pascual, A., Djavidnia, S., Gattuso, J.-P., Grégoire, M., Aaboe, S., Alari, V., Alexander, B. E., Alonso-Martirena, A., Aydogdu, A., Azzopardi, J., Bajo, M., Barbariol, F., Batistić, M., Behrens, A., Ben Ismail, S., Benetazzo, A., Bitetto, I., Borghini, M., Bray, L., Capet, A., Carlucci, R., Chatterjee, S., Chiggiato, J., Ciliberti, S., Cipriano, G., Clementi, E., Cochrane, P., Cossarini, G., D'Andrea, L., Davison, S., Down, E., Drago, A., Druon, J.-N., Engelhard, G., Federico, I., Garić, R., Gauci, A., Gerin, R., Geyer, G., Giesen, R., Good, S., Graham, R., Greiner, E., Gundersen, K., Hélaouët, P., Hendricks, S., Heymans, J. J., Holt, J., Hure, M., Juza, M., Kassis, D., Kellett, P., Knol-Kauffman, M., Kountouris, P., Kõuts, M., Lagemaa, P., Lavergne, T., Legeais, J.-F., Libralato, S., Lien, V. S., Lima, L., Lind, S., Liu, Y., Macías, D., Maljutenko, I., Mangin, A., Männik, A., Marinova, V., Martellucci, R., Masnadi, F., Mauri, E., Mayer, M., Menna, M., Meulders, C., Møgster, J. S., Monier, M., Mork, K. A., Müller, M., Nilsen, J. E. Ø., Notarstefano, G., Oviedo, J. L., Palerme, C., Palialexis, A., Panzeri, D., Pardo, S., Peneva, E., Pezzutto, P., Pirro, A., Platt, T., Poulain, P. M., Prieto, L., Querin, S., Rabenstein, L., Raj, R. P., Raudsepp, U., Reale, M., Renshaw, R., Ricchi, A., Ricker, R., Rikka, S., Ruiz, J., Russo, T., Sanchez, J., Santoleri, R., Sathyendranath, S., Scarcella, G., Schroeder, K., Sparnocchia, S., Spedicato, M. T., Stanev, E., Staneva, J., Stocker, A., Stoffelen, A., Teruzzi, A., Townhill, B., Uiboupin, R., Valcheva, N., Vandenbulcke, L., Vindenes, H., Vrgoč, N., Wakelin, S., and Zupa, W.: Copernicus Marine Service Ocean State Report, Issue 5, J. Oper. Oceanogr., 14, 1–185, https://doi.org/10.1080/1755876X.2021.1946240, 2021.

Wagner, P. M., Hughes, N., Bourbonnais, P., Stroeve, J., Rabenstein, L., Bhatt, U., Little, J., Wiggins, H., and Fleming, A.: Sea-ice information and forecast needs for industry maritime stakeholders, Polar Geography, 43, 160–187, https://doi.org/10.1080/1088937X.2020.1766592, 2020.

Wang, Q., Shao, Y., Song, Y., Schepen, A., Robertson, D. E., Ryu, D., and Pappenberger, F.: An evaluation of ECMWF SEAS5 seasonal climate forecasts for Australia using a new forecast calibration algorithm, Environ. Modell. Softw., 122, 104550, https://doi.org/10.1016/j.envsoft.2019.104550, 2019.

Williams, T., Korosov, A., Rampal, P., and Ólason, E.: Presentation and evaluation of the Arctic sea ice forecasting system neXtSIM-F, The Cryosphere, 15, 3207–3227, https://doi.org/10.5194/tc-15-3207-2021, 2021.

Zhao, J., Shu, Q., Li, C., Wu, X., Song, Z., and Qiao, F.: The role of bias correction on subseasonal prediction of Arctic sea ice during summer 2018, Acta Oceanol. Sin., 39, 50–59, https://doi.org/10.1007/s13131-020-1578-0, 2020.

Sea ice forecasts are operationally produced using physically based models, but these forecasts are often not accurate enough for maritime operations. In this study, we developed a statistical correction technique based on machine learning to improve the skill of short-term (up to 10 d) sea ice concentration forecasts from the TOPAZ4 prediction system. On average, this technique reduces the root mean square error of the TOPAZ4 sea ice concentration forecasts by 41 %.
