the Creative Commons Attribution 4.0 License.

Measuring the spatiotemporal variability in snow depth in subarctic environments using UASs – Part 1: Measurements, processing, and accuracy assessment

Leo-Juhani Meriö

Anton Kuzmin

Pasi Korpelainen

Pertti Ala-aho

Timo Kumpula

Bjørn Kløve

Hannu Marttila

Snow conditions in the Northern Hemisphere are rapidly changing, and information on snow depth is critical for decision-making and other societal needs. Uncrewed or unmanned aircraft systems (UASs) can offer data resolutions of a few centimeters at catchment scale and thus provide a low-cost solution to bridge the gap between sparse manual probing and low-resolution satellite data. In this study, we present a series of snow depth measurements made with different UAS platforms throughout the winter at Pallas, a Finnish subarctic site with a heterogeneous landscape. We discuss the different platforms, the methods used, the difficulties of working in the harsh northern environment, and the UAS snow depth results compared to in situ measurements. Generally, all UASs, processed with the structure from motion (SfM) photogrammetry technique, produced spatially representative estimates of snow depth in open areas after reliable georeferencing. However, the accuracies of the different UASs differed significantly when compared to manual snow depth measurements, with overall root mean square errors (RMSEs) ranging from 13.0 to 25.2 cm, depending on the UAS. Additionally, accuracy decreased when moving from an open mire area to forest-covered areas. We demonstrate the potential of low-cost UASs to efficiently map snow surface conditions, and we give some recommendations on UAS platform selection and operation in a harsh subarctic environment with variable canopy cover.

Knowledge of changes in snow accumulation, depth, and melt is crucial for nature and society in northern and alpine regions. In the Northern Hemisphere especially, snow is important to local ecology, providing shelter and protection from harsh winter conditions, supporting early-summer hydrological conditions (soil moisture, discharge, etc.), and creating a unique environment in northern and mountainous areas (Demiroglu et al., 2019; Boelman et al., 2019). Northern communities, tourism, and industry are also adapted to, and often dependent on, snow conditions, both as a winter resource (transport, leisure) and as water storage for hydropower and other needs. Snow resources are currently threatened by global warming, which will have many direct and indirect effects on northern environments (Carey et al., 2010; Bring et al., 2016). Any changes in the magnitude, timing, and variability of snowfall, accumulation patterns, and melting will alter, among other things, water availability and soil moisture (Barnett et al., 2005; Kellomäki et al., 2010), which in turn affects flood prediction and warning, hydropower generation (reservoir inflow forecasting), water management, transportation, the daily management activities of local authorities, and the tourism sector (Veijalainen et al., 2010).

Currently, snow depths are routinely monitored using manual snow course measurements (Lundberg et al., 2010; Stuefer et al., 2020), single automatic stations or networks of them (Zhang et al., 2017), or coarse-resolution satellite images (Frei et al., 2012). However, multiple studies have highlighted that measurements based on a few sampling locations do not provide a representative picture of the wider spatial snow distribution (Grünewald and Lehning, 2015), and thus reliable, spatially representative, high-resolution snow depth information is lacking. Although technologies for manual snow depth sampling exist (Sturm and Holmgren, 2018), manual measurements quickly become time-consuming and expensive over larger areas. Modern remote sensing techniques can provide a cost-effective option for more spatially and temporally comprehensive snow depth measurements.

The remote sensing of snow depth has traditionally relied on satellites or manned aircraft (Nolin, 2010; Dietz et al., 2012). Satellite-derived snow depth products can offer global coverage, but their spatial resolution is low (Frei et al., 2012). Manned aircraft (e.g., airborne laser scanning, ALS), on the other hand, can provide better resolution with regional coverage (Deems et al., 2013; Currier et al., 2019), but at comparatively high cost. Recently popularized uncrewed or unmanned aircraft systems (UASs) can offer resolutions of a few centimeters at catchment scale and thus provide a low-cost solution to bridge the gap between sparse manual probing and low-resolution satellite data.

Numerous studies in recent years have assessed the potential of UASs for snow depth mapping in a variety of locations, including alpine (Vander Jagt et al., 2015; Bühler et al., 2016; De Michele et al., 2016; Bühler et al., 2017; Adams et al., 2018; Avanzi et al., 2018; Fernandes et al., 2018; Redpath et al., 2018; Revuelto et al., 2021), alpine and prairie (Harder et al., 2016, 2020), meadow and forest (Lendzioch et al., 2016; Broxton and van Leeuwen, 2020), arctic (Cimoli et al., 2017), and freshwater lake settings (Gunn et al., 2021). All of these studies use structure from motion (SfM) photogrammetry, a low-cost survey technique developed from machine vision and traditional photogrammetry, which has become widely popular for geoscience applications such as topographic mapping (Westoby et al., 2012). The general approach when using SfM photogrammetry for snow depth mapping is to produce at least two digital surface models (DSMs), one snow-free and one snow-covered, and then subtract the snow-free model from the snow-covered one to estimate the snow depth.

The majority of studies using the UAS–SfM approach rely on a single UAS and either present results from only two surveys or focus on relatively open alpine, prairie, and/or arctic areas; only a few provide results for forested areas (e.g., Lendzioch et al., 2016; Broxton and van Leeuwen, 2020) or compare different UASs (Revuelto et al., 2021). In this study, we present a series of snow depth measurements with different UAS platforms throughout the winter in the Finnish subarctic. We discuss the different platforms, the methods used, the difficulties of working in a harsh northern environment, and the results and their accuracy compared to in situ measurements. The accompanying paper (Meriö et al., 2023) examines in more depth what the gathered data reveal about local snow accumulation and melting patterns. To our knowledge, this is the first series of snow depth measurements based on the UAS–SfM approach in a heterogeneous subarctic boreal forest landscape, and we also compare multiple UASs across variable lighting conditions and landscapes.

2.1 Study area

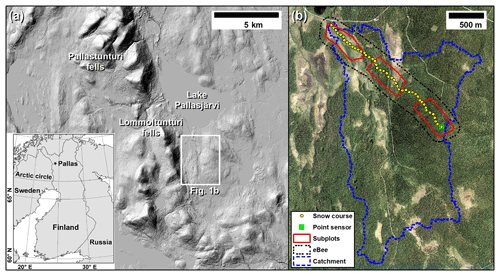

The study site (68.00° N, 24.21° E) is near the Pallas-Yllästunturi National Park in northern Finland, some 160 km north of the Arctic Circle (Fig. 1a). The site consists mostly of mountaintop tundra, lower-elevation forests, wetlands, streams, and lakes (Aurela et al., 2015). It is part of the Pallas research catchment, which hosts multiple hydrological and meteorological observation stations (Marttila et al., 2021). The measurements were made within the Pallaslompolo catchment (total area 4.87 km²; Fig. 1b), east of the Lommoltunturi and Sammaltunturi fells. The catchment ranges in altitude from 268 to 375 m a.s.l. (above sea level) and drains into a large lake, Pallasjärvi. The climate is subarctic, with persistent snow cover during the winter. The long-term (1981–2010) mean annual temperature and precipitation in the area are −1.0 °C and 521 mm, respectively (Pirinen et al., 2012). One-third of the annual precipitation falls as snow; the annual maximum snow depth, measured at the end of March, averages 104 cm, and the mean annual snow water equivalent (SWE) was 200 mm in the period 1967–2020 (Marttila et al., 2021). Snowmelt usually occurs during the second half of May or the beginning of June, and the first permanent snow typically appears in mid-October. Three subplots were chosen within the catchment to reduce the aerial mapping area to a more manageable size (Fig. 1b). Each subplot represents a different land cover type in the area: an open, treeless area with waterlogged peat soils, here referred to as the mire area (approx. 14.4 ha); a mostly coniferous-forest-covered area (approx. 15.9 ha); and a mix of the two (approx. 15.4 ha).

Figure 1. (a) Location of the study site south of Lake Pallasjärvi and east of the Lommoltunturi and Sammaltunturi fells. The location of (b) is highlighted by the white rectangle. (Hillshade courtesy of National Land Survey of Finland.) (b) Locations of the manual snow course measurements and an automatic ultrasonic snow depth sensor, as well as outlines of the subplots (mire, mixed, and forest, from northwest to southeast) and the eBee mapping area within the catchment. (Orthophoto courtesy of National Land Survey of Finland.)

2.2 Equipment

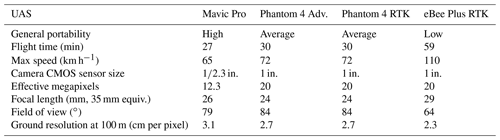

Three quadcopters were used to map the subplots: a DJI Mavic Pro, a DJI Phantom 4 Advanced, and a DJI Phantom 4 RTK (Table 1). The Phantom 4 RTK represents the recently popularized UAS type that uses two GNSS (global navigation satellite system) receivers, one operating as a base station and one as a rover. With an RTK (real-time kinematic) or PPK (post-processing kinematic) solution, positioning accuracy can reach a few centimeters, compared to the few meters obtained by the autonomously operating single-frequency GNSS receivers found in consumer-grade UASs such as the DJI Mavic Pro or the DJI Phantom 4 Advanced (e.g., Tomaštík et al., 2019). Besides the quadcopters, a fixed-wing senseFly eBee Plus RTK drone was used during the four field campaigns to collect larger datasets covering either all the subplots in a single flight or the whole catchment area (Fig. 1b).

The DJI Mavic Pro quadcopter is a comparatively small UAS, weighing less than 800 g and folding to a compact size; it is thus a very portable option for aerial mapping in areas that are difficult to traverse, especially during the winter months. The Mavic Pro has a comparatively small sensor with 12.3 megapixels and a 26 mm (35 mm equivalent) lens, as its design focuses mostly on portability.

The DJI Phantom 4 Advanced and the DJI Phantom 4 RTK quadcopters are similar in size, weigh around 1.4 kg, and both have 1 in. sensors with 20 megapixels and a 24 mm (35 mm equivalent) lens. In terms of portability, the Phantom 4 quadcopters represent average-sized UASs in this context. Compared to the Mavic Pro, the Phantom 4 quadcopters have a higher maximum speed (72 vs. 65 km h⁻¹), a longer maximum flight time (30 vs. 27 min), and arguably better wind resistance. The larger sensor of the Phantoms also gathers more light, which is generally linked to improved image quality.

The senseFly eBee Plus RTK fixed-wing UAS has a wingspan of 110 cm and a weight (including camera) of around 1.1 kg. Its large size greatly reduces its portability. However, as a fixed-wing UAS, the eBee has a much longer flight time (59 min) and higher maximum speed (110 km h⁻¹) and consequently much greater areal coverage than the quadcopters. The eBee was equipped with a senseFly S.O.D.A. camera with a 1 in. 20 megapixel sensor and a 29 mm (35 mm equivalent) lens. Because the eBee Plus RTK cannot take off and land vertically, it requires a comparatively flat and large clearing for takeoff and landing.

External ground control points (GCPs), together with an RTK GNSS receiver to measure their locations, are needed to rectify the gathered aerial imagery, especially for UASs that lack internal RTK correction. In this study, a Trimble R10 and a Topcon HiPer V RTK GNSS receiver were used to measure the GCP locations. Marking and measuring the GCPs can be burdensome for the field crew, especially during the polar night, when the time window for flights is short and the GCPs might have to be made in the dark. Furthermore, carrying the extra equipment severely reduces the portability of the UAS, although with a field crew of two or more people it is somewhat easier to divide the load or use a sled, even when moving on skis. The RTK-equipped UASs also require an RTK base station. However, because the base station is static equipment that can be set up at a suitable location, the RTK system is much less labor-intensive, although its purchase cost is many times higher.

2.3 UAS data acquisition and field data collection

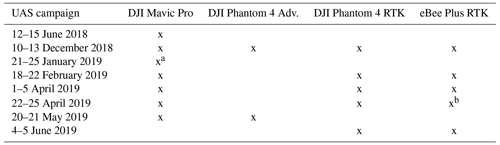

Eight UAS campaigns were carried out at the site between June 2018 and June 2019 (Table 2). The equipment used varied between campaigns, depending on the availability of UASs and field crews. Five of the eight campaigns, from December 2018 to April 2019, took place during the snow season. During the January campaign, only the mire subplot was mapped with the DJI Mavic Pro, and the data were of poor quality because the camera lens mechanism froze in very low temperatures, which reached −30 °C. During the 22–25 April campaign, the eBee Plus RTK data were lost, likely due to an electronic malfunction. The target ground sampling distances (GSDs) were 3.7 cm for the DJI Mavic Pro, 3.0 cm for the DJI Phantom 4 Advanced, 3.0 cm for the DJI Phantom 4 RTK, and 4.5 cm for the eBee Plus RTK. The target GSDs differed slightly because the UASs have different sensors and focal lengths and were flown at different heights, as the aim was to demonstrate typical use cases, e.g., capturing a subplot (or, in the case of the eBee, all subplots) with a single battery. The targets for forward and side overlap were a minimum of 80 % and 75 %, respectively.
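The GSD targets above follow from the standard pinhole relation between flight height, focal length, and sensor size. The Python sketch below illustrates that relation and the exposure spacing implied by the 80 % forward and 75 % side overlap targets. The camera values are assumptions (typical published figures for a 1 in. 20 megapixel sensor, as on the Phantom 4 models), orienting the short image side along track is an illustrative assumption, and the function names are hypothetical; this is not the flight-planning software used in the study.

```python
"""Flight-planning sketch: ground sampling distance (GSD) and exposure spacing.

Assumed camera values: 1 in. sensor 13.2 mm wide, 5472 px across,
8.8 mm focal length (typical published figures, not study parameters).
"""


def gsd_m(height_m: float, sensor_width_mm: float, focal_mm: float, image_width_px: int) -> float:
    """Ground size (m) of one pixel in a nadir image over flat terrain."""
    return (sensor_width_mm * height_m) / (focal_mm * image_width_px)


def height_for_gsd(target_gsd_m: float, sensor_width_mm: float, focal_mm: float, image_width_px: int) -> float:
    """Flight height above ground (m) that yields the target GSD."""
    return target_gsd_m * focal_mm * image_width_px / sensor_width_mm


def spacing_m(footprint_m: float, overlap: float) -> float:
    """Distance between exposures (or flight lines) for a fractional overlap."""
    return footprint_m * (1.0 - overlap)


if __name__ == "__main__":
    SENSOR_W_MM, FOCAL_MM = 13.2, 8.8   # assumed 1 in. sensor and lens
    IMG_W_PX, IMG_H_PX = 5472, 3648     # assumed 20 MP image size (3:2)

    h = height_for_gsd(0.030, SENSOR_W_MM, FOCAL_MM, IMG_W_PX)  # 3.0 cm target GSD
    gsd = gsd_m(h, SENSOR_W_MM, FOCAL_MM, IMG_W_PX)
    footprint_across = gsd * IMG_W_PX  # long image side assumed across track
    footprint_along = gsd * IMG_H_PX   # short image side assumed along track
    print(f"flight height {h:.1f} m, GSD {gsd * 100:.2f} cm")
    print(f"exposure spacing {spacing_m(footprint_along, 0.80):.1f} m (80 % forward overlap)")
    print(f"flight-line spacing {spacing_m(footprint_across, 0.75):.1f} m (75 % side overlap)")
```

Under these assumed camera values, a 3.0 cm GSD corresponds to a flight height of roughly 109 m, with exposures about 22 m apart and flight lines about 41 m apart.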

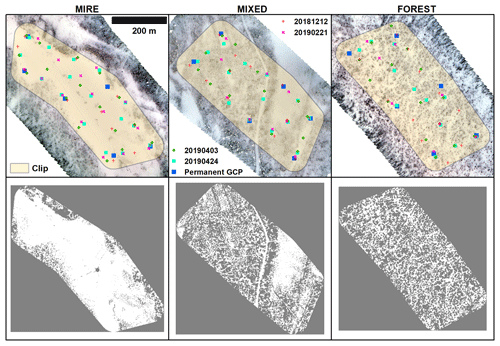

For the non-RTK UASs, GCPs were used to rectify the models. The number of GCPs varied slightly between sites and campaigns, as the low-light conditions during the polar night and the peak snow depth later in the spring limited the mobility of the field crew. During the snow-free surveys, painted plywood targets were used as GCPs, and during the snow-covered surveys, the targets were painted directly onto the snow surface. In addition to the temporary GCPs, six permanent GCPs were installed on top of ∼ 2 m wooden posts and tested in each subplot. On average, 13 temporary GCPs (8–17, median 13.5) and 6 permanent GCPs (PGCPs) were used to rectify the non-RTK data (Fig. 3) during the winter campaigns. The summer campaign of June 2018 used 18–21 GCPs per subplot. For the RTK UAS, a single GCP was used to correct the elevation bias. In addition, an average of 16 elevation checkpoints (6–38, median 14.5) were measured at random locations in each plot during every survey to obtain a rough estimate of the external accuracy of the generated DSMs relative to the RTK GNSS reference elevations.
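The text does not detail how the single GCP was applied to the RTK data. One simple reading, assumed here, is a constant vertical shift of the whole DSM so that it matches the GCP elevation. The sketch below takes the median DSM elevation in a small pixel window around the GCP to reduce sensitivity to single-pixel noise; the function name, the window size, and the constant-offset model are all assumptions.

```python
from __future__ import annotations

import numpy as np


def shift_to_gcp(dsm: np.ndarray, row: int, col: int, z_gcp: float,
                 half_window: int = 2) -> tuple[np.ndarray, float]:
    """Shift a DSM vertically so it matches one GCP elevation (hypothetical helper).

    Assumes the remaining error is a uniform vertical bias (no tilt or doming).
    The DSM elevation at the GCP is the median of a small window around
    (row, col), ignoring NaN cells. Returns (shifted DSM, applied offset).
    """
    r0, c0 = max(row - half_window, 0), max(col - half_window, 0)
    window = dsm[r0:row + half_window + 1, c0:col + half_window + 1]
    offset = z_gcp - float(np.nanmedian(window))
    return dsm + offset, offset


if __name__ == "__main__":
    # Synthetic DSM that sits 12 cm below the GCP elevation everywhere.
    dsm = np.full((50, 50), 300.00)
    corrected, dz = shift_to_gcp(dsm, 25, 25, z_gcp=300.12)
    print(f"applied offset: {dz:+.3f} m")
```

A constant shift cannot remove tilts or doming in the model, which is one reason independent checkpoints remain useful for judging the result.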

Besides the UAS data, we also used airborne laser scanning (ALS) data with a reported maximum vertical standard error of 15 cm, collected by the National Land Survey of Finland during the summer of 2018 as part of the national survey campaign. The ALS-based DEM has a ground resolution of 1 m per pixel. Snow depth reference data were collected along a snow course passing through all the subplots, consisting of 46 fixed snow stake measurement points placed an average of 52 m apart (standard deviation 6.2 m), of which 35 were within the subplots (Fig. 1b). The snow depth monitoring and the ∼ 50 m spacing between measurements follow the Finnish Environment Institute's nationwide snow survey procedure, which is designed to monitor differences in snow depth and water equivalent between land cover types (Kuusisto, 1984; Lundberg and Koivusalo, 2003). Snow depth reference data were also available from an automatic ultrasonic snow depth sensor (Campbell Scientific SR50-45H) with an accuracy of ±1 cm, located in the forest subplot at the highest elevation of the study area and operated by the Finnish Meteorological Institute.

2.4 Data processing and analysis

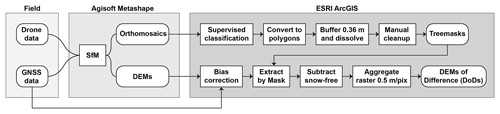

The acquired aerial data were processed using Agisoft PhotoScan/Metashape Professional (v1.4.5/v1.6), which employs the SfM technique to produce orthomosaics and DSMs. The SfM technique is described in detail by Westoby et al. (2012). To harmonize the data, each dataset was processed using the high-quality setting with moderate depth filtering. The resulting orthomosaics and DSMs were further processed in ESRI ArcGIS 10.6 (Fig. 2). Because SfM penetrates poorly beneath the canopy (Harder et al., 2020), masking was used to omit data at and in the immediate vicinity of trees (Fig. 3). The three masks (one per subplot) were generated using maximum likelihood supervised classification, a probabilistic approach derived from Bayes' theorem (Ahmad and Quegan, 2012). In the classification, each pixel is assigned to the class to which it most likely belongs, based on the training samples. The supervised classification was based on the orthomosaics from the 3 April 2019 survey, in which the tree canopies were free of snow and thus contrasted strongly with the snowy ground. Further analysis revealed that the SfM technique sometimes struggled with depth mapping of deciduous and snow-covered trees, leading to artificially high snow depths immediately next to the trees/masks. To mitigate these errors, the masks were buffered by a further 36 cm, which was found to be a good compromise between removing artificially high values and retaining data close to the trees.
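Maximum likelihood classification and mask buffering can be sketched with NumPy as follows. The classifier models each class as a multivariate Gaussian estimated from its training pixels and, assuming equal class priors, assigns each pixel to the class with the highest log-likelihood; the buffer dilates the tree mask by a disc of the chosen radius (36 cm in the text). This is an illustrative stand-in for the ArcGIS tools actually used; the function names, band values, and pixel size are hypothetical.

```python
from __future__ import annotations

import numpy as np


def train_ml(samples: dict[str, np.ndarray]) -> dict[str, tuple]:
    """Fit a Gaussian (mean, inverse covariance, log-determinant) per class.

    samples maps a class name to an (n_pixels, n_bands) array of training pixels.
    """
    params = {}
    for name, x in samples.items():
        cov = np.cov(x, rowvar=False)
        _, logdet = np.linalg.slogdet(cov)
        params[name] = (x.mean(axis=0), np.linalg.inv(cov), logdet)
    return params


def classify_ml(pixels: np.ndarray, params: dict[str, tuple]) -> np.ndarray:
    """Label each (n, n_bands) pixel with its most likely class (equal priors assumed)."""
    names = list(params)
    scores = np.empty((pixels.shape[0], len(names)))
    for j, name in enumerate(names):
        mu, inv_cov, logdet = params[name]
        d = pixels - mu
        mahalanobis_sq = np.einsum("ij,jk,ik->i", d, inv_cov, d)
        # Gaussian log-likelihood without the constant term shared by all classes.
        scores[:, j] = -0.5 * (logdet + mahalanobis_sq)
    return np.array(names)[scores.argmax(axis=1)]


def buffer_mask(mask: np.ndarray, radius_m: float, pixel_m: float) -> np.ndarray:
    """Dilate a boolean mask by a disc of radius_m (edges padded, not wrapped)."""
    rr = radius_m / pixel_m                  # radius in pixel units
    r = int(np.ceil(rr))
    h, w = mask.shape
    padded = np.pad(mask, r, constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dy * dy + dx * dx <= rr * rr:
                out |= padded[r + dy:r + dy + h, r + dx:r + dx + w]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical RGB values for canopy and snow training pixels.
    training = {
        "tree": rng.normal([0.15, 0.20, 0.15], 0.03, size=(200, 3)),
        "snow": rng.normal([0.90, 0.90, 0.95], 0.03, size=(200, 3)),
    }
    params = train_ml(training)
    print(classify_ml(np.array([[0.18, 0.22, 0.16], [0.88, 0.91, 0.93]]), params))
    tree_mask = np.zeros((40, 40), dtype=bool)
    tree_mask[18:22, 18:22] = True
    buffered = buffer_mask(tree_mask, radius_m=0.36, pixel_m=0.03)  # hypothetical 3 cm pixels
    print(tree_mask.sum(), buffered.sum())
```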

Figure 3. Above: the subplots and locations of the permanent and temporary ground control points utilized during the successful winter measurement campaigns. Below: tree masks generated for each subplot.

After manual cleanup of a few classification errors, the masks were used to remove the canopy before subtracting the snow-free (bare-ground) DSM from each snow-covered DSM, thus producing the DEMs of difference (DoDs) representing the snow depth. Finally, the DoDs (used interchangeably with snow depth maps) were aggregated to a resolution of 50 cm per pixel before further analysis. The aggregation smoothed some small-scale variability while retaining a reasonable resolution for the snow–vegetation interaction analysis discussed in the accompanying paper (Meriö et al., 2023). The 50 cm resolution was chosen as a compromise following the findings of De Michele et al. (2016), who demonstrated that the standard deviation of UAS-derived snow depth increases with increasing resolution but stabilizes at pixel sizes of ≤ 1 m.
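The masking, differencing, and aggregation steps can be illustrated on plain NumPy arrays. The sketch below assumes co-registered DSM rasters on a common grid, represents masked cells as NaN, and aggregates by averaging the valid cells in each block; with a hypothetical native pixel size of 2.5 cm, a factor of 20 gives the 50 cm resolution used here. Whether block averaging matches the ArcGIS aggregation settings actually used is an assumption, and the function names are illustrative.

```python
import numpy as np


def masked_dod(dsm_snow: np.ndarray, dsm_ground: np.ndarray, tree_mask: np.ndarray) -> np.ndarray:
    """Snow-covered minus snow-free DSM, with canopy (mask == True) cells set to NaN."""
    dod = np.asarray(dsm_snow, float) - np.asarray(dsm_ground, float)
    dod[tree_mask] = np.nan
    return dod


def aggregate_mean(grid: np.ndarray, factor: int) -> np.ndarray:
    """Block-average a raster by an integer factor, ignoring NaN cells.

    Edge rows/columns that do not fill a whole block are dropped; blocks
    with no valid cells become NaN.
    """
    h, w = grid.shape
    hb, wb = h // factor, w // factor
    blocks = grid[:hb * factor, :wb * factor].reshape(hb, factor, wb, factor)
    valid = ~np.isnan(blocks)
    total = np.where(valid, blocks, 0.0).sum(axis=(1, 3))
    count = valid.sum(axis=(1, 3))
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(count > 0, total / count, np.nan)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    ground = np.full((200, 200), 300.0)                      # hypothetical 2.5 cm grid
    snow = ground + 0.8 + rng.normal(0, 0.05, ground.shape)  # ~80 cm of snow plus noise
    trees = np.zeros(ground.shape, dtype=bool)
    trees[50:70, 50:70] = True                               # one masked tree crown
    depth_50cm = aggregate_mean(masked_dod(snow, ground, trees), factor=20)
    print(depth_50cm.shape, np.nanmean(depth_50cm).round(2))
```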

To estimate the uncertainty of the generated DSMs, the difference $\Delta z$ between the UAS and RTK GNSS elevations at each checkpoint was calculated following Eq. (1):

$$\Delta z_t = \mathrm{DSM}_{S,t} - z_{\mathrm{CP}}, \qquad (1)$$

where $t$ is the date of the survey, $\mathrm{DSM}_{S,t}$ is the snow surface elevation from the UAS survey, and $z_{\mathrm{CP}}$ is the checkpoint elevation measured with RTK GNSS. Considering error propagation when differencing two DSMs (e.g., Brasington et al., 2003), the precision of the DoDs representing the snow depth was estimated following Eq. (2):

$$\sigma_{\mathrm{DoD},t} = \sqrt{\sigma(\Delta z_t)^2 + \sigma(\Delta z_G)^2}, \qquad (2)$$

where $\sigma(\Delta z_t)$ is the standard deviation of $\Delta z$ for each winter survey and $\sigma(\Delta z_G)$ is the standard deviation of the difference between the UAS and RTK GNSS elevations for the snow-free ground DSM. Since the DSMs are not free of bias (i.e., mean error), the error propagation for the mean errors, which describes the trueness of the DoDs, was calculated following Eq. (3):

where $\mu(\Delta z_t)$ is the mean error of $\Delta z$ for each winter survey and $\mu(\Delta z_G)$ is the mean error of the difference between the UAS and RTK GNSS elevations for the snow-free ground DSM. The snow depth of each pixel, $h_{s,t}^{\mathrm{DSM}}$, was calculated following Eq. (4):

$$h_{s,t}^{\mathrm{DSM}} = \mathrm{DSM}_{S,t} - \mathrm{DSM}_G, \qquad (4)$$

where $\mathrm{DSM}_{S,t}$ is the snow surface elevation from the UAS survey and $\mathrm{DSM}_G$ is the snow-free ground elevation from the UAS or ALS survey. The difference $\Delta h_{s,t}$ between the UAS-derived snow depth and the manual snow course measurement was calculated following Eq. (5):

$$\Delta h_{s,t} = h_{s,t}^{\mathrm{DSM}} - h_{s,t}^{\mathrm{SL}}, \qquad (5)$$

where $h_{s,t}^{\mathrm{SL}}$ is the manual snow depth measurement.
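Equations (1), (2), (4), and (5) translate directly into array operations. The sketch below assumes elevations in metres and uses the sample standard deviation (ddof = 1) in Eq. (2), which the text does not specify; Eq. (3) is not reproduced. The function names and example values are hypothetical.

```python
import numpy as np


def checkpoint_residuals(dsm_at_cp, z_cp) -> np.ndarray:
    """Eq. (1): UAS DSM elevation minus RTK GNSS checkpoint elevation."""
    return np.asarray(dsm_at_cp, float) - np.asarray(z_cp, float)


def dod_precision(dz_snow, dz_ground) -> float:
    """Eq. (2): combine the checkpoint SDs of the snow and snow-free DSMs in quadrature."""
    return float(np.hypot(np.std(dz_snow, ddof=1), np.std(dz_ground, ddof=1)))


def snow_depth(dsm_snow, dsm_ground) -> np.ndarray:
    """Eq. (4): per-pixel snow depth as snow surface minus snow-free ground."""
    return np.asarray(dsm_snow, float) - np.asarray(dsm_ground, float)


def depth_error(hs_uas, hs_manual) -> np.ndarray:
    """Eq. (5): UAS-derived minus manually measured snow depth."""
    return np.asarray(hs_uas, float) - np.asarray(hs_manual, float)


if __name__ == "__main__":
    # Hypothetical checkpoint residuals (m) for one winter DSM and the snow-free DSM.
    dz_t = checkpoint_residuals([301.02, 300.97, 301.05], [301.00, 301.00, 301.00])
    dz_g = checkpoint_residuals([299.98, 300.03], [300.00, 300.00])
    print(f"DoD precision: {dod_precision(dz_t, dz_g) * 100:.1f} cm")
    hs = snow_depth([301.10, 300.95], [300.20, 300.30])
    print(depth_error(hs, [0.85, 0.70]))
```

Adding the two standard deviations in quadrature assumes that the errors of the snow-covered and snow-free DSMs are independent, which is the usual premise of this propagation rule.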

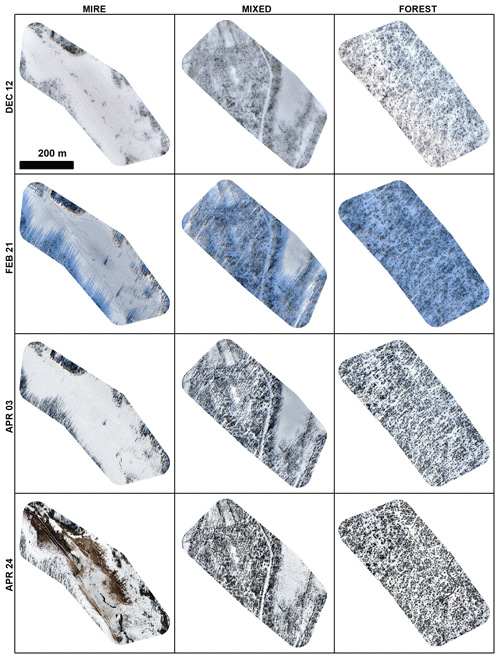

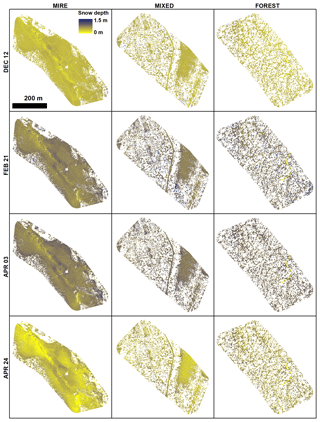

Figures 4 and 5 show the resulting orthomosaics and snow depth maps for different subplots generated from the data collected with the DJI Phantom 4 RTK on the dates of 12 December 2018 (DEC 12), 21 February 2019 (FEB 21), 3 April 2019 (APR 03), and 24 April 2019 (APR 24). Similar maps were also produced for the other UAS data when available (see Table 2).

Figure 4. Orthomosaics for different subplots produced from the DJI Phantom 4 RTK data obtained during the winter surveys.

Figure 5. Snow depth maps for different subplots produced from the DJI Phantom 4 RTK data obtained during the winter surveys.

3.1 Comparison to GNSS checkpoints

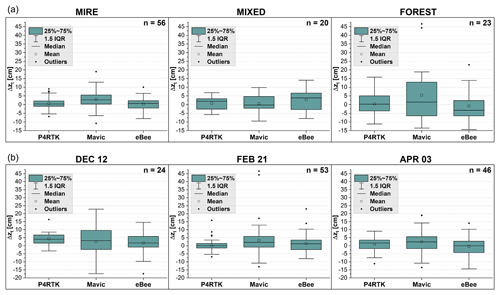

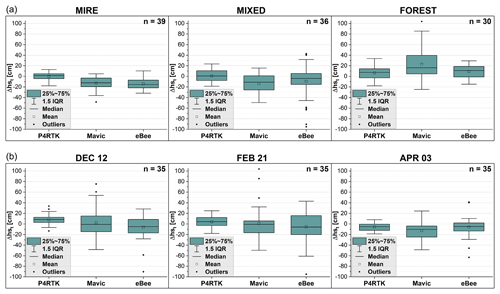

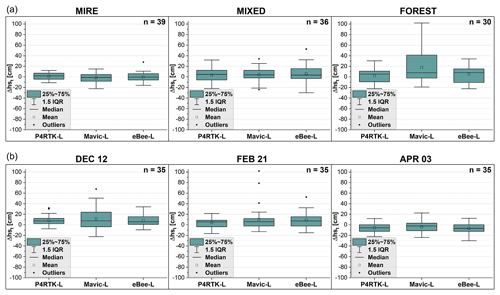

Figure 6a combines measurements from the FEB 21 and APR 03 surveys using the DJI Phantom 4 RTK (P4RTK), DJI Mavic Pro (Mavic), and eBee Plus RTK (eBee) to highlight the effect of land cover on the difference between the DEM and GNSS survey elevations, calculated following Eq. (1). Separate boxplots for each subplot and date are provided in the Supplement (see Fig. S1 in the Supplement), including results for the DJI Phantom 4 Advanced (P4A), which was used only during the DEC 12 winter survey. For all UASs, the differences between the DEM and GNSS survey elevations generally increase when moving from the mire subplot to the mixed and forest subplots. Based on Levene's test (Levene, 1960), the sample variances differ significantly between subplots at a significance level of 0.05 for each UAS. When the UASs are compared to each other, there are statistically significant differences in the mire and forest subplots, where P4RTK and eBee produce fairly similar data, but Mavic is clearly the least accurate. In each case, P4RTK has the best error statistics.
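Levene's test checks whether several groups (here, the elevation residuals of different subplots or UASs) have equal variances by running a one-way ANOVA on the absolute deviations from each group's centre. A NumPy sketch of the W statistic is given below. The text cites Levene (1960), whose original form centres on the group mean, but does not state which centring was applied; the median-centred Brown-Forsythe variant is the default of `scipy.stats.levene`. A p-value follows from the F distribution with k − 1 and N − k degrees of freedom, for example via `scipy.stats.f.sf`. The function name and example residuals are hypothetical.

```python
import numpy as np


def levene_w(*groups, center: str = "mean") -> float:
    """Levene's W statistic for equality of variances across k groups.

    center="mean" is Levene's original form; center="median" gives the
    Brown-Forsythe variant. Under H0, W follows F(k - 1, N - k).
    """
    centre_fn = np.mean if center == "mean" else np.median
    z = [np.abs(np.asarray(g, float) - centre_fn(g)) for g in groups]
    k = len(z)
    n_i = np.array([zi.size for zi in z])
    n = n_i.sum()
    zbar_i = np.array([zi.mean() for zi in z])   # group means of |deviation|
    zbar = np.concatenate(z).mean()              # grand mean of |deviation|
    between = np.sum(n_i * (zbar_i - zbar) ** 2)
    within = sum(np.sum((zi - m) ** 2) for zi, m in zip(z, zbar_i))
    return float((n - k) / (k - 1) * between / within)


if __name__ == "__main__":
    # Hypothetical checkpoint residuals (cm) for three subplots.
    mire = [1.0, -2.0, 0.5, 1.5, -1.0]
    mixed = [3.0, -4.0, 2.5, -3.5, 5.0]
    forest = [6.0, -7.5, 8.0, -5.0, 9.0]
    print(f"W = {levene_w(mire, mixed, forest):.2f}")
```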

Figure 6b combines all subplots for the DEC 12, FEB 21, and APR 03 surveys to highlight the effect of survey date (lighting conditions) on the uncertainty of the UAS measurements relative to the checkpoints. None of the UASs shows statistically significant differences in sample variances between the DEC 12 low-light polar-night survey and the FEB 21 and APR 03 surveys, although the mean absolute error (MAE) improves slightly (∼ 1–2 cm) from DEC 12 to FEB 21 for each UAS. However, when the UASs are compared to each other, P4RTK produced the most accurate data in each case, and statistically significant differences in variance can be observed in the DEC 12 and FEB 21 surveys, where P4RTK and eBee are again fairly similar, but Mavic performs poorly.

Figure 6. (a) Difference between the DEM and GNSS survey elevations for each subplot from the FEB 21 and APR 03 surveys. (b) Difference between the DEM and GNSS survey elevations for the DEC 12, FEB 21, and APR 03 surveys from all subplots. IQR signifies interquartile range.

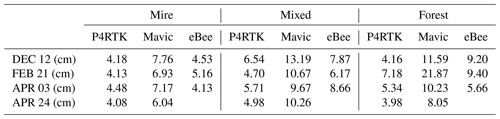

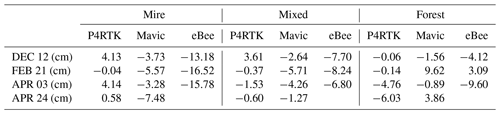

Table 3 shows the precision of the snow depth DoDs calculated following Eq. (2). With P4RTK, the precision is most stable in the mire subplot (< 4.5 cm), whereas it varies slightly more in the mixed and forest subplots, ranging from 3.98 to 7.18 cm. With Mavic, the precision is in each case poorer than with P4RTK or eBee and tends to decrease from the mire subplot to the mixed and forest subplots. With eBee, the precision in the mire subplot is very similar to that of P4RTK and, in the APR 03 dataset, slightly better. The eBee precision also generally decreases towards the mixed and forest subplots, the exception being the APR 03 dataset. Table 4 shows the trueness of the snow depth DoDs calculated following Eq. (3). Again, P4RTK provides the best trueness overall, although in a few instances Mavic has better trueness. With eBee, the trueness suffers from large biases of ∼ 16.6 and ∼ 10.0 cm in the snow-free bare-ground DSMs of the mire and mixed subplots, respectively (see Table S1 in the Supplement).

3.2 Comparison to manual snow course measurements

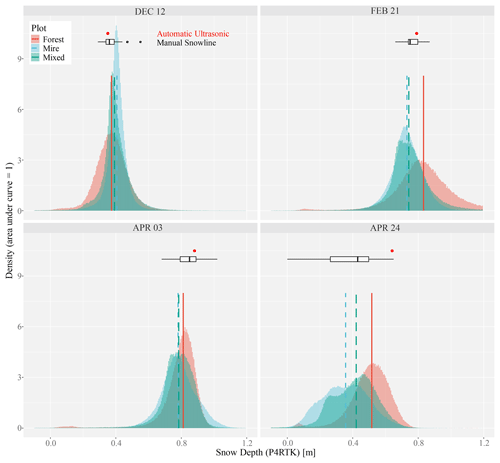

The manual snow course measurements gave mean snow depths and standard deviations of 36.8 and 4.8 cm for the DEC 12 survey, a mean of 76.5 cm for the FEB 21 survey, 86.9 and 9.1 cm for the APR 03 survey, and 35.8 and 20.6 cm for the APR 24 survey. As the standard deviations indicate, snow depth variation across the landscape generally increased as winter progressed. During the APR 24 survey, the variation was high because the spring melt and resulting flooding were already especially pronounced in the mire area; excluding the mire area, the mean snow depth and standard deviation for APR 24 were 46.2 and 10.5 cm. Figure 7 shows examples of P4RTK-derived snow depth distributions for the different surveys, along with the manual snow course measurements and the single automatic ultrasonic snow depth measurements. The histograms generally resemble long-tailed normal distributions, with the largest deviations from normality in the mire and mixed subplots during the APR 24 spring melt.

Figure 7. Snow depth histograms for different subplots based on P4RTK data during the DEC 12, FEB 21, APR 03, and APR 24 surveys. Vertical lines indicate the median snow depth for each subplot. The boxplots indicate the results from manual snow course measurements, and the red dot indicates data from an automatic ultrasonic snow depth sensor located in the forest subplot.

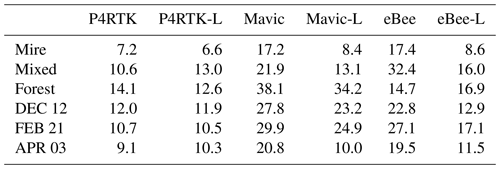

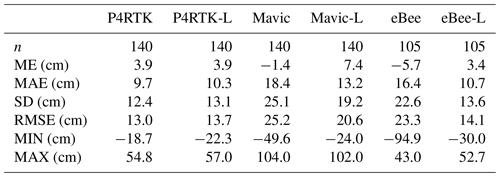

Table 5 shows the statistics for the differences between the UAS-derived snow depths and the manual snow course measurements, calculated following Eq. (5). The data are provided for snow depth DoDs using both the UAS-derived and the ALS-derived snow-free DEMs. Note that the values do not account for any potential systematic or random errors in the manual snow course measurements. The P4RTK snow depths are of practically equal accuracy regardless of whether UAS or ALS data are used as the snow-free bare-ground model. With Mavic and eBee, using the ALS bare-ground model generally produced more accurate results. Mavic and eBee tend to produce more outlier-magnitude errors than P4RTK, which clearly generates the most accurate data of the three, especially when UAS data are used as the snow-free model.
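The summary statistics reported in Tables 5 and 6 can be computed from the Eq. (5) differences as in the following sketch. It assumes that snow course points falling inside tree masks are stored as NaN and dropped, and it uses the sample standard deviation (ddof = 1); both choices, and the function name, are assumptions rather than details stated in the text.

```python
import numpy as np


def error_stats(uas_depth, manual_depth) -> dict[str, float]:
    """ME, MAE, SD, RMSE, MIN and MAX of UAS minus manual snow depth (input units)."""
    d = np.asarray(uas_depth, float) - np.asarray(manual_depth, float)
    d = d[~np.isnan(d)]  # drop points without a UAS value (e.g., masked pixels)
    return {
        "ME": float(d.mean()),
        "MAE": float(np.abs(d).mean()),
        "SD": float(d.std(ddof=1)),
        "RMSE": float(np.sqrt(np.mean(d ** 2))),
        "MIN": float(d.min()),
        "MAX": float(d.max()),
    }


if __name__ == "__main__":
    # Hypothetical UAS and manual snow depths (cm) at five snow stakes.
    uas = [88.0, 92.5, np.nan, 79.0, 101.0]
    manual = [85.0, 90.0, 87.0, 84.0, 95.0]
    for name, value in error_stats(uas, manual).items():
        print(f"{name}: {value:.1f} cm")
```

Note that RMSE combines the bias (ME) and the spread (SD), so a UAS with a large systematic offset can have a high RMSE even when its SD is small.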

Table 5. The difference between UAS-derived snow depths and manual snow course measurements for the combined winter dataset. Mean error (ME), mean absolute error (MAE), standard deviation (SD), root mean square error (RMSE), minimum error (MIN), and maximum error (MAX) are provided for snow depth DoDs utilizing the UAS-derived snow-free DEM and the ALS-derived snow-free DEM (indicated by the -L suffix).

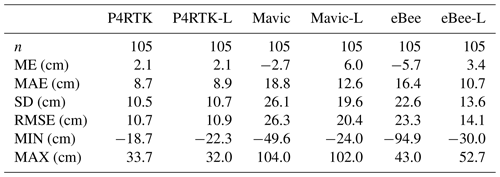

Excluding outliers, the greatest differences between the snow course and UAS-derived snow depths were observed in the mire subplot during the APR 24 survey. These differences are most probably related to the spring melt and flooding that were pronounced in the mire subplot (see Fig. 4), which left some of the snow course points under a mixture of muddy water and ice. In the manual snow course survey, these points were recorded as having zero snow depth. However, the UAS approach, based on SfM photogrammetry and DEM differencing, produced average snow depths of 23–35.6 cm at these points. Table 6 shows the statistics for the differences between the UAS-derived snow depths and the manual snow course measurements when the APR 24 survey data are removed from the dataset, i.e., when all UASs have an equal number of data points. Removing the APR 24 data, including the spring melt data points from the mire subplot, further demonstrates the better accuracy of P4RTK compared to the other UASs.

Table 6. Difference between UAS-derived snow depths and manual snow course measurements for the DEC 12–APR 03 dataset. Mean error (ME), mean absolute error (MAE), standard deviation (SD), root mean square error (RMSE), minimum error (MIN), and maximum error (MAX) are provided for snow depth DoDs utilizing the UAS-derived snow-free DEM and the ALS-derived snow-free DEM (indicated by the -L suffix).

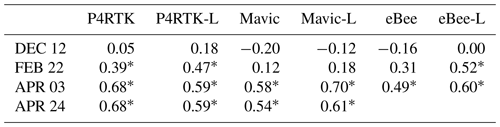

In the early snow season, when the snow was shallow, no clear correlation was observed on individual subplots/dates between the manual snow depth measurements and the UAS-derived snow depth pixels at the locations of the manual measurements (Table 7). This is likely because the snow depth was quite uniform in early winter, so its small variations are not captured well given the inherent random errors of the UAS-based snow depth measurements. The correlation clearly increases as the winter progresses and snow depths and/or local snow depth variability increase. If the flooded mire subplot is excluded from the APR 24 data, the correlation coefficient for P4RTK is as high as 0.86. When all subplots from different field trips were combined, statistically significant Pearson correlations were observed for each UAS at a significance level of 0.05. The correlations between the UAS and field measurements were 0.89 for P4RTK, 0.64 for Mavic, and 0.60 for eBee when UAS data were used as the snow-free bare-ground model, and 0.87 for P4RTK, 0.74 for Mavic, and 0.81 for eBee when ALS data were used as the snow-free model.

Table 7. Correlation between UAS-derived snow depths and manual snow course measurements. Pearson correlation coefficients are provided for all surveys utilizing the UAS-derived snow-free DEM and the ALS-derived snow-free DEM (indicated by the -L suffix).

* Statistically significant at the p<0.05 level.

Figure 8a combines measurements from the DEC 12, FEB 21, and APR 03 surveys to highlight the effect of land cover, and Fig. 8b combines all subplots to highlight the effect of survey date on the difference between UAS-derived snow depths and manual snow course measurements when UAS data are used as the snow-free bare-ground model. Figure 9a and b provide corresponding data for when ALS data are used as the snow-free model. Separate boxplots for each subplot and date are provided in the Supplement (Fig. S3).

When UAS data are used as the snow-free model, there are statistically significant differences in sample variances with respect to land cover (Fig. 8a) for each UAS, but no significant differences with respect to survey date (Fig. 8b). The trend observed with the GNSS checkpoints is also seen here, with accuracy decreasing when moving from the mire to the mixed and forest subplots. When the UASs are compared to each other, there are statistically significant differences for each subplot and survey date, with P4RTK always producing the most accurate data and eBee the second most accurate, except in the mixed subplot, where a large eBee bias was observed when comparing the DEM and DoD data to the GNSS checkpoints (Table 4).

Figure 8. (a) Difference between the snow depth DoDs and manual snow course measurements for each subplot from the DEC 12, FEB 21, and APR 03 surveys utilizing the UAS-derived snow-free DEM. (b) Difference between the snow depth DoDs and manual snow course measurements for the DEC 12, FEB 21, and APR 03 surveys from all subplots utilizing the UAS-derived snow-free DSM.

When ALS data are used as the snow-free model, there are likewise significant differences in sample variances with respect to land cover for each UAS (Fig. 9a), and also with respect to survey date for Mavic and eBee (Fig. 9b). Again, accuracy decreases from the mire to the mixed–forest subplot. With respect to survey date, Mavic performed poorly during the DEC 12 and FEB 21 surveys, and eBee performed poorly during the FEB 21 survey. All UASs show a noticeable positive bias during the DEC 12 survey and a negative bias during the APR 03 survey. To a degree, these biases are also seen in the comparison with checkpoints, especially for the DEC 12 survey, when all UASs tended to overestimate the snow surface elevation. Between the UASs, statistically significant differences in variance are observed only for the forest subplot and the DEC 12 survey; in both cases, Mavic clearly produces the least accurate data. In general, using ALS data as the snow-free model clearly benefits Mavic and eBee in all situations, whereas it makes no practical difference for P4RTK, which again produces the most accurate data in each case. With the ALS model, however, the accuracies of eBee and Mavic are generally much closer to that of P4RTK. Table 8 lists the RMSEs for the data displayed in Figs. 8 and 9 to allow comparison with the existing literature, which is discussed in the next section.
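Table 8 summarizes accuracy as RMSE, while the text above discusses bias and spread separately. A minimal sketch of how these statistics relate, assuming paired UAS and manual depths at the same points: the mean difference (bias), mean absolute error, RMSE, and standard deviation of the differences. With the population standard deviation, RMSE² = bias² + SD², which shows how a systematic offset such as the positive DEC 12 bias inflates the RMSE even when the random spread is unchanged. The depths are hypothetical.

```python
import math


def accuracy_metrics(uas_depths, manual_depths):
    """Bias, MAE, RMSE and SD of UAS-minus-manual snow depth differences."""
    if not uas_depths or len(uas_depths) != len(manual_depths):
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [u - m for u, m in zip(uas_depths, manual_depths)]
    n = len(diffs)
    bias = sum(diffs) / n
    mae = sum(abs(d) for d in diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    # Population SD, so that rmse**2 == bias**2 + sd**2 (up to rounding)
    sd = math.sqrt(sum((d - bias) ** 2 for d in diffs) / n)
    return {"bias": bias, "mae": mae, "rmse": rmse, "sd": sd}


# Hypothetical paired depths (cm)
metrics = accuracy_metrics([53, 16, 41, 70], [50, 20, 35, 72])
print({k: round(v, 2) for k, v in metrics.items()})
```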

Figure 9. (a) Difference between the snow depth DoDs and manual snow course measurements for each subplot from the DEC 12, FEB 21, and APR 03 surveys utilizing the ALS-derived snow-free DEM. (b) Difference between the snow depth DoDs and manual snow course measurements for the DEC 12, FEB 21, and APR 03 surveys from all subplots utilizing the ALS-derived snow-free DSM.

4.1 Accuracy of UAS-based snow depth measurements for different platforms

When considering the SfM approach and the resulting model accuracy for detecting snow depth, the biggest differences in UAS specifications and flight parameters in this study were in the camera sensors and focal lengths, as well as in the flight height and georeferencing method used. The flight heights had to be optimized so that individual multicopter missions could be flown in 15–20 min, to account for reduced battery performance in cold weather. This resulted in slight differences in ground sample distance (GSD) between the UASs. By far the greatest differences, however, were in the georeferencing methods: Mavic (and P4A) relied solely on GCPs, whereas P4RTK and eBee relied primarily on RTK correction to ensure high positional accuracy, with a single GCP used to mitigate possible elevation bias.
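The link between flight height, camera geometry, and GSD follows the standard pinhole relation GSD = (sensor width × flight height) / (focal length × image width in pixels). A small sketch with illustrative camera values (a 13.2 mm wide 1-inch sensor, 8.8 mm focal length, and 5472 px image width, typical of 20 MP 1-inch cameras); these are assumptions for illustration, not the flight parameters used in this study.

```python
def gsd_m(sensor_width_mm, focal_length_mm, image_width_px, height_m):
    """Ground sample distance (m/pixel) for a nadir image over flat terrain.

    height_m is the height above the imaged surface (here, the snow surface).
    """
    return sensor_width_mm * height_m / (focal_length_mm * image_width_px)


def height_for_gsd(sensor_width_mm, focal_length_mm, image_width_px, target_gsd_m):
    """Flight height (m) that yields target_gsd_m; the inverse of gsd_m."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


# Illustrative 1-inch, 20 MP camera (assumed values)
g = gsd_m(13.2, 8.8, 5472, 100.0)
h = height_for_gsd(13.2, 8.8, 5472, 0.02)
print(f"GSD at 100 m: {g * 100:.2f} cm/px; height for 2 cm GSD: {h:.0f} m")
```

Because GSD scales linearly with height, raising the flight height to shorten a mission trades resolution directly for coverage.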

The accuracy of GCP-based georeferencing and the suitable number of GCPs have been discussed by several authors (Tonkin and Midgley, 2016; Martínez-Carricondo et al., 2018; Sanz-Ablanedo et al., 2018; Yu et al., 2020). Such assessments are generally made in open areas, where planning and distributing the GCP network is relatively easy. In complex, forested environments with thick snow cover, distributing GCPs is generally more challenging because of limited mobility, time constraints, and a lack of open areas with an unobstructed view of the sky. Nevertheless, such studies can serve as a baseline for assessing the quality of GCP placement in this study. Tonkin and Midgley (2016) highlighted the importance of a uniform GCP distribution for high data accuracy, although they also noted that additional GCPs yield diminishing returns. Sanz-Ablanedo et al. (2018) demonstrated that a planimetric RMSE of approximately ±1 GSD was achieved with approx. 2.5–3 GCPs per 100 photos. Vertical accuracy improved towards 1.5× GSD as more GCPs were used, with the maximum in their tests reached at 4 GCPs per 100 photos. Martínez-Carricondo et al. (2018) demonstrated that, with a stratified GCP distribution, vertical accuracy improved clearly when moving from 0.25 to 1 GCP per hectare (RMSE from 30.8 to 5.2 cm), whereas moving from 1 to 2 GCPs per hectare (RMSE 4.3 cm) yielded diminishing returns. Yu et al. (2020) argued that for survey areas of 7–39 ha, a minimum of 6 GCPs was required for an accuracy of 10 cm and more than 12 GCPs were required for optimal results.
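The density guidelines above can be checked mechanically for a given survey. The sketch below computes GCPs per hectare and per 100 photos and compares them with the figures quoted from Sanz-Ablanedo et al. (2018), Martínez-Carricondo et al. (2018), and Yu et al. (2020); the flag names and the translation of each guideline into a threshold are simplifications for illustration. The usage example takes the averages reported for this study later in this section (13 GCPs, plus 6 PGCPs, over subplots of roughly 15 ha); the photo count of 255 is a hypothetical value chosen to be consistent with the reported 5.1 GCPs per 100 photos.

```python
def gcp_density_check(n_gcps, area_ha, n_photos):
    """GCP densities and simple flags against guideline values from the literature."""
    per_ha = n_gcps / area_ha
    per_100_photos = 100.0 * n_gcps / n_photos
    return {
        "per_ha": per_ha,
        "per_100_photos": per_100_photos,
        # Sanz-Ablanedo et al. (2018): ~2.5-3 GCPs per 100 photos gave planimetric RMSE ~1 GSD
        "sanz_ablanedo_planimetric": per_100_photos >= 2.5,
        # Martinez-Carricondo et al. (2018): results quoted span 0.25-2 GCPs/ha,
        # with diminishing returns above ~1 GCP/ha
        "martinez_carricondo_range": 0.25 <= per_ha <= 2.0,
        "martinez_carricondo_diminishing_returns": per_ha >= 1.0,
        # Yu et al. (2020), 7-39 ha areas: >= 6 GCPs for 10 cm, > 12 for optimal results
        "yu_minimum": n_gcps >= 6,
        "yu_optimal": n_gcps > 12,
    }


gcps_only = gcp_density_check(13, 15.0, 255)        # hypothetical photo count
with_pgcps = gcp_density_check(13 + 6, 15.0, 255)   # GCPs plus the 6 PGCPs
```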

In recent years, multiple studies have also compared the DSM accuracies produced by GCP georeferencing with those produced through RTK/PPK solutions (Benassi et al., 2017; Forlani et al., 2018; Bolkas, 2019; Padró et al., 2019; Tomaštík et al., 2019; Zhang et al., 2019). Tomaštík et al. (2019) compared the PPK approach with georeferencing using 4 and 9 GCPs under two different canopy conditions (study area approx. 270 ha) and concluded that the PPK approach offered better or equal accuracy and, unlike GCP georeferencing, was not influenced by seasonal variation in vegetation. Zhang et al. (2019) compared PPK with georeferencing using 8 GCPs on cultivated land (study area approx. 1.7 ha) before and after plowing and concluded that a PPK solution produces the same accuracy as the GCP approach, but that a single GCP is necessary to correct possible vertical bias. Forlani et al. (2018) obtained similar results when comparing the RTK approach with georeferencing using 12 GCPs in an urban area (approx. 18 ha) with buildings, roads, car parks, and meadows, showing that RTK can offer similar accuracy when at least 1 GCP is used to correct vertical bias.
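In practice, the single GCP used with RTK/PPK data is normally included in the SfM bundle adjustment. As a simplified illustration of what it corrects, the sketch below estimates a constant vertical offset from one GCP (model elevation minus surveyed elevation) and removes it from a DSM. This post hoc shift is an assumption made for clarity, not the processing used in the cited studies, and a single point can only remove a constant offset, not tilts or other orientation errors. The elevations are hypothetical.

```python
def vertical_offset(model_z, surveyed_z):
    """Vertical bias at a GCP: model elevation minus GNSS-surveyed elevation (m)."""
    return model_z - surveyed_z


def remove_vertical_offset(dsm, offset, nodata=None):
    """Subtract a constant vertical offset from every valid DSM cell."""
    return [[v if v == nodata else v - offset for v in row] for row in dsm]


# Hypothetical values: the RTK model sits 0.12 m above the surveyed GCP
offset = vertical_offset(245.37, 245.25)
corrected = remove_vertical_offset([[245.50, None], [246.02, 245.81]], offset)
```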

The three subplots in this study ranged from approx. 14.4 to 15.9 ha, and the average number of GCPs was 13 per subplot (8–17 depending on the survey), corresponding to an average of 5.1 GCPs per 100 photos and 0.87 GCP per hectare, or 7.38 GCPs per 100 photos and 1.27 GCPs per hectare when the 6 PGCPs are also included. Thus, the number of GCPs used was within the range suggested by Sanz-Ablanedo et al. (2018), Martínez-Carricondo et al. (2018), and Yu et al. (2020). Furthermore, there was no significant Kendall's rank correlation between the number of GCPs used and the accuracy (e.g., standard deviation or mean absolute error) of snow depth measurements with Mavic, which relied solely on GCP georeferencing. Nevertheless, in terms of accuracy, P4RTK with a single GCP clearly outperformed Mavic with all available GCPs and PGCPs, although it should be noted that Mavic has a smaller sensor and had a slightly lower (∼ 0.7 cm) GSD. More surprisingly, the accuracy of the P4A used during the DEC 12 surveys was clearly worse than that of P4RTK and more in line with the Mavic data (see Supplement), although the two Phantoms have the same sensor size and focal length and had practically the same GSD. This might indicate that RTK-supported data acquisition outperforms the traditional GCP-based method in these conditions, even when only a single GCP is used with the RTK. This is somewhat in line with the results of Tomaštík et al. (2019), who reported that, unlike the GCP georeferencing approach, the PPK approach was not influenced by seasonal variation in vegetation. However, it should also be noted that the DEC 12 measurements were made during low-light polar-night conditions, in which even a comparably short time difference between subsequent flights could significantly affect the amount of available light. The eBee data show a clear negative bias in the mire subplot and, on some occasions, a positive bias in the forest subplot (see Fig. S2 in the Supplement), possibly indicating small orientation errors in the datasets acquired for the whole catchment. Thus, for large datasets, a single GCP might not be sufficient for RTK-equipped UASs. A recent study by Rauhala (2023) highlighted that a UAS survey of a 1 km² area benefitted significantly from the use of multiple GCPs even when PPK correction was applied.
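The check between GCP count and accuracy mentioned above relies on Kendall's rank correlation, which only considers whether pairs of surveys are ordered the same way on both variables. Below is a dependency-free sketch of Kendall's tau-b, which handles ties, with a large-sample normal approximation for the two-sided p-value. The approximation ignores the tie correction to the variance and is rough for small n, so an exact or library implementation (e.g., `scipy.stats.kendalltau`) is preferable in practice. The GCP counts and error values are hypothetical.

```python
import math


def kendall_tau_b(x, y):
    """Kendall's tau-b rank correlation, O(n^2); adequate for a few dozen surveys."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    concordant = discordant = untied_x = untied_y = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = (x[i] > x[j]) - (x[i] < x[j])  # sign of the x difference
            dy = (y[i] > y[j]) - (y[i] < y[j])  # sign of the y difference
            untied_x += dx != 0  # pairs not tied in x
            untied_y += dy != 0  # pairs not tied in y
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    if untied_x == 0 or untied_y == 0:
        raise ValueError("a variable is constant; tau is undefined")
    return (concordant - discordant) / math.sqrt(untied_x * untied_y)


def kendall_p_normal(tau, n):
    """Two-sided p-value from the normal approximation (no tie correction)."""
    z = 3.0 * tau * math.sqrt(n * (n - 1)) / math.sqrt(2.0 * (2 * n + 5))
    return math.erfc(abs(z) / math.sqrt(2.0))


# Hypothetical: number of GCPs per survey vs. SD of snow depth error (cm)
n_gcps = [8, 11, 13, 14, 17, 12]
sd_cm = [21.0, 18.5, 24.0, 19.0, 22.5, 20.0]
tau = kendall_tau_b(n_gcps, sd_cm)
p = kendall_p_normal(tau, len(n_gcps))
```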

Processing the non-RTK data with only PGCPs did not provide sufficiently accurate data, as broad-scale systematic errors were observed with a pattern sometimes referred to as “bowing” or “doming”, which can affect SfM-processed nadir-only imagery (James and Robson, 2014). Using GCPs alone, on the other hand, resulted in slightly lower vertical accuracy than using both GCPs and PGCPs. The PGCPs would have been particularly helpful for remedying potential vertical or horizontal offsets between different models through further co-registration using, for example, an iterative closest point (ICP) algorithm, as they provide comparably stable control points regardless of snow depth. To be practical in the RTK–UAS workflow, the PGCPs would have to be shaped to discourage snow from accumulating on top of them, removing the need to clear accumulated snow manually.
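To illustrate the co-registration idea, the sketch below is a deliberately simplified, translation-only variant of ICP. It repeatedly pairs each point of the model to be aligned with its nearest neighbour in a reference point set (for example, points on stable features such as PGCPs) and shifts the model by the mean residual. Full ICP implementations also estimate rotation (typically with an SVD-based rigid fit) and use spatial indices such as k-d trees; both are omitted here, and the brute-force neighbour search is a simplification. The coordinates are hypothetical.

```python
import math


def nearest(point, cloud):
    """Brute-force nearest neighbour of point in cloud (fine for small point sets)."""
    return min(cloud, key=lambda q: sum((a - b) ** 2 for a, b in zip(point, q)))


def icp_translation(source, target, max_iter=50, tol=1e-9):
    """Translation [dx, dy, dz] that moves source onto target (translation-only ICP)."""
    shift = [0.0, 0.0, 0.0]
    for _ in range(max_iter):
        moved = [[p[k] + shift[k] for k in range(3)] for p in source]
        matches = [nearest(m, target) for m in moved]
        # The mean residual of matched pairs is the least-squares translation update
        step = [sum(q[k] - m[k] for m, q in zip(moved, matches)) / len(moved) for k in range(3)]
        shift = [s + d for s, d in zip(shift, step)]
        if math.sqrt(sum(d * d for d in step)) < tol:
            break
    return shift


# Hypothetical stable reference points (m) and a model offset by (-0.3, +0.2, -0.15)
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (10.0, 10.0, 5.0)]
model = [(x - 0.3, y + 0.2, z - 0.15) for x, y, z in reference]
print(icp_translation(model, reference))
```

Translation-only alignment is adequate when the residual misfit is mostly an offset; the bowing or doming mentioned above would need a non-rigid or surface-based correction instead.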

Recently, Revuelto et al. (2021) compared different UASs for snow depth mapping, including two affordable multicopters (Parrot ANAFI and DJI Mavic Pro 2) and a senseFly eBee Plus fixed-wing UAS, which was also used in this study. They concluded that, under the same illumination conditions, all the tested platforms provide equivalent snow depth products in terms of accuracy. However, they noted that all the snow depth maps used the same snow-free point cloud (acquired by the eBee Plus) and thus are not fully independent. In our case, statistically significant differences were observed between the UASs in each subplot and survey date when independent UAS-derived snow-free models were used. When the non-independent ALS-derived snow-free model was used, however, significant differences were observed only in the forest subplot or during the low-light DEC 12 conditions. This clearly highlights the importance of an accurate snow-free model and shows how the snow-free model can become a bottleneck for snow depth map accuracy when operating certain UASs (e.g., eBee or Mavic). It might be beneficial to acquire a very accurate snow-free model with lidar as a baseline for snow depth mapping, especially in a complex, forested landscape. Another option would be to make an extra effort to acquire a very high-resolution, high-accuracy snow-free model with UAS–SfM using professional UASs, even if the winter measurements are performed with a more portable platform. Especially in northern locations, summer offers very long daily aerial survey windows because the Sun does not set, and fieldwork is overall less demanding.
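Why the snow-free model can become the bottleneck follows from simple error propagation. If the snow-on and snow-free surfaces have vertical errors with standard deviations σ_on and σ_free and correlation ρ, the snow depth error has standard deviation √(σ_on² + σ_free² − 2ρσ_onσ_free). With independent errors, a minimum level of detection is often taken as t times this value (t ≈ 1.96 for roughly 95 % confidence). The same algebra explains the caveat about shared snow-free models: when two UAS snow depth maps use one snow-free model, its error cancels in their difference, which makes the platforms look more alike. The σ values below are hypothetical.

```python
import math


def depth_error_sd(sd_on, sd_free, rho=0.0):
    """SD of (snow-on minus snow-free) given the two surface error SDs and their correlation."""
    return math.sqrt(sd_on ** 2 + sd_free ** 2 - 2.0 * rho * sd_on * sd_free)


def level_of_detection(sd_on, sd_free, t=1.96):
    """Minimum detectable snow depth, assuming independent surface errors."""
    return t * depth_error_sd(sd_on, sd_free)


# Hypothetical vertical error SDs (cm): one snow-on survey, two snow-free models
for label, sd_free in [("UAS snow-free", 10.0), ("ALS snow-free", 5.0)]:
    sd = depth_error_sd(8.0, sd_free)
    print(f"{label}: depth SD {sd:.1f} cm, LoD {level_of_detection(8.0, sd_free):.1f} cm")
```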

Revuelto et al. (2021) also noted that under challenging lighting (overcast sky), all the UASs failed to properly retrieve the snow surface. Diffuse lighting during cloudy conditions and homogeneous snow cover, especially immediately after fresh snowfall, result in low contrast that can cause gaps and large outliers in the generated point clouds (Harder et al., 2016; Bühler et al., 2017). Some authors have also noted that direct sunlight and, for example, patchy snow cover can lead to similar issues due to overexposed pixels, especially when relying on automatically adjusted exposure (Harder et al., 2016). In our case, surprisingly, there were no statistically significant differences for any UAS between the low-light conditions in December and the sunny conditions in February and April when independent UAS-derived snow-free models were used, although a slight increase in accuracy is seen from the DEC 12 to the FEB 21 survey. This could be explained by the polar twilight (civil twilight) providing enough directional light around solar noon in clear-sky conditions to create sufficient contrast. Also, the shallow, less spatially variable snow cover of early winter results in a feature-rich snow surface owing to the natural variability in forest floor topography and vegetation. In addition, the flight speed and camera parameters were adjusted to allow a lower shutter speed with only a slight increase in ISO value (max two stops, to ISO400) to keep image noise tolerable.
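The trade-off between flight speed, shutter speed, and ISO described above can be quantified: forward-motion blur in pixels is ground speed × exposure time / GSD, and each doubling of ISO is one photographic stop. A small sketch, assuming a tolerated blur of half a pixel and a base ISO of 100 (consistent with two stops reaching ISO400, but not stated in the text); the speeds and GSD are hypothetical.

```python
import math


def max_exposure_s(ground_speed_ms, gsd_m, max_blur_px=0.5):
    """Longest exposure (s) keeping forward-motion blur below max_blur_px pixels."""
    return max_blur_px * gsd_m / ground_speed_ms


def iso_stops(iso, base_iso=100):
    """Photographic stops of gain above base_iso (each stop doubles the ISO)."""
    return math.log2(iso / base_iso)


# Hypothetical 2 cm GSD: halving the flight speed doubles the allowable exposure
for speed in (5.0, 2.5):
    t = max_exposure_s(speed, 0.02)
    print(f"{speed} m/s -> at most 1/{round(1 / t)} s")
print(f"ISO400 is {iso_stops(400):.0f} stops above ISO100")
```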

However, regardless of the snow-free model used, accuracy depended more on land cover type than on light conditions. This seems to be the general trend in other studies using a UAS–SfM approach for snow depth measurements, although most studies report accuracy only for open areas or do not distinguish between vegetation types, with reported RMSEs varying between ∼ 6 and 58 cm depending on the study (Vander Jagt et al., 2015; De Michele et al., 2016; Harder et al., 2016; Bühler et al., 2017; Cimoli et al., 2017; Adams et al., 2018; Avanzi et al., 2018; Revuelto et al., 2021). Bühler et al. (2016) report an RMSE of 7 cm for areas with short grass and 30 cm for areas with bushes and/or high grass, while Broxton and van Leeuwen (2020) report an RMSE of ∼ 10 cm in a sparsely forested area and ∼ 10–20 cm in a densely forested area. Harder et al. (2020) used the UAS–SfM approach to map snow depths at study sites classified by vegetation height into open (< 0.5 m), shrub-covered, and tree-covered (> 2 m) areas, with two sites of each type. They report an RMSE of 10–30 cm in open areas, 13–19 cm in shrub areas, and 20–33 cm in tree areas, with dense needleleaf forest having a higher RMSE (33 cm) than leaf-off deciduous trees (20 cm).

In our case, none of the GNSS checkpoints or snow stakes were directly under canopy, yet there is a clear difference between the accuracies obtained for the open mire and mixed–forest subplots. For the studies that include forested areas, it is difficult to determine whether the reference data points are under canopy or between trees. Nevertheless, the general trend seems to be that forested areas yield less accurate snow depth data. Possible explanations include reduced GNSS accuracy, understory vegetation, shadows, other lighting-related issues, and the reduced accuracy of the SfM procedure in more complex landscapes.

Recent studies have also started to investigate a UAS–lidar approach to snow depth mapping, partly because lidar systems penetrate canopies better than SfM. Harder et al. (2020) compared UAS–SfM and UAS–lidar approaches and reported the UAS–lidar approach as equally successful in penetrating deciduous and needleleaf canopies, although the errors were larger (RMSE 13–17 cm) at vegetated sites than in open areas (9–10 cm). The UAS–SfM approach showed a wider variation in errors, as discussed above. Jacobs et al. (2021) used a more moderately priced UAS–lidar system, about a third of the price of the system used by Harder et al. (2020), and report RMSEs of 1.2 cm for open areas and 10.5 cm for mixed forest sites consisting of deciduous and coniferous trees. However, the system used by Jacobs et al. (2021) had a relatively short battery life, and the total reported survey time of 2 h for the 9.8 ha survey was relatively long. Dharmadasa et al. (2022) also used a UAS–lidar approach and report RMSEs of 4.3–22 cm for field sites, 7.9–12 cm for deciduous forest sites, and 19–22 cm for coniferous boreal forest sites. Furthermore, Dharmadasa et al. (2022) argue that remote sensing techniques alone cannot provide a comprehensive snow depth distribution under a coniferous canopy, despite the increased point density of the UAS–lidar approach compared to a traditional ALS approach. A recent study by Štroner et al. (2023) highlighted that, when mapping snow-free forest sites, a low-cost UAS–lidar (DJI Zenmuse L1) can produce much better coverage under the canopy but still has significantly lower vertical accuracy than a high-quality UAS–SfM camera (DJI Zenmuse P1) that costs half as much.

4.2 Operational challenges and further considerations

UAS platforms and their use in general topographic mapping have been reviewed by Nex and Remondino (2014) and Colomina and Molina (2014), relevant camera systems and camera settings by Mosbrucker et al. (2017) and O'Connor et al. (2017), and challenges related to, for example, location and weather by Duffy et al. (2018) and Kramar et al. (2022). Typical platform considerations, such as payload, flight speed, and wind resistance, are relevant for snow depth mapping, but some special considerations also apply. First, battery life may drop severely in sub-zero temperatures. Second, as plowed roads may not be available, portability can become an issue, depending on whether a snowmobile or sled is available or the crew has to rely on snowshoes or skis. When selecting suitable camera systems and settings for arctic and subarctic conditions, the short days and low-light conditions of high-latitude winters require extra attention. Emphasis should be placed on selecting a lens with a relatively large aperture and a large sensor that allows a high enough ISO value (i.e., sensor gain) to provide sufficiently fast shutter speeds without compromising the signal-to-noise ratio. The typically greater dynamic range of large sensors is also important, since polar-night conditions produce lower contrast over snow cover, while low sun angles in early spring create heavy shadows. However, a large sensor often increases camera size and weight, which in turn affects battery life, UAS platform size, and system portability.

When operating in the subarctic winter, weather-related phenomena pose the clearest challenges in terms of preflight preparation, operation, data quality, and battery life. In our case, study site evaluation and preliminary flights were performed during the spring preceding the study winter to find optimal flight parameters and suitable takeoff and landing areas. Owing to the remoteness of the site, the time and field crew size required for the ground and aerial surveys were also considered, with a target of completing each survey within 1 week. The most notable change concerned the size of the survey area, which was adjusted to ensure that it could be covered in the available time with the required data quality. This was necessary because of limited drone battery life, the short daily aerial survey window due to limited daylight in midwinter, often unpredictable weather conditions, and deep, soft snow slowing down the deployment of GCPs. Unpredictable weather places unavoidable limitations on all UAS operations; in our case, the planned surveys were postponed several times during the winter due to snowfall, very low temperatures, and high wind speeds.
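Whether a survey area fits into the available battery time can be estimated beforehand for a simple lawnmower flight pattern: line spacing follows from the across-track image footprint and the side overlap, and flight time follows from the total line length and ground speed. A rough sketch under stated assumptions (rectangular block, long image side across track, and a hypothetical fixed 10 s allowance per line for turns). With the hypothetical example values below, a 16 ha block at 80 % side overlap takes about 22.5 min, longer than a 15–20 min mission, so it would have to be split, flown higher, or flown with less overlap.

```python
import math


def mission_minutes(width_m, length_m, gsd_m, image_width_px, sidelap, speed_ms, turn_s=10.0):
    """Rough flight time (min) for parallel flight lines over a rectangular block.

    Lines run along length_m and are spaced by the across-track footprint
    (gsd_m * image_width_px) times (1 - sidelap). turn_s is an assumed
    per-line allowance for turning and acceleration.
    """
    spacing_m = gsd_m * image_width_px * (1.0 - sidelap)
    n_lines = math.ceil(width_m / spacing_m) + 1
    return n_lines * (length_m / speed_ms + turn_s) / 60.0


# Hypothetical 400 m x 400 m (16 ha) block, 2.74 cm GSD, 5472 px wide images, 5 m/s
print(f"80 % sidelap: {mission_minutes(400, 400, 0.0274, 5472, 0.8, 5.0):.1f} min")
print(f"70 % sidelap: {mission_minutes(400, 400, 0.0274, 5472, 0.7, 5.0):.1f} min")
```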

During fieldwork, wind and temperature data from local weather stations were used to find optimal time windows for the surveys. However, partly because of the nearby fells, sudden wind gusts forced flight operations to be halted a few times. On one occasion, sudden gusts held the autopilot-controlled UAS in place during a mission, and manual “tacking” maneuvers were required to bring it back to the takeoff and landing location. Field experience indicated that the more robust drones (P4A, P4RTK, eBee) could be operated in wind speeds of up to 10 m s⁻¹ with even higher gusts, whereas the lighter Mavic could not be confidently operated in such conditions.
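Screening weather station records for flyable periods, as done during fieldwork, can be automated. A minimal sketch, assuming records given as (time label, mean wind in m s⁻¹, gust in m s⁻¹, temperature in °C) tuples. The 10 m s⁻¹ mean-wind limit reflects the field experience above for the more robust platforms; the gust and temperature limits and the minimum window length are hypothetical placeholders to be set per platform and battery.

```python
def flyable_windows(records, max_wind=10.0, max_gust=15.0, min_temp=-25.0, min_len=2):
    """(start, end) time labels of runs of consecutive records that meet all limits.

    records: sequence of (time_label, mean_wind_ms, gust_ms, temp_c).
    min_len: minimum number of consecutive flyable records for a window.
    """
    windows, start = [], None
    for i, (_, wind, gust, temp) in enumerate(records):
        ok = wind <= max_wind and gust <= max_gust and temp >= min_temp
        if ok and start is None:
            start = i
        # Close the current run at the first unflyable record or at the end of the data
        if start is not None and (not ok or i == len(records) - 1):
            end = i if ok else i - 1
            if end - start + 1 >= min_len:
                windows.append((records[start][0], records[end][0]))
            start = None
    return windows


# Hypothetical hourly station data
hourly = [("10:00", 4, 7, -12), ("11:00", 5, 8, -11), ("12:00", 11, 16, -10),
          ("13:00", 6, 9, -10), ("14:00", 5, 8, -11), ("15:00", 4, 6, -13)]
print(flyable_windows(hourly))
```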

Furthermore, the topography of the area caused rapid temperature changes, especially in the open mire located at lower elevations between the fells. The survey in January was unsuccessful because very low temperatures (−30 °C) caused the lens system of the DJI Mavic to freeze, leading to unfocused aerial photographs. One potential cause is condensation and subsequent freezing of water in the lens mechanism, caused by temperature changes when moving from the warm storage area to a cold car and again to warm research station premises before outdoor flights in cold conditions. One possible solution could be to use desiccant bags to reduce moisture and a more heavily insulated bag for storing the UASs, to slow their acclimation to a new environment.
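Whether moving equipment from a cold car into a warm room leads to condensation can be checked against the dew point of the room air: any surface colder than the dew point collects water, or frost below 0 °C. A sketch using the Magnus approximation with commonly used coefficients over water (b = 17.62, c = 243.12 °C); below 0 °C the frost point over ice differs slightly, which this sketch ignores. The temperatures and humidity are hypothetical.

```python
import math

# Magnus approximation coefficients over liquid water
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degC


def dew_point_c(air_temp_c, rh_percent):
    """Dew point (degC) of air at air_temp_c with relative humidity rh_percent."""
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_risk(surface_temp_c, air_temp_c, rh_percent):
    """True if a surface at surface_temp_c is below the dew point of the surrounding air."""
    return surface_temp_c < dew_point_c(air_temp_c, rh_percent)


# Hypothetical: a lens at -20 degC brought into a 20 degC room at 40 % RH
print(round(dew_point_c(20.0, 40.0), 1), condensation_risk(-20.0, 20.0, 40.0))
```

Keeping the equipment sealed in an insulated bag while it warms up works because the air trapped in the bag holds little moisture, so little water can condense on the cold surfaces.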

UAS manufacturer guidelines usually give general recommendations on the operational temperature range of UAS batteries. In cold climates, the lower limit is naturally the concern, and significant drops in capacity or even malfunctions can occur at sub-zero temperatures (Ranquist et al., 2017). Some smart batteries also have digital warning indicators or even power cutoffs that prevent takeoff if the temperature is too low, thus requiring pre-heating. One good option is to store the batteries in a heat box to retain an optimal battery temperature and as much capacity as possible during fieldwork. The powertrain of the quadcopters was observed to create enough heat to keep the drones operational in cold temperatures, although this may vary between UAS models. Consumer-grade drones are usually certified to operate at above-zero temperatures, with some exceptions rated down to −10 °C (Ranquist et al., 2017). Real-world experience has shown that P4RTKs rated for operation between 0 and 40 °C can be fully operated at temperatures below −10 °C.

Temperatures close to 0 °C also caused problems due to moisture, especially for the fixed-wing systems. As highlighted by Revuelto et al. (2021), fixed-wing models that rely on belly landing, such as eBee, can have issues with rugged or wet surfaces. We only experienced issues with eBee during the spring melt season, when a malfunction resulted in a loss of data, possibly due to water getting into the electronics during a landing on wet snow. Fixed-wing UASs with VTOL (vertical takeoff and landing) capabilities, which have recently become more widely available, may partly mitigate the issue of sub-optimal landing areas while retaining the advantages of fixed-wing platforms, such as longer flight times and larger mapping extents compared to multicopters.

The UAS–lidar approach has several advantages over UAS–SfM, including more accurate DEM extraction when flying over homogeneous textures and potentially better penetration of the tree canopy. Unlike the UAS–SfM approach, which uses passive RGB camera sensors, UAS–lidar is an active measurement technique and thus allows nighttime operation, which becomes very useful during the winter months at northern latitudes, when daylight lasts only a couple of hours. However, a professional-grade UAS–lidar setup is considerably more expensive than a professional-grade UAS–SfM setup, although prices have dropped noticeably in recent years. Nonetheless, relatively cheap UASs relying on UAS–SfM, such as the DJI Mavic Pro, can do the job, especially in more open areas and under good lighting conditions. A slightly more expensive RTK-equipped UAS can be well worth the extra cost, as the need for GCPs is reduced and the data quality is generally superior due to better sensor capabilities and improved georeferencing.

Finally, some recommendations and observations can be summarized:

- The obtained results indicate that UASs with RTK correction and a single GCP for bias correction can provide sufficient accuracy for snow depth mapping with much less fieldwork involved, thus improving efficiency and safety.
- For areas considerably larger than the subplots (< 20 ha), multiple GCPs would likely be beneficial even with RTK-capable UASs.
- An accurate snow-free model is essential, since any errors will propagate to the snow depth models.
- A snow-free baseline obtained with ALS can benefit some UASs, especially in complex, forested landscapes.
- Polar twilight can provide enough (directional) light around solar noon in clear-sky conditions for the contrast required in UAS–SfM processing.
- Consumer-grade and professional UASs can be fully operated at temperatures below −10 °C, but care should be taken to keep batteries warm and to avoid rapid temperature changes when moving outdoors.

Our analysis indicates that the measurement accuracy of snow depth using the UAS–SfM approach in subarctic conditions is associated with (i) the UAS platform, (ii) land cover type, and, to a lesser degree, (iii) light conditions (i.e., polar twilight vs. direct sunlight) during flights. Significant differences between the UAS platforms were observed, with overall RMSEs ranging from 13.0 to 25.2 cm depending on the UAS. However, data from all platforms could be used in further analysis and to produce spatially detailed snow depth information, especially at times in winter when snow depths are large relative to their uncertainty (i.e., high signal-to-noise ratio).

All the tested UAS platforms exhibited increased uncertainty when operated in forest or mixed landscapes compared to open mire areas, even though none of the GNSS checkpoints or snow course measurement points were directly under canopy. Data collected in brighter spring conditions were slightly more accurate than data collected during low-light polar-night conditions; however, these differences were generally not statistically significant. Of the tested platforms, the eBee Plus RTK and DJI Mavic Pro clearly benefitted in accuracy from using ALS data for the snow-free model. This highlights the importance of an accurate snow-free model, as any large errors will propagate to all the snow depth maps. The DJI Phantom 4 RTK did not benefit from using ALS data and provided the best data quality in each situation.

The DJI Phantom 4 RTK provided the best overall accuracy (RMSE 13.0 cm) and correlation (r = 0.89) with the manual snow course measurements. We therefore recommend similar platforms using RTK or PPK with at least 1 GCP for snow depth mapping in areas of similar size (< 20 ha). Our findings demonstrate the potential of the UAS–SfM approach for measuring snow depth spatially and accurately in harsh subarctic conditions and under the influence of canopy structures. We propose that this technology be explored further and incorporated into regular snow monitoring schemes to help identify spatial snow cover changes, snow accumulation patterns, and overall snow depths.

The data underlying this analysis and their documentation are available at https://doi.org/10.23729/43d37797-e8cf-4190-80f1-ff567ec62836 (Rauhala et al., 2022) under a Creative Commons CC-BY-4.0 license.

The supplement related to this article is available online at: https://doi.org/10.5194/tc-17-4343-2023-supplement.

AR, HM, PA, and LJM designed the field studies, while AR, LJM, AK, and PK carried them out and processed the data. AR analyzed the data and prepared the manuscript with contributions from all co-authors. HM, TK, and PA supervised the research.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This study was supported by the Maa- ja vesitekniikan tuki ry, K. H. Renlund Foundation, Academy of Finland (projects 316349, 330319, and ArcI Profi 4); the Strategic Research Council (SRC) decision no. 312636 (IBC-Carbon); EU Horizon 2020 Research and Innovation Programme grant agreement no. 869471; and Kvantum Institute at the University of Oulu. We thank Valtteri Hyöky and the personnel of Metsähallitus for assisting with field sampling campaigns. We gratefully acknowledge the fieldwork assistance of Filip Muhic, Kashif Noor, Aleksi Ritakallio, Alexandre Pepy, Jari-Pekka Nousu, and Valtteri Hyöky.

This research has been supported by the Academy of Finland (grant nos. 316349, 330319, 312636, and ArcI Profi 4) and the Horizon 2020 (grant no. 869471).

This paper was edited by Carrie Vuyovich and reviewed by two anonymous referees.

Adams, M. S., Bühler, Y., and Fromm, R.: Multitemporal Accuracy and Precision Assessment of Unmanned Aerial System Photogrammetry for Slope-Scale Snow Depth Maps in Alpine Terrain, Pure Appl. Geophys., 175, 3303–3324, https://doi.org/10.1007/s00024-017-1748-y, 2018.

Ahmad, A. and Quegan, S.: Analysis of maximum likelihood classification on multispectral data, Appl. Math. Sci., 6, 6425–6436, 2012.

Aurela, M., Lohila, A., Tuovinen, J. P., Hatakka, J., Penttilä, T., and Laurila, T.: Carbon dioxide and energy flux measurements in four northern-boreal ecosystems at Pallas, Boreal Environ. Res., 20, 455–473, 2015.

Avanzi, F., Bianchi, A., Cina, A., De Michele, C., Maschio, P., Pagliari, D., Passoni, D., Pinto, L., Piras, M., and Rossi, L.: Centimetric accuracy in snow depth using unmanned aerial system photogrammetry and a multistation, Remote Sens., 10, 1–16, https://doi.org/10.3390/rs10050765, 2018.

Barnett, T. P., Adam, J. C., and Lettenmaier, D. P.: Potential impacts of a warming climate on water availability in snow-dominated regions, Nature, 438, 303–309, https://doi.org/10.1038/nature04141, 2005.

Benassi, F., Dall'Asta, E., Diotri, F., Forlani, G., di Cella, U. M., Roncella, R., and Santise, M.: Testing accuracy and repeatability of UAV blocks oriented with GNSS-supported aerial triangulation, Remote Sens., 9, 1–23, https://doi.org/10.3390/rs9020172, 2017.

Boelman, N. T., Liston, G. E., Gurarie, E., Meddens, A. J. H., Mahoney, P. J., Kirchner, P. B., Bohrer, G., Brinkman, T. J., Cosgrove, C. L., Eitel, J. U. H., Hebblewhite, M., Kimball, J. S., Lapoint, S., Nolin, A. W., Pedersen, S. H., Prugh, L. R., Reinking, A. K., and Vierling, L. A.: Integrating snow science and wildlife ecology in Arctic-boreal North America, Environ. Res. Lett., 14, 010401, https://doi.org/10.1088/1748-9326/aaeec1, 2019.

Bolkas, D.: Assessment of GCP Number and Separation Distance for Small UAS Surveys with and without GNSS-PPK Positioning, J. Surv. Eng., 145, 1–17, https://doi.org/10.1061/(ASCE)SU.1943-5428.0000283, 2019.

Brasington, J., Langham, J., and Rumsby, B.: Methodological sensitivity of morphometric estimates of coarse fluvial sediment transport, Geomorphology, 53, 299–316, https://doi.org/10.1016/S0169-555X(02)00320-3, 2003.

Bring, A., Fedorova, I., Dibike, Y., Hinzman, L., Mård, J., Mernild, S. H., Prowse, T., Semenova, O., Stuefer, S. L., and Woo, M. K.: Arctic terrestrial hydrology: A synthesis of processes, regional effects, and research challenges, J. Geophys. Res.-Biogeo., 121, 621–649, https://doi.org/10.1002/2015JG003131, 2016.

Broxton, P. D. and van Leeuwen, W. J. D.: Structure from motion of multi-angle RPAS imagery complements larger-scale airborne lidar data for cost-effective snow monitoring in mountain forests, Remote Sens., 12, 2311, https://doi.org/10.3390/rs12142311, 2020.

Bühler, Y., Adams, M. S., Bösch, R., and Stoffel, A.: Mapping snow depth in alpine terrain with unmanned aerial systems (UASs): potential and limitations, The Cryosphere, 10, 1075–1088, https://doi.org/10.5194/tc-10-1075-2016, 2016.

Bühler, Y., Adams, M. S., Stoffel, A., and Boesch, R.: Photogrammetric reconstruction of homogenous snow surfaces in alpine terrain applying near-infrared UAS imagery, Int. J. Remote Sens., 38, 3135–3158, https://doi.org/10.1080/01431161.2016.1275060, 2017.

Carey, S. K., Tetzlaff, D., Seibert, J., Soulsby, C., Buttle, J., Laudon, H., McDonnell, J., McGuire, K., Caissie, D., Shanley, J., Kennedy, M., Devito, K., and Pomeroy, J. W.: Inter-comparison of hydro-climatic regimes across northern catchments: Synchronicity, resistance and resilience, Hydrol. Process., 24, 3591–3602, https://doi.org/10.1002/hyp.7880, 2010.

Cimoli, E., Marcer, M., Vandecrux, B., Bøggild, C. E., Williams, G., and Simonsen, S. B.: Application of low-cost UASs and digital photogrammetry for high-resolution snow depth mapping in the Arctic, Remote Sens., 9, 1–29, https://doi.org/10.3390/rs9111144, 2017.

Colomina, I. and Molina, P.: Unmanned aerial systems for photogrammetry and remote sensing: A review, ISPRS J. Photogramm., 92, 79–97, https://doi.org/10.1016/j.isprsjprs.2014.02.013, 2014.

Currier, W. R., Pflug, J., Mazzotti, G., Jonas, T., Deems, J. S., Bormann, K. J., Painter, T. H., Hiemstra, C. A., Gelvin, A., Uhlmann, Z., Spaete, L., Glenn, N. F., and Lundquist, J. D.: Comparing Aerial Lidar Observations With Terrestrial Lidar and Snow-Probe Transects From NASA's 2017 SnowEx Campaign, Water Resour. Res., 55, 6285–6294, https://doi.org/10.1029/2018WR024533, 2019.

Deems, J. S., Painter, T. H., and Finnegan, D. C.: Lidar measurement of snow depth: A review, J. Glaciol., 59, 467–479, https://doi.org/10.3189/2013JoG12J154, 2013.

De Michele, C., Avanzi, F., Passoni, D., Barzaghi, R., Pinto, L., Dosso, P., Ghezzi, A., Gianatti, R., and Della Vedova, G.: Using a fixed-wing UAS to map snow depth distribution: an evaluation at peak accumulation, The Cryosphere, 10, 511–522, https://doi.org/10.5194/tc-10-511-2016, 2016.

Demiroglu, O. C., Lundmark, L., Saarinen, J., and Müller, D. K.: The last resort? Ski tourism and climate change in Arctic Sweden, J. Tour. Futur., 6, 91–101, https://doi.org/10.1108/JTF-05-2019-0046, 2019.

Dharmadasa, V., Kinnard, C., and Baraër, M.: An Accuracy Assessment of Snow Depth Measurements in Agro-Forested Environments by UAV Lidar, Remote Sens., 14, 1649, https://doi.org/10.3390/rs14071649, 2022.

Dietz, A. J., Kuenzer, C., Gessner, U., and Dech, S.: Remote sensing of snow – a review of available methods, Int. J. Remote Sens., 33, 4094–4134, https://doi.org/10.1080/01431161.2011.640964, 2012.

Duffy, J. P., Cunliffe, A. M., DeBell, L., Sandbrook, C., Wich, S. A., Shutler, J. D., Myers-Smith, I. H., Varela, M. R., and Anderson, K.: Location, location, location: considerations when using lightweight drones in challenging environments, Remote Sens. Ecol. Conserv., 4, 7–19, https://doi.org/10.1002/rse2.58, 2018.

Fernandes, R., Prevost, C., Canisius, F., Leblanc, S. G., Maloley, M., Oakes, S., Holman, K., and Knudby, A.: Monitoring snow depth change across a range of landscapes with ephemeral snowpacks using structure from motion applied to lightweight unmanned aerial vehicle videos, The Cryosphere, 12, 3535–3550, https://doi.org/10.5194/tc-12-3535-2018, 2018.

Forlani, G., Dall'Asta, E., Diotri, F., di Cella, U. M., Roncella, R., and Santise, M.: Quality assessment of DSMs produced from UAV flights georeferenced with on-board RTK positioning, Remote Sens., 10, 311, https://doi.org/10.3390/rs10020311, 2018.

Frei, A., Tedesco, M., Lee, S., Foster, J., Hall, D. K., Kelly, R., and Robinson, D. A.: A review of global satellite-derived snow products, Adv. Space Res., 50, 1007–1029, https://doi.org/10.1016/j.asr.2011.12.021, 2012.

Grünewald, T. and Lehning, M.: Are flat-field snow depth measurements representative? A comparison of selected index sites with areal snow depth measurements at the small catchment scale, Hydrol. Process., 29, 1717–1728, https://doi.org/10.1002/hyp.10295, 2015.

Gunn, G. E., Jones, B. M., and Rangel, R. C.: Unpiloted Aerial Vehicle Retrieval of Snow Depth Over Freshwater Lake Ice Using Structure From Motion, Front. Remote Sens., 2, 1–14, https://doi.org/10.3389/frsen.2021.675846, 2021.

Harder, P., Schirmer, M., Pomeroy, J., and Helgason, W.: Accuracy of snow depth estimation in mountain and prairie environments by an unmanned aerial vehicle, The Cryosphere, 10, 2559–2571, https://doi.org/10.5194/tc-10-2559-2016, 2016.

Harder, P., Pomeroy, J. W., and Helgason, W. D.: Improving sub-canopy snow depth mapping with unmanned aerial vehicles: lidar versus structure-from-motion techniques, The Cryosphere, 14, 1919–1935, https://doi.org/10.5194/tc-14-1919-2020, 2020.

Jacobs, J. M., Hunsaker, A. G., Sullivan, F. B., Palace, M., Burakowski, E. A., Herrick, C., and Cho, E.: Snow depth mapping with unpiloted aerial system lidar observations: a case study in Durham, New Hampshire, United States, The Cryosphere, 15, 1485–1500, https://doi.org/10.5194/tc-15-1485-2021, 2021.

James, M. R. and Robson, S.: Mitigating systematic error in topographic models derived from UAV and ground-based image networks, Earth Surf. Process. Land., 39, 1413–1420, https://doi.org/10.1002/esp.3609, 2014.

Kellomäki, S., Maajärvi, M., Strandman, H., Kilpeläinen, A., and Peltola, H.: Model computations on the climate change effects on snow cover, soil moisture and soil frost in the boreal conditions over Finland, Silva Fenn., 44, 213–233, https://doi.org/10.14214/sf.455, 2010.

Kramar, V., Röning, J., Erkkilä, J., Hinkula, H., Kolli, T., and Rauhala, A.: Unmanned Aircraft Systems and the Nordic Challenges, in: New Developments and Environmental Applications of Drones, edited by: Lipping, T., Linna, P., and Narra, N., Springer International Publishing, Cham, 1–30, https://doi.org/10.1007/978-3-030-77860-6_1, 2022.

Kuusisto, E.: Snow accumulation and snowmelt in Finland, Publications of the Water Research Institute 55, National Board of Waters, Helsinki, 149, ISBN 951-46-7494-4, 1984.

Lendzioch, T., Langhammer, J., and Jenicek, M.: Tracking forest and open area effects on snow accumulation by unmanned aerial vehicle photogrammetry, Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., XLI-B1, 917–923, https://doi.org/10.5194/isprs-archives-XLI-B1-917-2016, 2016.

Levene, H.: Robust Tests for Equality of Variances, in: Contributions to Probability and Statistics: Essays in Honor of Harold Hotelling, edited by: Olkin, I., Stanford University Press, Palo Alto, California, 278–292, ISBN 978-0-8047-0596-7, 1960.

Lundberg, A. and Koivusalo, H.: Estimating winter evaporation in boreal forests with operational snow course data, Hydrol. Process., 17, 1479–1493, https://doi.org/10.1002/hyp.1179, 2003.

Lundberg, A., Granlund, N., and Gustafsson, D.: Towards automated “ground truth” snow measurements – a review of operational and new measurement methods for Sweden, Norway, and Finland, Hydrol. Process., 24, 1955–1970, https://doi.org/10.1002/hyp.7658, 2010.

Martínez-Carricondo, P., Agüera-Vega, F., Carvajal-Ramírez, F., Mesas-Carrascosa, F. J., García-Ferrer, A., and Pérez-Porras, F. J.: Assessment of UAV-photogrammetric mapping accuracy based on variation of ground control points, Int. J. Appl. Earth Obs. Geoinf., 72, 1–10, https://doi.org/10.1016/j.jag.2018.05.015, 2018.

Marttila, H., Lohila, A., Ala-Aho, P., Noor, K., Welker, J. M., Croghan, D., Mustonen, K., Meriö, L., Autio, A., Muhic, F., Bailey, H., Aurela, M., Vuorenmaa, J., Penttilä, T., Hyöky, V., Klein, E., Kuzmin, A., Korpelainen, P., Kumpula, T., Rauhala, A., and Kløve, B.: Subarctic catchment water storage and carbon cycling – Leading the way for future studies using integrated datasets at Pallas, Finland, Hydrol. Process., 35, 1–19, https://doi.org/10.1002/hyp.14350, 2021.

Meriö, L.-J., Rauhala, A., Ala-aho, P., Kuzmin, A., Korpelainen, P., Kumpula, T., Kløve, B., and Marttila, H.: Measuring the spatiotemporal variability in snow depth in subarctic environments using UASs – Part 2: Snow processes and snow–canopy interactions, The Cryosphere, 17, 4363–4380, https://doi.org/10.5194/tc-17-4363-2023, 2023.

Mosbrucker, A. R., Major, J. J., Spicer, K. R., and Pitlick, J.: Camera system considerations for geomorphic applications of SfM photogrammetry, Earth Surf. Process. Land., 42, 969–986, https://doi.org/10.1002/esp.4066, 2017.

Nex, F. and Remondino, F.: UAV for 3D mapping applications: A review, Appl. Geomatics, 6, 1–15, https://doi.org/10.1007/s12518-013-0120-x, 2014.

Nolin, A. W.: Recent advances in remote sensing of seasonal snow, J. Glaciol., 56, 1141–1150, https://doi.org/10.3189/002214311796406077, 2010.

O'Connor, J., Smith, M. J., and James, M. R.: Cameras and settings for aerial surveys in the geosciences: Optimising image data, Prog. Phys. Geogr., 41, 325–344, https://doi.org/10.1177/0309133317703092, 2017.

Padró, J. C., Muñoz, F. J., Planas, J., and Pons, X.: Comparison of four UAV georeferencing methods for environmental monitoring purposes focusing on the combined use with airborne and satellite remote sensing platforms, Int. J. Appl. Earth Obs., 75, 130–140, https://doi.org/10.1016/j.jag.2018.10.018, 2019.

Pirinen, P., Simola, H., Aalto, J., Kaukoranta, J., Karlsson, P., and Ruuhela, R.: Tilastoja Suomen ilmastosta 1981–2010 [Climatological statistics of Finland 1981–2010], Finnish Meteorological Institute, Helsinki, 83 pp., ISBN 978-951-697-766-2, 2012.

Ranquist, E. A., Steiner, M., and Argrow, B.: Exploring the Range of Weather Impacts on UAS Operations, in: Proceedings of the 18th Conference on Aviation, Range and Aerospace Meteorology, Seattle, WA, USA, 22–26 January 2017.

Rauhala, A.: Accuracy assessment of UAS photogrammetry with GCP and PPK-assisted georeferencing, in: New Developments and Environmental Applications of Drones – Proceedings of FinDrones 2023, edited by: Westerlund, T. and Queralta, J. P., Springer, Cham, Switzerland, accepted, 2023.

Rauhala, A., Meriö, L.-J., Korpelainen, P., and Kuzmin, A.: Unmanned aircraft system (UAS) snow depth mapping at the Pallas Atmosphere-Ecosystem Supersite, Fairdata [data set], https://doi.org/10.23729/43d37797-e8cf-4190-80f1-ff567ec62836, 2022.

Redpath, T. A. N., Sirguey, P., and Cullen, N. J.: Repeat mapping of snow depth across an alpine catchment with RPAS photogrammetry, The Cryosphere, 12, 3477–3497, https://doi.org/10.5194/tc-12-3477-2018, 2018.

Revuelto, J., Alonso-Gonzalez, E., Vidaller-Gayan, I., Lacroix, E., Izagirre, E., Rodríguez-López, G., and López-Moreno, J. I.: Intercomparison of UAV platforms for mapping snow depth distribution in complex alpine terrain, Cold Reg. Sci. Technol., 190, 103344, https://doi.org/10.1016/j.coldregions.2021.103344, 2021.

Sanz-Ablanedo, E., Chandler, J. H., Rodríguez-Pérez, J. R., and Ordóñez, C.: Accuracy of Unmanned Aerial Vehicle (UAV) and SfM photogrammetry survey as a function of the number and location of ground control points used, Remote Sens., 10, 1606, https://doi.org/10.3390/rs10101606, 2018.

Štroner, M., Urban, R., Křemen, T., and Braun, J.: UAV DTM acquisition in a forested area – comparison of low-cost photogrammetry (DJI Zenmuse P1) and LiDAR solutions (DJI Zenmuse L1), Eur. J. Remote Sens., 56, 2179942, https://doi.org/10.1080/22797254.2023.2179942, 2023.

Stuefer, S. L., Kane, D. L., and Dean, K. M.: Snow Water Equivalent Measurements in Remote Arctic Alaska Watersheds, Water Resour. Res., 56, 1–12, https://doi.org/10.1029/2019WR025621, 2020.

Sturm, M. and Holmgren, J.: An Automatic Snow Depth Probe for Field Validation Campaigns, Water Resour. Res., 54, 9695–9701, https://doi.org/10.1029/2018WR023559, 2018.

Tomaštík, J., Mokroš, M., Surový, P., Grznárová, A., and Merganič, J.: UAV RTK/PPK method – an optimal solution for mapping inaccessible forested areas?, Remote Sens., 11, 721, https://doi.org/10.3390/rs11060721, 2019.

Tonkin, T. N. and Midgley, N. G.: Ground-control networks for image based surface reconstruction: An investigation of optimum survey designs using UAV derived imagery and structure-from-motion photogrammetry, Remote Sens., 8, 786, https://doi.org/10.3390/rs8090786, 2016.

Vander Jagt, B., Lucieer, A., Wallace, L., Turner, D., and Durand, M.: Snow Depth Retrieval with UAS Using Photogrammetric Techniques, Geosciences, 5, 264–285, https://doi.org/10.3390/geosciences5030264, 2015.

Veijalainen, N., Lotsari, E., Alho, P., Vehviläinen, B., and Käyhkö, J.: National scale assessment of climate change impacts on flooding in Finland, J. Hydrol., 391, 333–350, https://doi.org/10.1016/j.jhydrol.2010.07.035, 2010.

Westoby, M. J., Brasington, J., Glasser, N. F., Hambrey, M. J., and Reynolds, J. M.: “Structure-from-Motion” photogrammetry: A low-cost, effective tool for geoscience applications, Geomorphology, 179, 300–314, https://doi.org/10.1016/j.geomorph.2012.08.021, 2012.

Yu, J. J., Kim, D. W., Lee, E. J., and Son, S. W.: Determining the optimal number of ground control points for varying study sites through accuracy evaluation of unmanned aerial system-based 3D point clouds and digital surface models, Drones, 4, 1–19, https://doi.org/10.3390/drones4030049, 2020.

Zhang, H., Aldana-Jague, E., Clapuyt, F., Wilken, F., Vanacker, V., and Van Oost, K.: Evaluating the potential of post-processing kinematic (PPK) georeferencing for UAV-based structure-from-motion (SfM) photogrammetry and surface change detection, Earth Surf. Dynam., 7, 807–827, https://doi.org/10.5194/esurf-7-807-2019, 2019.

Zhang, Z., Glaser, S., Bales, R., Conklin, M., Rice, R., and Marks, D.: Insights into mountain precipitation and snowpack from a basin-scale wireless-sensor network, Water Resour. Res., 53, 6626–6641, https://doi.org/10.1002/2016WR018825, 2017.