This work is distributed under the Creative Commons Attribution 4.0 License.

Correcting errors in seasonal Arctic sea ice prediction of Earth system models with machine learning

Zikang He

Julien Brajard

Xidong Wang

Zheqi Shen

While Earth system models are essential for seasonal Arctic sea ice prediction, they often exhibit significant errors that are challenging to correct. In this study, we integrate a multilayer perceptron (MLP) machine learning (ML) model into the Norwegian Climate Prediction Model (NorCPM) to improve seasonal sea ice predictions. We compare the online and offline error correction approaches. In the online approach, ML corrects errors in the model's instantaneous state during the model simulation, while in the offline approach, ML post-processes and calibrates predictions after the model simulation. Our results show that the ML models effectively learn and correct dynamical model errors in both approaches, leading to improved predictions of Arctic sea ice during the test period (i.e., 2003–2021). Both approaches yield the most significant improvements in the marginal ice zone, where error reductions in sea ice concentration exceed 20 %. These improvements vary seasonally, with the most substantial enhancements occurring in the Atlantic, Siberian, and Pacific regions from September to January. The offline error correction approach consistently outperforms the online error correction approach. This is primarily because the online approach targets only instantaneous model errors on the 15th of each month, while errors can grow during the subsequent 1-month model integration due to interactions among the model components, damping the error correction in monthly averages. Notably, in September, the online approach reduces the error of the pan-Arctic sea ice extent by 50 %, while the offline approach achieves a 75 % error reduction.

According to satellite observations, Arctic sea ice extent (SIE) has declined rapidly in all calendar months over recent decades (e.g., Serreze et al., 2007; Onarheim et al., 2018; Wang et al., 2022; Heuzé and Jahn, 2024). The largest reductions have occurred in summer and autumn (e.g., September, Stroeve et al., 2014). The expanding open water has led to growing socioeconomic activity in the Arctic (e.g., fisheries, shipping, and resource extraction), which creates a strong demand for accurate seasonal predictions of Arctic sea ice conditions (Jung et al., 2016; Wagner et al., 2020). The Sea Ice Outlook, managed by the Sea Ice Prediction Network, produces monthly reports during the Arctic sea ice retreat season that synthesize input from the international research community devoted to improving sea ice predictions. Recently, Bushuk et al. (2024) evaluated 17 statistical models, 17 dynamical models, and one heuristic approach in predicting September Arctic sea ice. Overall, they found that dynamical and statistical models are comparable in predicting the pan-Arctic SIE and that dynamical models generally outperform statistical models in predicting the regional SIE and sea ice concentration (SIC, i.e., local quantities). Bushuk et al. (2024) also suggested that improved initialization and higher model resolution are expected to lead to better predictions.

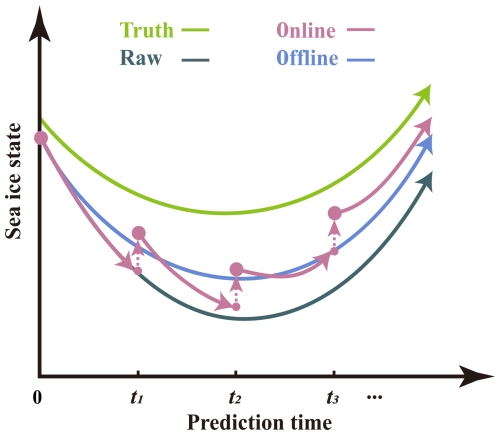

Data assimilation (DA) integrates observations with dynamical models to optimally estimate the state of the climate system (Penny and Hamill, 2017; Carrassi et al., 2018). It is widely used to produce reanalyses (Saha et al., 2006; Dee et al., 2011; Laloyaux et al., 2018; Zuo et al., 2019; Hersbach et al., 2020), which offer comprehensive, continuous, and dynamically consistent reconstructions of past climate states. Meanwhile, many prediction centers are adopting DA methods to reduce uncertainties in initial conditions (Wang et al., 2013, 2019; Vitart et al., 2017; Blockley and Peterson, 2018; Kimmritz et al., 2019; Bushuk et al., 2024). The improved quantity and quality of observations across climate system components, together with advanced DA methods, enable more accurate initial conditions for seasonal predictions of Arctic sea ice. Nevertheless, even with perfect initial conditions, prediction errors grow over time because of the inherent deficiencies of dynamical models in emulating the true climate system (gray and green lines in Fig. 1). This underscores the need to correct prediction errors.

Figure 1. Schema for the online and offline ML-based error correction approaches. The green line represents the “truth”. The gray line represents the dynamical prediction without error correction. The purple (blue) line represents the prediction with online (offline) ML-based error correction. The purple dashed arrows indicate pauses during the prediction, at which the instantaneous model states are corrected.

Machine learning (ML) has recently emerged as a data-driven technique to mitigate dynamical prediction errors. Two prevalent approaches are constructing an ML–dynamical hybrid model (e.g., Brajard et al., 2021; Watt-Meyer et al., 2021) and post-processing/calibrating model outputs (e.g., Yang et al., 2023; Palerme et al., 2024). The former is referred to as online error correction and the latter as offline error correction.

In online error correction, ML is applied to correct errors in the instantaneous model state (i.e., the initial conditions for the following model integration) and is applied sequentially to update the instantaneous model state during the simulation (e.g., Brajard et al., 2021), which yields an ML–dynamical hybrid model (purple line in Fig. 1). Such online error correction approaches have been investigated both in idealized frameworks (e.g., Watson, 2019; Brajard et al., 2021) and in real applications (e.g., Watt-Meyer et al., 2021). Watson (2019) examined the tendency error correction approach in the Lorenz 96 model. Brajard et al. (2021) explored the resolvent error correction approach in the two-scale Lorenz model as well as in a low-order coupled ocean–atmosphere model, the Modular Arbitrary-Order Ocean–Atmosphere Model (MAOOAM, De Cruz et al., 2016). Watt-Meyer et al. (2021) demonstrated that online error correction can improve short-term prediction skill and the accuracy of simulated precipitation while allowing the dynamical model to run indefinitely without numerical instabilities. Gregory et al. (2024) applied ML to correct sea ice errors in an ocean–ice coupled model and demonstrated that ML can effectively reduce sea ice bias in a 5-year simulation. So far, ML-based online error correction has not been tested for seasonal sea ice prediction in an Earth system model.

Offline error correction consists of post-processing (also called calibrating) the dynamical model predictions (blue line in Fig. 1). ML is trained to predict the errors of time-averaged model outputs (e.g., daily or monthly means) and is then applied to correct the errors in raw predictions. The most common error correction methods employed in sea ice prediction (Bushuk et al., 2024) are relatively simple (e.g., correction of the mean error or a linear regression adjustment, Barthelemy et al., 2017). More recently, Palerme et al. (2024) applied ML to improve the skill of sea ice forecasts on meteorological timescales. Overall, they showed that ML-based offline calibration reduced the SIC prediction errors by 41 % and the ice edge distance error by 44 %. Their application focused on short-term sea ice prediction (up to 10 d) in an ocean–ice coupled model.
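For reference, the two simple calibrations mentioned above (mean-error correction and a linear regression adjustment) fit in a few lines. This is an illustrative sketch only: it assumes a scalar predictand, such as a regional SIE, with one hindcast and one verifying value per past year, and the function names are hypothetical rather than taken from Barthelemy et al. (2017) or Bushuk et al. (2024).

```python
import numpy as np


def mean_error_correction(hindcasts, truth, forecast):
    """Subtract the mean past error (hindcast minus truth) from a new forecast."""
    mean_error = np.mean(np.asarray(hindcasts) - np.asarray(truth), axis=0)
    return np.asarray(forecast) - mean_error


def linear_regression_correction(hindcasts, truth, forecast):
    """Fit truth = a * hindcast + b over past years, then apply it to a new forecast."""
    a, b = np.polyfit(np.asarray(hindcasts), np.asarray(truth), deg=1)
    return a * np.asarray(forecast) + b


# Illustrative numbers only (e.g. a September SIE in 10^6 km^2).
past_hindcasts = [5.9, 5.6, 5.8, 5.3]
past_truth = [5.0, 4.8, 4.9, 4.5]
print(mean_error_correction(past_hindcasts, past_truth, 5.5))
print(linear_regression_correction(past_hindcasts, past_truth, 5.5))
```

The mean-error version only shifts the forecast, whereas the regression can also rescale it; neither uses the spatial or multivariate information that the ML approaches below exploit.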

In this study, we apply ML to the Norwegian Climate Prediction Model (NorCPM, Wang et al., 2019), a fully coupled Earth system model, for seasonal prediction of Arctic sea ice. We test and compare the ML-based online and offline error correction approaches. In the online approach, we build a hybrid model combining ML and NorCPM that updates the instantaneous sea ice state during the production of seasonal predictions. In the offline approach, we use ML to calibrate raw seasonal predictions of Arctic sea ice. Comparing the two approaches within the same framework provides the sea ice prediction community with new insights into how to use ML effectively for seasonal Arctic sea ice prediction.

The paper is organized as follows: Sect. 2 presents the dynamical model, data, ML-based error correction approaches, experimental design, and metrics for validation. Section 3 shows the results of different experiments. We finish with the discussion and conclusions in Sect. 4.

2.1 Norwegian Climate Prediction Model

The dynamical model we used is NorCPM (Counillon et al., 2014, 2016; Wang et al., 2016, 2017; Kimmritz et al., 2018, 2019). It combines the Norwegian Earth System Model version 1 (NorESM1, Bentsen et al., 2013) and a deterministic formulation of an advanced flow-dependent DA method called an ensemble Kalman filter (EnKF, Sakov and Oke, 2008).

NorESM1 (Bentsen et al., 2013) is a fully coupled Earth system model used for climate simulations. Its ocean component is the Bergen Layered Ocean Model (BLOM, Bentsen et al., 2013) – an updated version of the isopycnal coordinate ocean model MICOM (Bleck et al., 1995). The sea ice component is the Los Alamos sea ice model version 4 (CICE4, Gent et al., 2011; Holland et al., 2012). The atmospheric component is a variant of the Community Atmosphere Model version 4 (CAM4-Oslo, Kirkevåg et al., 2018). The land component is the Community Land Model (CLM4, Thornton, 2010; Lawrence et al., 2011). Furthermore, the version 7 coupler (CPL7, Craig et al., 2012) is utilized for inter-component communication and interaction. The external forcings follow the protocol of the Coupled Model Intercomparison Project Phase 5 (CMIP5) historical experiment (Taylor et al., 2012).

The atmospheric and land components are situated on the National Center for Atmospheric Research (NCAR) finite-volume 2° grid, featuring a regular 1.9°×2.5° latitude–longitude resolution with 26 hybrid sigma–pressure levels extending to 3 hPa. The ocean and sea ice components use NCAR's gx1v6 horizontal grid, a curvilinear grid with a nominal 1° resolution and the northern pole singularity shifted over Greenland (Bethke et al., 2021). The grid resolution is enhanced meridionally towards the Equator and both zonally and meridionally towards the poles. The ocean component comprises 51 isopycnic layers, with a bulk mixed layer representation on top consisting of two layers with time-evolving thicknesses and densities.

The sea ice component is equipped with five ice thickness categories to account for the different thermodynamic and dynamic properties of ice with different thicknesses. The volume of snow and ice, the energy content, and the SIC, surface temperature, and volume-weighted mean ice age are determined for each of the ice thickness categories (Bentsen et al., 2013; Kimmritz et al., 2018, 2019).

NorESM1 tends to produce overly thick sea ice, especially in the polar oceans adjacent to the Eurasian continent. This is partly due to factors such as too weak winds across the polar basin and overestimated Arctic cloudiness, which leads to too little summer snowmelt. Consequently, the Arctic summer SIE has large positive biases, contributing to an underestimation of global temperatures (Bentsen et al., 2013; Bethke et al., 2021).

NorCPM uses the EnKF to update unobserved ocean and sea ice variables by leveraging state-dependent covariance from the simulation ensemble (Kimmritz et al., 2018, 2019). The EnKF allows the assimilation of observations of various types while accounting for observational errors, spatial coverage, and the evolving covariance with the climate state. The EnKF accounts for uncertainties in initial conditions to generate ensemble predictions, which evolve in time and provide time- and space-dependent error estimates.

NorCPM employs anomaly field assimilation (Kimmritz et al., 2019; Wang et al., 2019; Bethke et al., 2021) in which the climatology of the observations is replaced by the model climatology calculated from the ensemble mean of the model historical simulation (without assimilation). While the anomaly field assimilation keeps the model close to its attractor and helps to reduce the model drift during the monthly model integration (Carrassi et al., 2014; Weber et al., 2015), it does not significantly change model biases.
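A minimal sketch of the idea behind anomaly field assimilation, assuming per-grid-point, per-calendar-month climatologies are already available (the function and variable names are hypothetical, not NorCPM code). It illustrates why a constant model bias is not corrected: the bias appears in both the forecast and the model climatology and cancels in the innovation the filter sees.

```python
import numpy as np


def anomaly_innovation(obs, obs_clim, forecast, model_clim):
    """Innovation used in anomaly assimilation.

    The observed anomaly (obs - obs_clim) is re-referenced to the model
    climatology, giving a pseudo-observation model_clim + (obs - obs_clim),
    which is then compared with the forecast.
    """
    pseudo_obs = np.asarray(model_clim) + (np.asarray(obs) - np.asarray(obs_clim))
    return pseudo_obs - np.asarray(forecast)


# Hypothetical SIC values (%) at one grid point for one calendar month.
obs, obs_clim = 60.0, 55.0    # observation is 5 % above its climatology
model_clim = 75.0             # model climatology, biased high by 20 %
forecast = model_clim + 5.0   # model anomaly already matches the observed anomaly
print(anomaly_innovation(obs, obs_clim, forecast, model_clim))
```

Here the innovation is zero even though the forecast is 20 % higher than the observation; with full-field assimilation the innovation would instead be obs minus forecast, and the filter would try to remove the bias.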

2.2 Data

In this study, we use the NorCPM reanalysis as the “truth” to assess the improvement achieved by the ML-based error correction approaches, for three reasons. First, because NorCPM performs anomaly field assimilation, the large model biases are not corrected by DA (Sect. 2.1); thus, the analysis increments of the reanalysis used to build the online error correction model (Sect. 2.3) do not account for model biases. Second, the online error correction approach needs to consistently update the SIC in each thickness category, as well as sea surface temperature (SST) and sea surface salinity (SSS) under sea ice, which are often not observed. The NorCPM reanalysis is a physically consistent reconstruction of the Earth system (Counillon et al., 2016; Kimmritz et al., 2019) and provides a reasonable estimate of these variables. Finally, the reanalysis, which combines observations with NorESM, represents the upper limit of the sea ice predictability of NorCPM.

The reanalysis is available from 1980 to 2021 with 30 ensemble members. The initial states of the reanalysis on 15 January 1980 are taken from a NorESM ensemble run integrated from 1850 to 1980 with CMIP5 historical forcings. In this reanalysis, NorCPM assimilates monthly anomalies of SST, SIC, and subsurface hydrographic profile data in the middle of each month.

From 1980 to 2002, the climatology used for anomaly field assimilation is defined over the period 1980–2010. SST and SIC observations are from HadISST2 (Titchner and Rayner, 2014), and subsurface hydrographic profile data are from EN4.2.1 (Good et al., 2013). The assimilation process consists of two steps, as described in Kimmritz et al. (2019): first, hydrographic DA updates the ocean state (Wang et al., 2017); subsequently, SST and SIC DA update the sea ice and ocean states within the ocean mixed layer. From 2003 to 2021, the climatology used for anomaly field assimilation is defined over 1982–2016. SST and SIC observations are from OISST (Reynolds et al., 2007), and subsurface hydrographic profile data are from EN4.2.1 (Good et al., 2013). Strongly coupled DA is performed to update the sea ice and ocean states simultaneously in a single step.

After each assimilation step, a post-processing step is used to ensure the physical consistency of state variables. For example, the volume of each sea ice category is proportionally adjusted based on the updated SIC (Kimmritz et al., 2018, 2019). The other model components, such as the atmosphere and land, are dynamically adjusted through the coupler during model integration between two assimilation steps.

2.3 Online error correction approach

The online error correction model is trained on the analysis increments of the reanalysis introduced in Sect. 2.2 (Brajard et al., 2021; Gregory et al., 2024) and is applied sequentially to update the instantaneous model state in the middle of each month during the prediction simulation (purple line in Fig. 1), similarly to the monthly update in the reanalysis system (Sect. 2.2).

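The monthly model integration of the reanalysis (Sect. 2.2) can be described as follows:

$$\mathbf{x}^{f}_{t_k} = \mathcal{M}\left(\mathbf{x}^{a}_{t_{k-1}}\right),$$

where $\mathbf{x}^{f}_{t_k}$ represents the forecasted instantaneous model state at $t_k$, and $\mathcal{M}$ represents the dynamical model integration from time $t_{k-1}$ to $t_k$ (Sect. 2.1). During the analysis, DA uses available observations to generate $\mathbf{x}^{a}_{t_k}$, an updated instantaneous model state that serves as the initial condition for the next monthly model integration from time $t_k$ to time $t_{k+1}$.

The online approach is to emulate the difference between the forecast $\mathbf{x}^{f}_{t_k}$ and the analysis $\mathbf{x}^{a}_{t_k}$, which corresponds to the opposite of the analysis increment in DA. The error prediction model can be expressed as

$$\boldsymbol{\varepsilon}_{t_k} = \mathcal{M}_e\left(\mathbf{x}^{f}_{t_k}\right) \approx \mathbf{x}^{f}_{t_k} - \mathbf{x}^{a}_{t_k},$$

where $\mathcal{M}_e$ represents the data-driven model taking the instantaneous model state $\mathbf{x}^{f}$ as input and $\boldsymbol{\varepsilon}$ represents the predicted model error.

The hybrid model, incorporating the dynamical model and the online error correction model, can be expressed as follows:

$$\mathbf{x}^{c}_{t_l} = \mathcal{M}\left(\mathbf{x}^{c}_{t_{l-1}}\right) - \mathcal{M}_e\left[\mathcal{M}\left(\mathbf{x}^{c}_{t_{l-1}}\right)\right],$$

where $\mathbf{x}^{c}_{t_l}$ represents the error-corrected instantaneous model state at $t_l$ during the prediction.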
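The hybrid model described above can be read as a simple loop: integrate for one month, predict the forecast error with the ML model, subtract it, and restart from the corrected state. The sketch below assumes that the one-month NorCPM integration and the trained ML error model are available as Python callables. `model_step`, `error_model`, and `postprocess` are hypothetical stand-ins; in practice the correction is applied to restart files between model runs.

```python
from typing import Callable, List

import numpy as np

Array = np.ndarray


def run_hybrid_prediction(
    x0: Array,
    n_months: int,
    model_step: Callable[[Array], Array],
    error_model: Callable[[Array], Array],
    postprocess: Callable[[Array], Array] = lambda x: x,
) -> List[Array]:
    """Alternate one-month integrations with ML corrections of the mid-month state."""
    states = []
    x = x0
    for _ in range(n_months):
        x_f = model_step(x)          # forecast M(x^c_{t_{l-1}}) at the next mid-month
        eps = error_model(x_f)       # predicted error: forecast minus analysis
        x = postprocess(x_f - eps)   # corrected state x^c_{t_l}, used as restart
        states.append(x)
    return states


# Toy check: a "model" that drifts by +1 per month and a perfect error model
# that predicts exactly that drift keep the state fixed.
drift_model = lambda x: x + 1.0
perfect_error = lambda x: np.ones_like(x)
print(run_hybrid_prediction(np.array([0.5]), 3, drift_model, perfect_error))
```

In NorCPM, the error model would only return nonzero corrections for SIC, SST, and SSS in ice-covered cells, and `postprocess` would apply the physical-consistency rules given later in this section.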

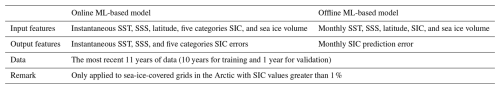

We aim to correct SIC, SST, and SSS errors in the ice-covered area, as these variables are directly associated with the sea ice conditions. Considering the seasonality of the sea ice state errors, we build one error correction model for each calendar month. We also employ a running training strategy that uses the most recent 11 years of data before the prediction month (the first 10 years for training and the last year for validation). The input features are latitude, SST, SSS, the SIC of each of the five thickness categories, and the sea ice volume of each of the five categories in the middle of the month. The output features are the errors in SST, SSS, and the SIC of each of the five categories (Table 1). Please refer to Sect. 2.5 for the ML configuration.
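The running, per-calendar-month training split can be sketched as follows. The record layout `(year, month, sample)` and the helper names are assumptions for illustration; the window lengths (10 training years followed by 1 validation year) follow the text, and the test-year examples match those given in Sect. 2.5.

```python
from typing import Iterable, List, Tuple


def running_window(test_year: int, n_train: int = 10, n_val: int = 1) -> Tuple[List[int], List[int]]:
    """Training and validation years immediately preceding a test year."""
    first = test_year - n_train - n_val
    train_years = list(range(first, first + n_train))
    val_years = list(range(first + n_train, test_year))
    return train_years, val_years


def split_for_month(records: Iterable[Tuple[int, int, object]], test_year: int, month: int):
    """Select the samples of one calendar month for that month's model.

    records: (year, month, sample) tuples, where a sample would hold the
    gridded inputs and targets for that date (hypothetical container).
    """
    records = list(records)  # allow a generator to be scanned twice
    train_years, val_years = running_window(test_year)
    train = [s for (y, m, s) in records if m == month and y in train_years]
    val = [s for (y, m, s) in records if m == month and y in val_years]
    return train, val


print(running_window(2011))
```

Training one model per calendar month on only the most recent decade keeps each model focused on the error regime of its season and of the current, reduced-ice climate.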

Before restarting the model after applying online error correction, it is essential to ensure that the updated variables remain within physical limits (e.g., SIC between 0 % and 100 %) and maintain consistency with non-updated variables. If unphysical values or inconsistencies arise, they can lead to model instability. To prevent these issues, we apply a post-processing method specifically designed for NorCPM (Kimmritz et al., 2018):

-

If SIC in any thickness category falls below 0 % or exceeds 100 %, it is set to 0 % or 100 %, respectively.

-

If the total SIC across all thickness categories exceeds 100 %, SIC values in each category are proportionally scaled to ensure the total does not surpass 100 %.

-

Sea ice volume in each category is adjusted proportionally to changes in SIC while preserving the ice thickness.

This post-processing enforces the physical constraints and maintains model stability after the error correction.
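The three post-processing rules above can be sketched with NumPy as follows. This is not the NorCPM post-processing code: it assumes per-category SIC in percent stored along the first array axis and handles only the ice volume (the snow volume and energy content that the model also carries per category are omitted).

```python
import numpy as np


def postprocess_categories(sic_new, sic_old, vol_old):
    """Make corrected per-category SIC physical and keep ice thickness unchanged.

    sic_new : corrected SIC per thickness category (%), shape (n_cat, ...)
    sic_old : SIC per category before the correction (%), same shape
    vol_old : ice volume per unit grid-cell area before the correction, same shape
    """
    # Rule 1: each category must lie within [0, 100] %.
    sic = np.clip(sic_new, 0.0, 100.0)

    # Rule 2: if the categories sum to more than 100 %, scale them down proportionally.
    total = sic.sum(axis=0)
    scale = np.where(total > 100.0, 100.0 / np.maximum(total, 1e-12), 1.0)
    sic = sic * scale

    # Rule 3: scale volume by the SIC change so that thickness (vol / sic) is preserved.
    # Categories with no ice before the correction keep zero volume in this sketch;
    # how NorCPM treats that case is not described in the text (assumption).
    ratio = np.divide(sic, sic_old, out=np.zeros_like(sic, dtype=float), where=sic_old > 0)
    vol = vol_old * ratio
    return sic, vol


sic, vol = postprocess_categories(np.array([70.0, 60.0]), np.array([50.0, 50.0]), np.array([1.0, 2.0]))
print(sic, vol)
```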

2.4 Offline error correction approach

The offline error correction approach refers to performing post-processing of the dynamical model predictions (blue line in Fig. 1). The ML configuration is the same as the online configuration (Sect. 2.5). The input features are monthly SST, SSS, total SIC, and latitude. The output feature is the error in the monthly SIC. The predicted error is subtracted from the monthly SIC. If the updated monthly SIC falls below 0 % or exceeds 100 %, it is set to 0 % or 100 %, respectively. For more details about the offline error correction approach, please refer to Table 1.
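A corresponding sketch of the offline calibration step, assuming the ML model has already produced a predicted error field on the same grid as the monthly SIC (the function name is hypothetical). It also applies the 1 % total-SIC threshold that Sect. 2.5 describes, so that no ice is created in cells the model predicts to be ice-free.

```python
import numpy as np


def offline_correct(sic_monthly, predicted_error, min_sic=1.0):
    """Calibrate monthly-mean total SIC (%) with an ML-predicted error.

    The error follows the sign convention used in this study (prediction
    minus truth), so it is subtracted. Cells with SIC <= min_sic are left
    untouched; the result is clipped to [0, 100] %.
    """
    sic = np.asarray(sic_monthly, dtype=float)
    corrected = np.where(sic > min_sic, sic - np.asarray(predicted_error), sic)
    return np.clip(corrected, 0.0, 100.0)


print(offline_correct([0.5, 50.0, 98.0], [10.0, 5.0, -5.0]))
```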

It is worth noting that the offline error correction approach directly targets monthly average model outputs, whereas the online error correction approach addresses instantaneous model errors (Fig. 1) and indirectly changes the monthly model outputs during the production of predictions. Therefore, their input and output features are different (Table 1).

2.5 Machine learning configuration

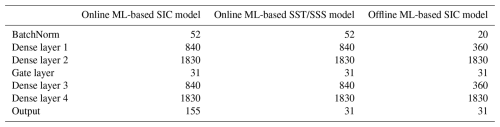

As mentioned in the previous sections, the ML model configurations employed for online and offline error correction approaches share an identical architecture (i.e., the same number of layers and the same number of neurons in each layer) but differ in the input and output variables, resulting in different numbers of trainable parameters (for more details, please refer to Table 1).

The ML model takes the values at a single grid point as input and predicts the target at the same grid point, so a single ML model is shared by all grid points. This simplifies training while still yielding efficient models.

The ML architecture used in this study is a multilayer perceptron (MLP), a fully connected neural network well suited to capturing complex nonlinear relationships in data. MLPs offer several advantages, including flexibility in handling diverse input features, efficient training via backpropagation, and strong generalization when properly regularized. They are also computationally cheaper than more complex deep learning architectures such as convolutional neural networks (CNNs) and U-Net, and require less training data (Jia et al., 2019; Watson, 2019). MLPs have been successfully applied to error correction in geophysical modeling (e.g., Yang et al., 2023).

The entire MLP architecture consists of seven layers.

-

Input layer: a batch normalization layer (Ioffe, 2017), which helps stabilize and accelerate the training process by normalizing the input features.

-

Second layer: a dense layer with 60 neurons, using the rectified linear unit (ReLU) activation function.

-

Third layer: a dense layer with 30 neurons, also using the ReLU activation function. It is of the same type as the second layer but has half as many neurons.

-

Fourth layer: an attention mechanism implemented via a gate layer, which enables the model to focus on important features, thereby enhancing learning efficiency and predictive performance.

-

Fifth layer: a dense layer with 60 neurons and ReLU activation, mirroring the configuration of the second layer.

-

Sixth layer: a dense layer with 30 neurons and ReLU activation, identical to the third layer.

-

Output layer: a dense layer activated by the linear function.
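To make the layer list concrete, below is a NumPy forward pass for the described stack, with untrained random weights. Two points are assumptions because the text does not specify them: the gate layer is modeled as a sigmoid-activated dense layer whose output multiplies its input element-wise, and batch normalization is shown in inference mode with stored statistics. The text does not name the training framework, so training is not shown. Parameter counts returned by `count_params` reflect these assumptions and are not guaranteed to match Table 2.

```python
import numpy as np

# Hidden stack from the list above; "gate" is an assumed sigmoid gating layer.
HIDDEN = [("dense", 60), ("dense", 30), ("gate", 30), ("dense", 60), ("dense", 30)]


def init_params(n_in, n_out, seed=0):
    """Random (untrained) weights for the assumed architecture."""
    rng = np.random.default_rng(seed)
    bn = {"gamma": np.ones(n_in), "beta": np.zeros(n_in),
          "mean": np.zeros(n_in), "var": np.ones(n_in)}
    layers, width = [], n_in
    for kind, units in HIDDEN + [("linear", n_out)]:
        w = rng.normal(0.0, np.sqrt(2.0 / width), size=(width, units))
        layers.append((kind, w, np.zeros(units)))
        width = units
    return {"bn": bn, "layers": layers}


def forward(params, x, eps=1e-3):
    """Inference-mode forward pass for a batch of grid points, x: (batch, n_in)."""
    bn = params["bn"]
    h = bn["gamma"] * (x - bn["mean"]) / np.sqrt(bn["var"] + eps) + bn["beta"]
    for kind, w, b in params["layers"]:
        z = h @ w + b
        if kind == "dense":
            h = np.maximum(z, 0.0)              # ReLU
        elif kind == "gate":
            h = h * (1.0 / (1.0 + np.exp(-z)))  # element-wise sigmoid gate
        else:
            h = z                               # linear output layer
    return h


def count_params(params):
    """All parameters, including the two non-trainable batch-norm statistics."""
    n = sum(v.size for v in params["bn"].values())
    return n + sum(w.size + b.size for _, w, b in params["layers"])


online = init_params(n_in=13, n_out=7)  # latitude, SST, SSS, 5 SIC, 5 volumes -> 7 errors
offline = init_params(n_in=4, n_out=1)  # SST, SSS, total SIC, latitude -> SIC error
print(forward(online, np.zeros((8, 13))).shape, count_params(online), count_params(offline))
```

Under these assumptions, the sketch gives 7559 parameters for the online configuration and 6797 for the offline one; the difference comes only from the input and output widths, consistent with the shared hidden architecture described in the text.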

The objective function used in this study is the mean squared error (MSE). Additionally, details regarding the number of parameters for each ML model are provided in Table 2. To reduce the risk of overfitting and improve model generalization, the following strategies are implemented.

-

Batch normalization: the inputs of each layer are normalized to reduce internal covariate shift, thus promoting training stability and generalization.

-

L2 regularization: a penalty is applied to the output layer weights, effectively discouraging overly complex models and reducing the likelihood of overfitting.

-

Early stopping: the validation loss is monitored during training, and training is halted once the validation loss stops decreasing, which prevents overfitting to the training data.
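The objective and the early-stopping rule can be sketched as below. The L2 coefficient `l2`, the `patience`, and `min_delta` are hypothetical values because the text does not report them; the penalty is applied to the output-layer weights only, as stated above.

```python
import numpy as np


def objective(y_pred, y_true, w_out, l2=1e-4):
    """MSE over all samples and outputs plus an L2 penalty on output-layer weights."""
    mse = np.mean((np.asarray(y_pred) - np.asarray(y_true)) ** 2)
    return mse + l2 * np.sum(np.asarray(w_out) ** 2)


def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch): stop after `patience` epochs without improvement."""
    best, best_epoch, wait = np.inf, 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:  # validation loss still declining
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


print(early_stopping_epoch([1.0, 0.8, 0.7, 0.71, 0.72, 0.73], patience=3))
```

A common choice, assumed here rather than stated in the text, is to keep the weights from `best_epoch` rather than those from the final epoch.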

Table 2. Number of parameters of the online and offline ML-based error correction models for each ML model.

To achieve better training results, we further implement the following settings.

-

We adopt a running training strategy, using data from the 11 years preceding the test year to train the ML models. For instance, to develop error correction models for predictions in 2011 (a test set), we train the model using data from 2000 to 2009 and validate it with data from 2010. Similarly, for predictions in 2021, we use data from 2010 to 2019 for training and data from 2020 for validation. This approach ensures that the ML models leverage the most recent data while maintaining a clear separation between the training, validation, and test sets. The primary reason for using running training is the pronounced declining trend in Arctic sea ice over recent decades, which results in substantial differences between earlier ice conditions (e.g., the 1980s) and those of recent years (e.g., the 2010s). We also performed sensitivity studies (not shown) on the length of the running training set (e.g., the most recent 5 years or all years since 1980) and compared running training with fixed-period training (1992–2002). We found that using data from the most recent 11 years leads to the best ML performance and that running training outperforms fixed-period training.

-

The characteristics of model errors vary with the calendar month. For instance, the model errors mainly appear in the marginal ice zone in winter but span the entire sea-ice-covered region in summer. We therefore train a separate ML model for each calendar month. This results in 236 neural network models (one per test month from February 2003 to September 2022) for the online case. In the offline case, we also account for the start month, resulting in 836 (4 initialization months × 11 lead months × 19 test years) models. Despite the large number of models, training is highly efficient thanks to the simple architecture and low data dimensionality: each model takes only about 1 min to train on a CPU, which makes this exhaustive approach computationally affordable.

-

We train and apply the error correction models only at grid points where the total SIC exceeds 1 %. This avoids adding sea ice to open-water areas, which would cause dynamical inconsistencies. It also means that our correction models cannot create ice at a grid point where the model predicts ice-free conditions.
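The model counts quoted in the list above follow from simple bookkeeping, reproduced here as a check: one online model per test month from February 2003 to September 2022 inclusive, and one offline model per initialization month, lead month, and test year (2003–2021). The function names are illustrative only.

```python
def count_online_models(first=(2003, 2), last=(2022, 9)):
    """One online model per test month, counting both end months."""
    (y0, m0), (y1, m1) = first, last
    return (y1 - y0) * 12 + (m1 - m0) + 1


def count_offline_models(n_init_months=4, n_lead_months=11, n_test_years=19):
    """One offline model per initialization month, lead month, and test year."""
    return n_init_months * n_lead_months * n_test_years


print(count_online_models(), count_offline_models())  # 236 836
```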

2.6 Hindcast experiments

The standard hindcasts (hereafter referred to as Reference) are initialized from the reanalysis presented in Sect. 2.2 in the middle of January, April, July, and October each year from 1992 to 2021, with a duration of 12 months. From 1992 to 2002, the first 9 ensemble members of the 30-member reanalysis are used to initialize the hindcasts, while from 2003 onward, the first 10 ensemble members are used. These differences in ensemble size would have only a minimal impact on the results of this study.

A new set of hindcasts (hereafter referred to as OnlineML), similar to Reference but with the online error correction approach (Sect. 2.3), is initialized from the reanalysis in the middle of January, April, July, and October from 2003 to 2021. During each hindcast, NorCPM pauses in the middle of each lead month, uses the online error correction model to predict the model error, and then updates the instantaneous model state.

The offline error correction approach (Sect. 2.4) is applied to post-process the hindcasts of Reference (hereafter referred to as OfflineML).

2.7 Metrics for evaluation

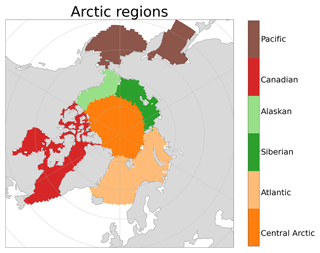

SIE is a commonly used metric in seasonal sea ice prediction (e.g., Bushuk et al., 2024). We evaluate the prediction skill of SIE in the pan-Arctic and six Arctic regions depicted in Fig. 2. These regional definitions adhere to the area definitions provided by Kimmritz et al. (2019), albeit with the consolidation of the original 14 sea areas into six regions that are very similar to the ones used in Bushuk et al. (2024). In this study, the SIE is defined as the total area of all grid points within the region of interest where SIC ≥15 %. SIE is calculated for each ensemble member, and we evaluate the ensemble mean by averaging SIE across all ensemble members.
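A minimal sketch of the SIE computation, assuming SIC in percent, a grid-cell area array, and an optional boolean region mask (e.g., one of the regions in Fig. 2); the function names are hypothetical. SIE is computed for each member before averaging, as described above. This generally differs from the SIE of the ensemble-mean SIC, because averaging SIC first can move cells across the 15 % threshold.

```python
import numpy as np


def sea_ice_extent(sic, cell_area, region_mask=None, threshold=15.0):
    """Total area of cells with SIC >= threshold (SIC in %).

    sic        : array (..., ny, nx), e.g. (n_members, ny, nx)
    cell_area  : array (ny, nx), grid-cell areas
    region_mask: optional boolean array (ny, nx) selecting a region
    """
    ice = np.asarray(sic) >= threshold
    if region_mask is not None:
        ice = ice & region_mask
    return np.sum(ice * cell_area, axis=(-2, -1))


def ensemble_mean_sie(sic_members, cell_area, region_mask=None):
    """SIE per member first, then the average across members."""
    return sea_ice_extent(sic_members, cell_area, region_mask).mean()


sic = np.array([[[20.0, 10.0], [15.0, 0.0]],
                [[50.0, 50.0], [50.0, 14.0]]])   # 2 members on a 2 x 2 grid
area = np.ones((2, 2))
print(ensemble_mean_sie(sic, area), sea_ice_extent(sic.mean(axis=0), area))
```

In this toy case the mean of the member SIEs (2.5 cells) is smaller than the SIE of the ensemble-mean SIC (3 cells), which illustrates why the order of averaging matters.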

Figure 2. Regional domain definitions for the central Arctic, Atlantic, Siberian, Alaskan, Canadian, and Pacific regions, based on the sea area definitions in Kimmritz et al. (2019) and similar to those used in Bushuk et al. (2024). Atlantic region: Greenland, Iceland, Norwegian, Barents, and Kara seas; Siberian region: Laptev and East Siberian seas; Alaskan region: Chukchi and Beaufort seas; Canadian region: Canadian Archipelago, Hudson Bay, Baffin Bay, and Labrador Sea; Pacific region: Bering Sea and Sea of Okhotsk.

To evaluate the performance of the ML-based error prediction models, we employ the mean absolute error (MAE), defined as

$$\mathrm{MAE} = \frac{1}{M}\sum_{i=1}^{M}\left|E_{p,i} - E_{t,i}\right|,$$

where $E_p$ denotes the predicted error and $E_t$ denotes the “true” error. In more detail, $E$ refers to the SIC error at each grid point over the entire evaluation period, and $M$ represents the total number of data points used in the MAE calculation.

To evaluate the sea ice prediction skill, we employ the root mean square error (RMSE):

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(X_{p,i} - X_{t,i}\right)^{2}},$$

where $X_p$ represents the prediction and $X_t$ represents the “truth” (i.e., the reanalysis in this study). Here, $X$ can refer to the integrated ice edge error (IIEE) on a pan-Arctic scale, the SIE on a pan-Arctic or regional scale, or the SIC at a specific grid point, and $N$ represents the number of hindcasts, spanning 2003 to 2021.

The IIEE is also a crucial metric for sea ice predictions (Goessling et al., 2016). It captures discrepancies along the ice edge by quantifying the total area where the prediction and the “truth” disagree on whether a grid cell is ice-covered. This makes the IIEE particularly valuable for evaluating the spatial accuracy of the ice edge location, offering insight into how well models reproduce the dynamic boundary between ice-covered and open-ocean regions. Following the definition of Goessling et al. (2016), the IIEE is computed as the area where the prediction and the “truth” disagree on SIC being above or below 15 %:

$$\mathrm{IIEE} = \sum_{j} A_j \left|c_{p,j} - c_{t,j}\right|,$$

where $A_j$ is the area of grid cell $j$, $c = 1$ where SIC is above 15 % and $c = 0$ elsewhere, and subscripts $p$ and $t$ denote the prediction and the “truth”. This definition is equivalent to the so-called symmetric difference between the areas enclosed by the predicted and “true” ice edges.
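The three metrics can be written compactly in NumPy. This is a sketch assuming the inputs are arrays on a common grid (SIC in percent, grid-cell areas in consistent units); for the IIEE it follows the strict “above 15 %” wording used here. Function names are illustrative.

```python
import numpy as np


def mae(err_pred, err_true):
    """Mean absolute difference between predicted and "true" errors."""
    return np.mean(np.abs(np.asarray(err_pred) - np.asarray(err_true)))


def rmse(x_pred, x_true):
    """Root mean square error over N hindcasts (or any set of cases)."""
    return np.sqrt(np.mean((np.asarray(x_pred) - np.asarray(x_true)) ** 2))


def iiee(sic_pred, sic_true, cell_area, threshold=15.0):
    """Area where prediction and truth disagree on SIC being above the threshold."""
    c_p = np.asarray(sic_pred) > threshold
    c_t = np.asarray(sic_true) > threshold
    return np.sum(np.asarray(cell_area) * (c_p != c_t))


print(iiee([[20.0, 10.0]], [[10.0, 10.0]], [[2.0, 3.0]]))
```

Because `c_p != c_t` is true both where only the prediction has ice and where only the truth has ice, the sum is the area of the symmetric difference described above.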

To evaluate the significance of prediction skill difference, we use a two-tailed Student's t test to compare the IIEE or the RMSE between two predictions.

To estimate the uncertainties in an RMSE value arising from the small ensemble size, we employ the bootstrap method. Specifically, we randomly sample 10 ensemble members with replacement from the ensemble, compute the ensemble mean, and then calculate the RMSE (for either SIC or SIE) based on these resampled data. This process is repeated 10 000 times, producing a distribution of 10 000 RMSE values. The standard deviation of this distribution is then used to quantify the uncertainties associated with the RMSE value.
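The bootstrap procedure can be sketched as follows, assuming the hindcasts are arranged as an array of shape (members, cases), where the cases are the N hindcast years for SIE or flattened grid points and years for SIC. The function name and array layout are assumptions; `n_draw=10` and `n_boot=10_000` follow the text.

```python
import numpy as np


def bootstrap_rmse_std(pred_members, truth, n_boot=10_000, n_draw=10, seed=0):
    """Standard deviation of the ensemble-mean RMSE under resampling of members.

    pred_members: array (n_members, n_cases) of member predictions
    truth       : array (n_cases,) of reanalysis values
    """
    pred_members = np.asarray(pred_members, dtype=float)
    truth = np.asarray(truth, dtype=float)
    rng = np.random.default_rng(seed)
    n_members = pred_members.shape[0]
    scores = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n_members, size=n_draw)  # draw members with replacement
        ens_mean = pred_members[idx].mean(axis=0)
        scores[i] = np.sqrt(np.mean((ens_mean - truth) ** 2))
    return scores.std()


members = np.random.default_rng(1).normal(size=(10, 19))  # synthetic: 10 members, 19 years
print(bootstrap_rmse_std(members, np.zeros(19)))
```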

3.1 Error correction model performance

We first demonstrate the performance of ML-based error correction models in predicting the model errors.

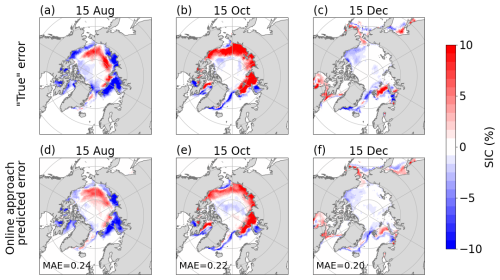

The “true” errors obtained from the analysis increments and the errors predicted by the online error correction model are averaged over 2003–2021 and displayed in Fig. 3. The spatial patterns of the “true” error vary significantly across dates. For instance, on 15 August, errors are predominantly negative across most regions because NorCPM underestimates SIC in this month, with some localized positive errors in the ice interior. The MAE across ice-covered grid points is 0.24 %. On 15 October, the errors are mostly positive because NorCPM overestimates SIC, and the MAE is 0.22 %. On 15 December, the errors appear primarily in the marginal ice zone and are smaller overall than in August and October; the MAE is 0.20 %. Notably, the MAE remains below 1 % in all cases.

Figure 3. (a–c) The “true” SIC errors in the middle of the month based on the analysis increments (i.e., the changes due to monthly DA in the reanalysis). (d–f) The errors predicted by the online error correction model. These errors are averaged over the period 2003–2021. Values in (d–f) are the MAE between the “true” and predicted errors across space.

For all those months and regions, the online error correction models can correctly predict the spatial pattern of the “true” error (Fig. 3). Also, the magnitude of the “true” error is well reproduced with a slight underestimation.

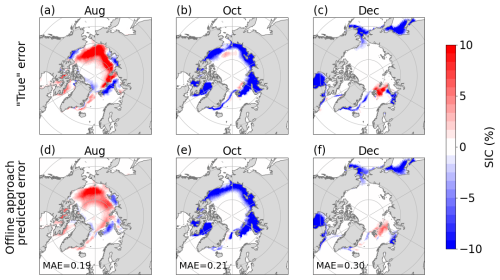

To assess the offline error correction model, we show its performance for hindcasts initialized in July (Fig. 4). The monthly “true” error patterns vary significantly across months. The offline error correction models effectively predict the spatial pattern of the “true” errors (Fig. 4), and the predicted error magnitude is similar to that of the “true” error, with only a slight underestimation. Notably, the MAE of the offline error correction approach is higher than that of the online error correction approach in December (0.30 % vs. 0.20 %), which can be attributed to the divergence of the model from the reanalysis over the months since initialization in July.

Figure 4. (a–c) The “true” errors of monthly SIC, estimated as the Reference hindcast initialized in July minus the reanalysis. (d–f) The errors predicted by the offline approach. The errors are averaged over the period 2003–2021. Values in (d–f) are the MAE between the “true” and predicted errors across space.
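The offline model is trained to predict the monthly error defined in Fig. 4 (Reference hindcast minus reanalysis) and is then applied as a post-processing step. The sketch below illustrates both steps under stated assumptions: the helper names are hypothetical, and clipping the corrected SIC to 0–100 % is an assumption of this sketch rather than a documented part of the study's method.

```python
import numpy as np


def offline_target(reference_hindcast, reanalysis):
    """'True' monthly SIC error (as in Fig. 4): Reference hindcast minus reanalysis."""
    return np.asarray(reference_hindcast, dtype=float) - np.asarray(reanalysis, dtype=float)


def apply_offline_correction(raw_monthly_sic, predicted_error):
    """Post-process a monthly SIC forecast by removing the predicted error.

    The error is defined as model minus reanalysis, so it is subtracted.
    Clipping to the physical range [0, 100] % is an assumption of this sketch.
    """
    corrected = np.asarray(raw_monthly_sic, dtype=float) - np.asarray(predicted_error, dtype=float)
    return np.clip(corrected, 0.0, 100.0)


reference = np.array([60.0, 10.0, 100.0])   # monthly SIC (%) from a raw hindcast
reanalysis = np.array([50.0, 20.0, 95.0])   # monthly SIC (%) from the reanalysis
target = offline_target(reference, reanalysis)  # training target for the ML model
# With a perfect error prediction, the corrected forecast equals the reanalysis.
corrected = apply_offline_correction(reference, target)
```

In practice the predicted error comes from the trained ML model rather than from the target itself, so the corrected field only approaches the reanalysis.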

In summary, the above results suggest that the ML-based error correction models in both online and offline scenarios can skillfully predict the large-scale spatial patterns of the SIC error but slightly underestimate its magnitude.

3.2 Application to seasonal predictions

3.2.1 Skill seasonality

This section assesses the three sets of hindcasts (Reference, OnlineML, and OfflineML) initialized in January, April, July, and October of each year from 2003 to 2021. The ensemble hindcasts are initialized from the first 10 members of the reanalyses and run for 11 months (Sect. 2.6).

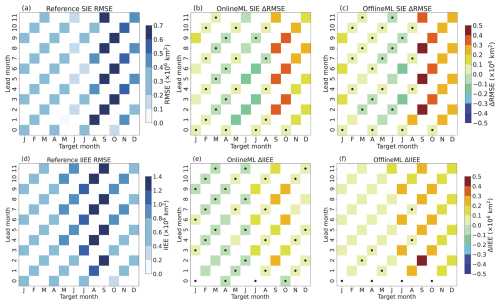

Figure 5 compares the RMSE of the pan-Arctic SIE and the IIEE of the ice edge across the three hindcast sets. The Reference hindcast shows a higher RMSE in September and October (Fig. 5a), which can be attributed to several model deficiencies documented by Bentsen et al. (2013). NorCPM overestimates Arctic cloudiness, its summer snowmelt is too slow, and its winds across the polar basin are slightly too weak. These deficiencies lead to sea ice that is too thick in the polar oceans and to excessive Arctic SIE, particularly in summer (Bentsen et al., 2013).

Figure 5. (a) RMSE of SIE for the Reference hindcast, (b) ΔRMSE between the Reference and OnlineML hindcasts, (c) ΔRMSE between the Reference and OfflineML hindcasts, (d) IIEE of the Reference hindcast, (e) ΔIIEE between the Reference and OnlineML hindcasts, and (f) ΔIIEE between the Reference and OfflineML hindcasts. In (b), (c), (e), and (f), warm colors (red/yellow) indicate that the OnlineML or OfflineML hindcast is better than the Reference hindcast, while cold colors (blue/green) indicate that it is worse. The black dots mark where the ΔRMSE or ΔIIEE does not pass the 95 % significance test.

Both the OnlineML and OfflineML hindcasts exhibit a small error reduction from January to July and a large error reduction from August to December (Fig. 5b and c). The OnlineML hindcast, in which only SIC, SST, and SSS are corrected without directly adjusting the atmospheric component, shows some improvements, particularly in January and from September to December. From February to August, in contrast, the Reference hindcast already performs well, and the differences are not significant. The OfflineML hindcast achieves a greater error reduction than the OnlineML hindcast, particularly in September, when the SIE prediction error is reduced by up to 75 % relative to the Reference hindcast. The primary reason is that the online approach corrects instantaneous model errors (on the 15th day of the month), but during the subsequent 1-month model integration, the sea ice component interacts dynamically with the other components and the errors grow again. As a result, the correction is damped in the monthly averaged model outputs. In contrast, the offline approach directly post-processes the monthly outputs without any model integration.
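The damping argument can be made concrete with a deliberately simple toy model, which is an illustration and not a description of NorCPM's dynamics. It assumes that the online correction removes the SIC error completely on the 15th and that the error then relaxes back toward the systematic bias with a hypothetical e-folding time `tau_days`, so that the monthly mean of the corrected run still retains part of the bias. The offline counterpart subtracts the monthly mean error directly.

```python
import math


def online_monthly_mean_error(bias, tau_days, window_days=30.0):
    """Toy model of the online approach (hypothetical, not NorCPM dynamics).

    The online correction sets the error to zero at the start of the
    1-month integration; afterwards, interactions with the other model
    components pull the error back toward the systematic bias:
        e(t) = bias * (1 - exp(-t / tau_days))
    Returns the mean of e(t) over the window (analytic integral).
    """
    r = tau_days / window_days
    return bias * (1.0 - r * (1.0 - math.exp(-window_days / tau_days)))


def offline_monthly_mean_error(bias, skill=1.0):
    """Toy offline approach: a fraction `skill` of the monthly-mean error is
    subtracted directly from the monthly output, with no model integration."""
    return bias * (1.0 - skill)


for tau in (5.0, 10.0, 30.0):
    print(f"tau = {tau:4.0f} d: online residual = {online_monthly_mean_error(10.0, tau):.2f}, "
          f"offline residual = {offline_monthly_mean_error(10.0):.2f}")
```

With a bias of 10 and `tau_days = 10` over a 30-day window, the online residual is about 6.83, so only about one third of the bias is removed from the monthly mean, whereas a perfect offline correction removes all of it. Faster error regrowth (smaller `tau_days`) leaves a larger residual, which is the qualitative behavior described above.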

The IIEE shows results similar to those for the RMSE of SIE (Fig. 5d–f). For the Reference hindcast, the IIEE is higher from July to September. The online approach yields some improvements over the Reference hindcast from July to December, but its error reduction is small or not significant in the other months. In contrast, the offline approach improves performance in nearly all months and achieves larger IIEE reductions than the online approach, particularly from June to January, with a maximum reduction exceeding 0.5 × 10⁶ km² relative to the Reference hindcast. By directly correcting the monthly mean outputs, the offline approach avoids losing part of the correction during the model integration, which leads to a larger error reduction.
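For reference, SIE, IIEE, and the relative RMSE reduction can be computed as sketched below. This assumes the conventional 15 % SIC threshold for ice presence and uses the IIEE definition of Goessling et al. (2016), namely the total area where the forecast and the verifying field disagree on whether ice is present; the function names and the toy grid are illustrative. The toy fields have identical SIE but a non-zero IIEE, which shows why the IIEE can reveal ice-edge errors that cancel in the extent.

```python
import numpy as np

THRESHOLD = 15.0  # SIC (%) defining ice presence; conventional value, assumed here


def sea_ice_extent(sic, cell_area):
    """Total area of grid cells with SIC >= THRESHOLD."""
    sic = np.asarray(sic, dtype=float)
    return float(np.sum(np.asarray(cell_area, dtype=float)[sic >= THRESHOLD]))


def iiee(sic_fc, sic_obs, cell_area):
    """Integrated ice-edge error: total area where forecast and verification
    disagree on ice presence (overestimated plus underestimated ice area)."""
    ice_fc = np.asarray(sic_fc, dtype=float) >= THRESHOLD
    ice_obs = np.asarray(sic_obs, dtype=float) >= THRESHOLD
    return float(np.sum(np.asarray(cell_area, dtype=float)[ice_fc != ice_obs]))


def rmse(forecast, truth):
    """RMSE over a sample, e.g. SIE for one target month across 2003-2021."""
    diff = np.asarray(forecast, dtype=float) - np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def percent_reduction(rmse_ref, rmse_new):
    """Relative error reduction (%) of a corrected hindcast vs. the Reference."""
    return 100.0 * (rmse_ref - rmse_new) / rmse_ref


# Toy 2 x 2 grid with equal cell areas (arbitrary area units, made-up values).
area = np.full((2, 2), 0.5)
fc = np.array([[20.0, 10.0], [50.0, 0.0]])
obs = np.array([[20.0, 30.0], [10.0, 0.0]])
```

A 75 % reduction, as reported for September, corresponds to `percent_reduction(rmse_ref, rmse_new)` returning 75 when the corrected RMSE is one quarter of the Reference RMSE.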

In summary, the Reference hindcast exhibits relatively large prediction errors from August to October, primarily due to increased model uncertainties associated with atmospheric forcing and sea ice processes. The offline approach outperforms the online approach in reducing both the RMSE of SIE and the IIEE, particularly during the high-error months. For example, in September, the RMSE of SIE is reduced by 75 % and the IIEE by more than 0.5 × 10⁶ km² compared to the Reference hindcast.

3.2.2 Skill of seasonal predictions for different regions

The previous section highlighted significant improvements in predictions, primarily from September to January, regardless of the initialization month. In this section, we focus on the hindcasts initialized in July and show the performance for different regions for both SIE and SIC. We choose July because summer sea ice is a critical climate change indicator and affects ecosystems and human activities, and its prediction presents a significant scientific challenge due to its high variability (Fig. 5a). For validation of the other initialization months, please refer to Figs. S1–S4 in the Supplement.

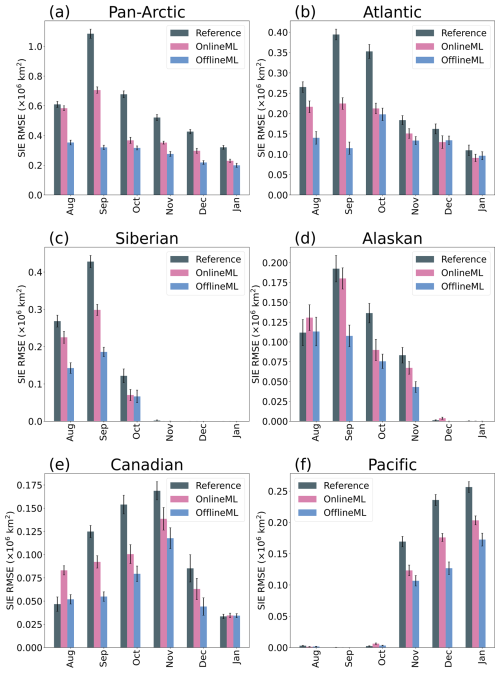

We first investigate the seasonal prediction skill for the pan-Arctic SIE and for the SIE in the subregions defined in Fig. 2. For the pan-Arctic SIE, previously assessed in Fig. 5, both the OnlineML and OfflineML hindcasts reduce the RMSE (Fig. 6a). The RMSE of the OnlineML hindcast has a strong seasonality similar to that of the Reference hindcast: higher in August, September, and October and lower in November, December, and January. The OfflineML hindcast has the lowest RMSE, with a reduction of about 75 % compared to the Reference hindcast in September.

Figure 6. RMSE of SIE in the pan-Arctic and five subregions for the Reference hindcast (gray bar), the OfflineML hindcast (blue bar), and the OnlineML hindcast (purple bar). Error bars represent the uncertainties.

Both error correction approaches reduce the RMSE of regional SIE, and the offline approach overall outperforms the online approach (Fig. 6b–f). In the Atlantic region (Fig. 6b), a significant RMSE reduction is observed during the first 3 months, until October. The OfflineML hindcast has the lowest RMSE until September and an RMSE similar to that of the OnlineML hindcast from October onward. In the Siberian region (Fig. 6c), the RMSE reduction due to error correction is significant only until October and becomes almost zero from November onward because the region is then fully covered by sea ice. The OfflineML hindcast is significantly better than the OnlineML hindcast until September and similar afterward. In the Alaskan region (Fig. 6d), there is no significant RMSE reduction in August, but significant reductions occur from September to November. In December and January, the region is almost fully covered by sea ice, leading to very small RMSEs in all three hindcast experiments. In the Canadian region (Fig. 6e), both approaches lead to significant RMSE reductions from September to December, and the offline approach outperforms the online approach. However, the online approach yields a significantly larger RMSE than the Reference hindcast in August. In the Pacific region (Fig. 6f), the RMSE is close to zero from August to October due to very limited sea ice coverage. The two error correction approaches lead to significant RMSE reductions after November, and the offline approach outperforms the online approach in December and January.
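The regional scores in Fig. 6 restrict the extent computation to each subregion. A minimal sketch follows, assuming an integer label per grid cell for the subregions of Fig. 2 and a 15 % SIC threshold for ice presence; the labels, the two-region toy grid, and the function name are hypothetical. In the example, the fully ice-covered "Siberian" cells yield an RMSE of exactly zero, mirroring the near-zero regional errors reported when a region is fully covered by sea ice.

```python
import numpy as np


def regional_sie_rmse(sic_fc, sic_truth, cell_area, region_id, regions, threshold=15.0):
    """RMSE of regional SIE for one target month.

    sic_fc, sic_truth: (n_years, ny, nx) monthly SIC in %.
    region_id: (ny, nx) integer label per grid cell (hypothetical encoding
    of the subregions); regions maps a region name to its label.
    """
    sic_fc = np.asarray(sic_fc, dtype=float)
    sic_truth = np.asarray(sic_truth, dtype=float)
    region_id = np.asarray(region_id)
    out = {}
    for name, label in regions.items():
        # Cell areas inside the region, zero elsewhere; broadcast over years.
        weights = np.where(region_id == label, cell_area, 0.0)
        sie_fc = np.sum((sic_fc >= threshold) * weights, axis=(1, 2))
        sie_truth = np.sum((sic_truth >= threshold) * weights, axis=(1, 2))
        out[name] = float(np.sqrt(np.mean((sie_fc - sie_truth) ** 2)))
    return out


region_id = np.array([[1, 1], [2, 2]])
regions = {"Atlantic": 1, "Siberian": 2}  # hypothetical labels
area = np.ones((2, 2))
fc = np.array([[[20.0, 20.0], [90.0, 90.0]],
               [[0.0, 0.0], [95.0, 95.0]]])
truth = np.array([[[20.0, 0.0], [90.0, 90.0]],
                  [[0.0, 0.0], [95.0, 95.0]]])
scores = regional_sie_rmse(fc, truth, area, region_id, regions)
```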

Notably, in August, the RMSE of the OnlineML hindcast exceeds that of the Reference hindcast in both the Alaskan and Canadian regions. This is primarily due to the systematic underestimation of SIE by the OnlineML hindcast relative to both the Reference hindcast and the “truth” in these regions (Fig. S5 in the Supplement). The underlying causes of this systematic underestimation, however, warrant further investigation.

The offline approach outperforms the online approach across all regions, primarily because the online correction targets instantaneous model errors (i.e., those on the 15th day of each month). These corrected errors may reemerge through interactions with the other components of the coupled model system, thereby diminishing the overall impact of the online error correction when evaluated using monthly averaged outputs. In contrast, the offline approach directly adjusts the monthly model outputs, which aligns closely with the evaluation metrics used in this study. Moreover, the offline approach does not need to run the dynamical model and is computationally cheaper than the online approach. However, the online approach not only reduces SIC errors but also propagates corrections through the model integration to the other variables (e.g., sea ice thickness and sea ice drift), ensuring physical consistency between the predicted variables.

In summary, although the error correction performance varies by region and target month, both approaches improve the sea ice prediction overall. In addition, the offline approach is more effective than the online approach in reducing the SIE RMSE for both the pan-Arctic and the subregions. These conclusions also hold for seasonal predictions initialized in the other seasons. For details, please refer to Figs. S1–S4.

We take a closer look at the spatial aspects of the offline error correction approach in hindcasts initialized in July (Fig. 7). We specifically focus on identifying local areas where the error correction leads to improvements that may not be evident when examining SIE alone.

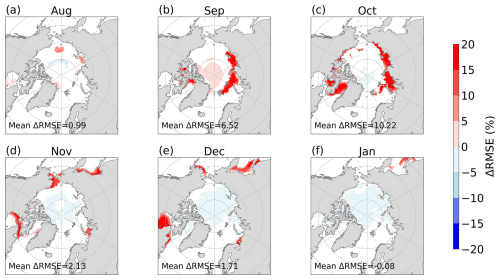

Figure 7. Differences in SIC RMSE between the Reference and OfflineML hindcasts initialized in July. Warmer (colder) colors indicate that the OfflineML hindcast outperforms (underperforms) the Reference hindcast. White areas indicate differences that are not statistically significant. The value in each panel represents the average ΔRMSE for statistically significant grid points.

The impact of the error correction on SIC is most pronounced near the ice edge (Fig. 7). In August, only a few grid points in the Siberian and Atlantic regions show improvements (Fig. 7a), with an average improvement of 0.99 %. In September, notable improvements appear across the central Arctic and the Atlantic, Siberian, and Canadian regions (Fig. 7b), with an average improvement of 6.52 %. In October, significant improvements are observed in the Atlantic and Canadian regions, reaching an average of 10.22 %. Additionally, some blue areas emerge in the central Arctic, indicating a statistically significant degradation relative to the Reference hindcast, although its magnitude is small. In November and December, the positive impact of the error correction is concentrated mainly in Hudson Bay and the Sea of Okhotsk. However, noise increases in the central Arctic, and the average improvement declines to 2.13 % in November and 1.71 % in December. In January, widespread blue areas in the central Arctic result in a slightly negative average improvement of −0.08 %.
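The panel values in Fig. 7 are averages of the grid-point ΔRMSE over statistically significant points. A minimal sketch, assuming SIC arrays of shape (years, ny, nx) for one target month: the significance mask is taken as an input because the test used for Fig. 7 is not reproduced here, and the unweighted mean over grid points is an assumption (an area-weighted mean would be a natural alternative). The function names are hypothetical.

```python
import numpy as np


def delta_rmse_map(sic_ref, sic_ml, sic_truth):
    """Per-grid-point dRMSE = RMSE(Reference) - RMSE(OfflineML), computed over
    the years (axis 0). Positive values mean the corrected hindcast is better."""
    sic_ref, sic_ml, sic_truth = (np.asarray(a, dtype=float) for a in (sic_ref, sic_ml, sic_truth))
    rmse_ref = np.sqrt(np.mean((sic_ref - sic_truth) ** 2, axis=0))
    rmse_ml = np.sqrt(np.mean((sic_ml - sic_truth) ** 2, axis=0))
    return rmse_ref - rmse_ml


def mean_over_significant(delta, significant):
    """Average dRMSE over grid points flagged as significant. The boolean mask
    is supplied by the caller; no particular significance test is implied."""
    delta = np.asarray(delta, dtype=float)
    significant = np.asarray(significant, dtype=bool)
    if not significant.any():
        return float("nan")
    return float(delta[significant].mean())


# Toy example: two years on a 1 x 2 grid (values are made up).
truth = np.zeros((2, 1, 2))
ref = np.array([[[10.0, 2.0]], [[10.0, 2.0]]])
ml = np.array([[[2.0, 4.0]], [[2.0, 4.0]]])
delta = delta_rmse_map(ref, ml, truth)  # one improved and one degraded grid point
```

Averaging only over significant points means that a few significant degradations (blue areas) can pull the panel value below zero, as reported for January.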

The major improvements in SIC are found near the ice edge, which is closely associated with SIE. This spatial distribution shows how the ML-based error correction approaches can enhance model performance in different regions, particularly during the ice-advance season, when using SIE as a metric might obscure these localized gains. In addition, the OfflineML and OnlineML hindcasts exhibit similar spatial error distributions. For specific details about the OnlineML hindcast, please refer to Fig. S6 in the Supplement.

In this study, we apply ML within NorCPM, a fully coupled Earth system model, to improve seasonal Arctic sea ice predictions with both online and offline error correction. In the online approach, ML corrects errors in the instantaneous model state in the middle of each month, which then serves as the initial condition for the subsequent model integration. The offline approach post-processes the monthly sea ice predictions.

The approaches proposed in this study integrate ML with a dynamical modeling framework, with the primary objective of reducing the intrinsic prediction errors of the dynamical model itself. Unlike purely data-driven models (e.g., Andersson et al., 2021; Ren et al., 2025; Kim et al., 2025), which are typically designed for the statistical prediction of specific sea ice properties, ML here aims to improve the overall performance of a dynamical prediction system, which ensures physical consistency among a large number of predicted variables.

Our results demonstrate that both the online and offline ML-based error correction models predict the spatial distribution of errors well, although they slightly underestimate the error amplitude. Applying the two approaches to seasonal Arctic sea ice predictions initialized in January, April, July, and October, we find that both reduce the SIE and IIEE prediction errors compared to the raw predictions without error correction. The improvements vary with the lead month, with particularly notable enhancements in predictions for August to October.

By comparing the two error correction approaches, we find that the offline approach yields smaller errors than the online approach. The online error correction approach corrects instantaneous model errors only on the 15th day of the month, and the effect of this correction gradually weakens during model integration due to the accumulation of errors in the other model components. Consequently, the impact of the correction becomes less evident when computing monthly averaged outputs. Nevertheless, the online error correction can reduce errors in SST and SSS (Figs. S7 and S8 in the Supplement). Moreover, the online correction approach maintains better physical consistency among the predicted variables through dynamical model integration. The offline error correction approach directly corrects the model outputs without requiring model integration. As a result, it is computationally more efficient and easier to integrate into operational sea ice prediction systems than the online approach.

It is important to note that the proposed approaches still have room for improvement. In this study, we use only ocean and sea ice variables as input features. Including atmospheric variables could help address errors arising from both dynamic and thermodynamic processes and further improve performance. Increasing the frequency of the online correction could also enhance its effectiveness (Gregory et al., 2024), but this is challenging in practice because analysis increments in NorCPM are currently available only once a month. An alternative strategy is to train hybrid models that combine ML with dynamical models, which has been shown to be effective in other systems (Farchi et al., 2021). However, this approach requires the gradient of the dynamical model, which cannot currently be computed in NorCPM.

Furthermore, the current ML model (an MLP) is trained independently at each grid point and thus cannot capture spatial correlations. This limits its ability to correct spatially coherent errors, particularly in regions where NorCPM already performs well and only subtle adjustments are needed. As a result, the hybrid model often struggles to reproduce the reanalysis, which is treated as the “truth” in this study. While it is unrealistic to expect the model to replicate the analysis increments perfectly, the discrepancy is closely related to the learning capacity of the ML model and the nature of the underlying errors. Possible contributing factors include (1) the lack of spatial dependencies due to pointwise training and (2) the tendency of models trained on long-term data to learn systematic biases rather than instantaneous random errors, the latter of which tend to be averaged out over time. Future studies could explore spatially aware architectures, such as convolutional neural networks (CNNs) and U-Nets, and incorporate additional predictors to capture complex error structures and enhance correction performance (Palerme et al., 2024).
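The limitation of pointwise training can be seen directly in code. The sketch below applies a small MLP to the feature vector of every grid point; the layer sizes, the three input predictors, and the random weights are hypothetical stand-ins for a trained model, and the weights are shared across grid points here for brevity. Whether one model is trained per grid point or shared, the key property is the same: perturbing the inputs at one grid point cannot change the prediction at any other point, so spatial correlations in the errors cannot be exploited.

```python
import numpy as np


def init_mlp(n_in, n_hidden, n_out, seed=0):
    """Random weights standing in for a trained model (hypothetical sizes)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def mlp_pointwise(params, features):
    """Map features of shape (ny, nx, n_in) to predicted errors (ny, nx, n_out).

    The matrix products act on the last axis only, so each grid point's
    prediction depends on that point's own feature vector and nothing else.
    """
    hidden = np.maximum(0.0, features @ params["W1"] + params["b1"])  # ReLU
    return hidden @ params["W2"] + params["b2"]


params = init_mlp(n_in=3, n_hidden=16, n_out=1)
x = np.random.default_rng(1).normal(size=(4, 5, 3))  # 3 hypothetical local predictors
y = mlp_pointwise(params, x)

# Perturb the inputs at a single grid point and see which outputs change.
x_perturbed = x.copy()
x_perturbed[0, 0] += 10.0
y_perturbed = mlp_pointwise(params, x_perturbed)
changed = ~np.isclose(y_perturbed, y).all(axis=-1)  # (ny, nx) mask of affected points
```

A convolutional model would instead combine features from neighboring grid points, so the same perturbation would also affect nearby predictions.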

The code and data to plot each figure are available at https://doi.org/10.5281/zenodo.14533027 (He, 2025). For access to the full hindcast dataset, please contact Yiguo Wang (yiguo.wang@nersc.no).

The supplement related to this article is available online at https://doi.org/10.5194/tc-19-3279-2025-supplement.

Conceptualization: ZH, YW, JB. Analysis and visualization: ZH. Interpretation of results: ZH, YW, JB. Writing (original draft): ZH, YW. Writing (reviewing and editing original draft): ZH, YW, JB, XW, ZS.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This study was funded by the National Natural Science Foundation of China (42225602), the National Key R&D Program of China (2022YFE0106400), the Postgraduate Research and Practice Innovation Program of Jiangsu Province (KYCX23_0657), the China Scholarship Council (202206710071), the Special Funds for Creative Research (2022C61540), and the Opening Project of the Key Laboratory of Marine Environmental Information Technology (521037412). Julien Brajard was funded by the TARDIS project, funded by the Research Council of Norway (under grant no. 325241). Yiguo Wang was funded by the Norges Forskningsråd (under grant no. 328886) and the Trond Mohn stiftelse (under grant no. BFS2018TMT01). This work also received grants for computer time from the Norwegian program for supercomputing (NN9039K) and storage grants (NS9039K). Zheqi Shen was funded by the National Natural Science Foundation of China (42176003). The authors thank the editor and the three anonymous reviewers for their valuable comments. They also thank Marianne Williams-Kerslake for her valuable assistance in improving the English writing on an earlier version of this paper.

This research has been supported by the National Natural Science Foundation of China (grant nos. 42225602 and 42176003), the National Key Research and Development Program of China (grant no. 2022YFE0106400), the Jiangsu Provincial Department of Education (grant no. KYCX23_0657), the China Scholarship Council (grant no. 202206710071), the Norges Forskningsråd (grant nos. 328886, 325241), and the Trond Mohn stiftelse (grant no. BFS2018TMT01).

This paper was edited by Bin Cheng and reviewed by three anonymous referees.

Andersson, T. R., Hosking, J. S., Pérez-Ortiz, M., Paige, B., Elliott, A., Russell, C., Law, S., Jones, D. C., Wilkinson, J., Phillips, T., Byrne, J., Tietsche, S., Sarojini, B. B., Blanchard-Wrigglesworth, E., Aksenov, Y., Downie, R., and Shuckburgh, E.: Seasonal Arctic sea ice forecasting with probabilistic deep learning, Nat. Commun., 12, 5124, https://doi.org/10.1038/s41467-021-25257-4, 2021.

Bentsen, M., Bethke, I., Debernard, J. B., Iversen, T., Kirkevåg, A., Seland, Ø., Drange, H., Roelandt, C., Seierstad, I. A., Hoose, C., and Kristjánsson, J. E.: The Norwegian Earth System Model, NorESM1-M – Part 1: Description and basic evaluation of the physical climate, Geosci. Model Dev., 6, 687–720, https://doi.org/10.5194/gmd-6-687-2013, 2013.

Bethke, I., Wang, Y., Counillon, F., Keenlyside, N., Kimmritz, M., Fransner, F., Samuelsen, A., Langehaug, H., Svendsen, L., Chiu, P.-G., Passos, L., Bentsen, M., Guo, C., Gupta, A., Tjiputra, J., Kirkevåg, A., Olivié, D., Seland, Ø., Solsvik Vågane, J., Fan, Y., and Eldevik, T.: NorCPM1 and its contribution to CMIP6 DCPP, Geosci. Model Dev., 14, 7073–7116, https://doi.org/10.5194/gmd-14-7073-2021, 2021.

Blanchard-Wrigglesworth, E., Barthelemy, A., Chevallier, M., Cullather, R., Fuckar, N., Massonnet, F., Posey, P., Wang, W., Zhang, J., Ardilouze, C., Bitz, C. M., Vernieres, G., Wallcraft, A., and Wang, M.: Multi-model seasonal forecast of Arctic sea-ice: forecast uncertainty at pan-Arctic and regional scales, Clim. Dynam., 49, 1399–1410, 2017.

Bleck, R., Dean, S., O'Keefe, M., and Sawdey, A.: A comparison of data-parallel and message-passing versions of the Miami Isopycnic Coordinate Ocean Model (MICOM), Parallel Comput., 21, 1695–1720, 1995.

Blockley, E. W. and Peterson, K. A.: Improving Met Office seasonal predictions of Arctic sea ice using assimilation of CryoSat-2 thickness, The Cryosphere, 12, 3419–3438, https://doi.org/10.5194/tc-12-3419-2018, 2018.

Brajard, J., Carrassi, A., Bocquet, M., and Bertino, L.: Combining data assimilation and machine learning to infer unresolved scale parametrization, Philos. T. Roy. Soc. A, 379, 20200086, https://doi.org/10.1098/rsta.2020.0086, 2021.

Bushuk, M., Ali, S., Bailey, D. A., Bao, Q., Batte, L., Bhatt, U. S., Blanchard-Wrigglesworth, E., Blockley, E., Cawley, G., Chi, J., Counillon, F., Coulombe, P. G., Cullather, R. I., Diebold, F. X., Dirkson, A., Exarchou, E., Goebel, M., Gregory, W., Guemas, V., Hamilton, L., He, B., Horvath, S., Ionita, M., Kay, J. E., Kim, E., Kimura, N., Kondrashov, D., Labe, Z. M., Lee, W., Lee, Y. J., Li, C., Li, X., Lin, Y., Liu, Y., Maslowski, W., Massonnet, F., Meier, W. N., Merryfield, W. J., Myint, H., Navarro, J. C. A., Petty, A., Qiao, F., Schroeder, D., Schweiger, A., Shu, Q., Sigmond, M., Steele, M., Stroeve, J., Sun, N., Tietsche, S., Tsamados, M., Wang, K., Wang, J., Wang, W., Wang, Y., Wang, Y., Williams, J., Yang, Q., Yuan, X., Zhang, J., and Zhang, Y.: Predicting September Arctic sea ice: a multi-model seasonal skill comparison, B. Am. Meteorol. Soc., 105, E1170–E1203, https://doi.org/10.1175/BAMS-D-23-0163.1, 2024.

Carrassi, A., Weber, R. J. T., Guemas, V., Doblas-Reyes, F. J., Asif, M., and Volpi, D.: Full-field and anomaly initialization using a low-order climate model: a comparison and proposals for advanced formulations, Nonlin. Processes Geophys., 21, 521–537, https://doi.org/10.5194/npg-21-521-2014, 2014.

Carrassi, A., Bocquet, M., Bertino, L., and Evensen, G.: Data assimilation in the geosciences: an overview of methods, issues, and perspectives, WIREs Clim. Change, 9, e535, https://doi.org/10.1002/wcc.535, 2018.

Counillon, F., Bethke, I., Keenlyside, N., Bentsen, M., Bertino, L., and Zheng, F.: Seasonal-to-decadal predictions with the ensemble Kalman filter and the Norwegian Earth System Model: a twin experiment, Tellus A, 66, 21074, https://doi.org/10.3402/tellusa.v66.21074, 2014.

Counillon, F., Keenlyside, N., Bethke, I., Wang, Y., Billeau, S., Shen, M. L., and Bentsen, M.: Flow-dependent assimilation of sea surface temperature in isopycnal coordinates with the Norwegian Climate Prediction Model, Tellus A, 68, 32437, https://doi.org/10.3402/tellusa.v68.32437, 2016.

Craig, A. P., Vertenstein, M., and Jacob, R.: A new flexible coupler for earth system modeling developed for CCSM4 and CESM1, Int. J. High Perform. C., 26, 31–42, 2012.

De Cruz, L., Demaeyer, J., and Vannitsem, S.: The Modular Arbitrary-Order Ocean-Atmosphere Model: MAOOAM v1.0, Geosci. Model Dev., 9, 2793–2808, https://doi.org/10.5194/gmd-9-2793-2016, 2016.

Dee, D. P., Uppala, S. M., Simmons, A. J., Berrisford, P., Poli, P., Kobayashi, S., Andrae, U., Balmaseda, M. A., Balsamo, G., Bauer, P., Bechtold, P., Beljaars, A. C. M., van de Berg, L., Bidlot, J., Bormann, N., Delsol, C., Dragani, R., Fuentes, M., Geer, A. J., Haimberger, L., Healy, S. B., Hersbach, H., Holm, E. V., Isaksen, L., Kallberg, P., Koehler, M., Matricardi, M., McNally, A. P., Monge-Sanz, B. M., Morcrette, J. J., Park, B. K., Peubey, C., de Rosnay, P., Tavolato, C., Thepaut, J. N., and Vitart, F.: The ERA-Interim reanalysis: configuration and performance of the data assimilation system, Q. J. Roy. Meteor. Soc., 137, 553–597, 2011.

Farchi, A., Laloyaux, P., Bonavita, M., and Bocquet, M.: Using machine learning to correct model error in data assimilation and forecast applications, Q. J. Roy. Meteor. Soc., 147, 3067–3084, 2021.

Gent, P. R., Danabasoglu, G., Donner, L. J., Holland, M. M., Hunke, E. C., Jayne, S. R., Lawrence, D. M., Neale, R. B., Rasch, P. J., Vertenstein, M., Worley, P. H., Yang, Z.-L., and Zhang, M.: The community climate system model version 4, J. Climate, 24, 4973–4991, 2011.

Goessling, H. F., Tietsche, S., Day, J. J., Hawkins, E., and Jung, T.: Predictability of the Arctic sea ice edge, Geophys. Res. Lett., 43, 1642–1650, https://doi.org/10.1002/2015GL067232, 2016.

Good, S. A., Martin, M. J., and Rayner, N. A.: EN4: quality controlled ocean temperature and salinity profiles and monthly objective analyses with uncertainty estimates, J. Geophys. Res.-Oceans, 118, 6704–6716, 2013.

Gregory, W., Bushuk, M., Zhang, Y., Adcroft, A., and Zanna, L.: Machine learning for online sea ice bias correction within global ice-ocean simulations, Geophys. Res. Lett., 51, e2023GL106776, https://doi.org/10.1029/2023GL106776, 2024.

He, Z.: Code and data for NorCPM, Zenodo [code and data set], https://doi.org/10.5281/zenodo.14533027, 2025.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horanyi, A., Munoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Holm, E., Janiskova, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thepaut, J.-N.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, 2020.

Heuzé, C. and Jahn, A.: The first ice-free day in the Arctic Ocean could occur before 2030, Nat. Commun., 15, 1–10, 2024.

Holland, M. M., Bailey, D. A., Briegleb, B. P., Light, B., and Hunke, E.: Improved sea ice shortwave radiation physics in CCSM4: the impact of melt ponds and aerosols on Arctic sea ice, J. Climate, 25, 1413–1430, 2012.

Ioffe, S.: Batch renormalization: Towards reducing minibatch dependence in batch-normalized models, arXiv [preprint], https://doi.org/10.48550/arXiv.1702.03275, 2017.

Jia, X., Willard, J., Karpatne, A., Read, J., Zwart, J., Steinbach, M., and Kumar, V.: Physics guided RNNs for modeling dynamical systems: A case study in simulating lake temperature profiles, in: Proceedings of the 2019 SIAM international conference on data mining, Calgary, Alberta, Canada, 2–4 May 2019, SIAM, 558–566, https://doi.org/10.1137/1.9781611975673.63, 2019.

Jung, T., Gordon, N. D., Bauer, P., Bromwich, D. H., Chevallier, M., Day, J. J., Dawson, J., Doblas-Reyes, F., Fairall, C., Goessling, H. F., Holland, M., Inoue, J., Iversen, T., Klebe, S., Lemke, P., Losch, M., Makshtas, A., Mills, B., Nurmi, P., Perovich, D., Reid, P., Renfrew, I. A., Smith, G., Svensson, G., Tolstykh, M., and Yang, Q.: Advancing polar prediction capabilities on daily to seasonal time scales, B. Am. Meteorol. Soc., 97, 1631–1647, 2016.

Kim, Y. J., Kim, H.-c., Han, D., Stroeve, J., and Im, J.: Long-term prediction of Arctic sea ice concentrations using deep learning: effects of surface temperature, radiation, and wind conditions, Remote Sens. Environ., 318, 114568, https://doi.org/10.1016/j.rse.2024.114568, 2025.

Kimmritz, M., Counillon, F., Bitz, C., Massonnet, F., Bethke, I., and Gao, Y.: Optimising assimilation of sea ice concentration in an Earth system model with a multicategory sea ice model, Tellus A, 70, 1–23, 2018.

Kimmritz, M., Counillon, F., Smedsrud, L. H., Bethke, I., Keenlyside, N., Ogawa, F., and Wang, Y.: Impact of ocean and sea ice initialisation on seasonal prediction skill in the Arctic, J. Adv. Model. Earth Sy., 11, 4147–4166, 2019.

Kirkevåg, A., Grini, A., Olivié, D., Seland, Ø., Alterskjær, K., Hummel, M., Karset, I. H. H., Lewinschal, A., Liu, X., Makkonen, R., Bethke, I., Griesfeller, J., Schulz, M., and Iversen, T.: A production-tagged aerosol module for Earth system models, OsloAero5.3 – extensions and updates for CAM5.3-Oslo, Geosci. Model Dev., 11, 3945–3982, https://doi.org/10.5194/gmd-11-3945-2018, 2018.

Laloyaux, P., de Boisseson, E., Balmaseda, M., Bidlot, J.-R., Broennimann, S., Buizza, R., Dalhgren, P., Dee, D., Haimberger, L., Hersbach, H., Kosaka, Y., Martin, M., Poli, P., Rayner, N., Rustemeier, E., and Schepers, D.: CERA-20C: a coupled reanalysis of the twentieth century, J. Adv. Model. Earth Sy., 10, 1172–1195, 2018.

Lawrence, D. M., Oleson, K. W., Flanner, M. G., Thornton, P. E., Swenson, S. C., Lawrence, P. J., Zeng, X., Yang, Z.-L., Levis, S., Sakaguchi, K., Bonan, G. B., and Slater, A. G.: Parameterization improvements and functional and structural advances in version 4 of the Community Land Model, J. Adv. Model. Earth Sy., 3, M03001, https://doi.org/10.1029/2011MS00045, 2011.

Onarheim, I. H., Eldevik, T., Smedsrud, L. H., and Stroeve, J. C.: Seasonal and regional manifestation of Arctic sea ice loss, J. Climate, 31, 4917–4932, 2018.

Palerme, C., Lavergne, T., Rusin, J., Melsom, A., Brajard, J., Kvanum, A. F., Macdonald Sørensen, A., Bertino, L., and Müller, M.: Improving short-term sea ice concentration forecasts using deep learning, The Cryosphere, 18, 2161–2176, https://doi.org/10.5194/tc-18-2161-2024, 2024.

Penny, S. G. and Hamill, T. M.: Coupled data assimilation for integrated earth system analysis and prediction, B. Am. Meteorol. Soc., 98, ES169–ES172, 2017.

Ren, Y., Li, X., and Wang, Y.: SICNetseason V1.0: a transformer-based deep learning model for seasonal Arctic sea ice prediction by incorporating sea ice thickness data, Geosci. Model Dev., 18, 2665–2678, https://doi.org/10.5194/gmd-18-2665-2025, 2025.

Reynolds, R. W., Smith, T. M., Liu, C., Chelton, D. B., Casey, K. S., and Schlax, M. G.: Daily high-resolution-blended analyses for sea surface temperature, J. Climate, 20, 5473–5496, 2007.

Saha, S., Nadiga, S., Thiaw, C., Wang, J., Wang, W., Zhang, Q., Van den Dool, H. M., Pan, H. L., Moorthi, S., Behringer, D., Stokes, D., Pena, M., Lord, S., White, G., Ebisuzaki, W., Peng, P., and Xie, P.: The NCEP climate forecast system, J. Climate, 19, 3483–3517, 2006.

Sakov, P. and Oke, P. R.: A deterministic formulation of the ensemble Kalman filter: an alternative to ensemble square root filters, Tellus A, 60, 361–371, 2008.

Serreze, M. C., Holland, M. M., and Stroeve, J.: Perspectives on the Arctic's shrinking sea-ice cover, Science, 315, 1533–1536, 2007. a

Stroeve, J. C., Markus, T., Boisvert, L., Miller, J., and Barrett, A.: Changes in Arctic melt season and implications for sea ice loss, Geophys. Res. Lett., 41, 1216–1225, 2014. a

Taylor, K. E., Stouffer, R. J., and Meehl, G. A.: An overview of CMIP5 and the experiment design, B. Am. Meteorol. Soc., 93, 485–498, 2012. a

Thornton, E.: Technical Description of version 4.0 of the Community Land Model (CLM), NCAR, Climate and Global, Electronic ISSN (2153-2400), https://www.researchgate.net/publication/261439256_Technical_Description_of_version_40_of_the_Community_Land_Model_CLM (last access: 22 August 2025), 2010. a

Titchner, H. A. and Rayner, N. A.: The Met Office Hadley Centre sea ice and sea surface temperature data set, version 2: 1. Sea ice concentrations, J. Geophys. Res.-Atmos., 119, 2864–2889, 2014. a

Vitart, F., Ardilouze, C., Bonet, A., Brookshaw, A., Chen, M., Codorean, C., Déqué, M., Ferranti, L., Fucile, E., Fuentes, M., Hendon, H., Hodgson, J., Kang, H.-S., Kumar, A., Lin, H., Liu, G., Liu, X., Malguzzi, P., Mallas, I., Manoussakis, M., Mastrangelo, D., MacLachlan, C., McLean, P., Minami, A., Mladek, R., Nakazawa, T., Najm, S., Nie, Y., Rixen, M., Robertson, A. W., Ruti, P., Sun, C., Takaya, Y., Tolstykh, M., Venuti, F., Waliser, D., Woolnough, S., Wu, T., Won, D.-J., Xiao, H., Zaripov, R., and Zhang, L.: The subseasonal to seasonal (S2S) prediction project database, B. Am. Meteorol. Soc., 98, 163–173, https://doi.org/10.1175/BAMS-D-16-0017.1, 2017. a

Wagner, P. M., Hughes, N., Bourbonnais, P., Stroeve, J., Rabenstein, L., Bhatt, U., Little, J., Wiggins, H., and Fleming, A.: Sea-ice information and forecast needs for industry maritime stakeholders, Polar Geography, 43, 160–187, 2020.

Wang, W., Chen, M., and Kumar, A.: Seasonal prediction of Arctic sea ice extent from a coupled dynamical forecast system, Mon. Weather Rev., 141, 1375–1394, 2013.

Wang, X., Liu, Y., Key, J. R., and Dworak, R.: A new perspective on four decades of changes in Arctic sea ice from satellite observations, Remote Sens.-Basel, 14, 1846, https://doi.org/10.3390/rs14081846, 2022.

Wang, Y., Counillon, F., and Bertino, L.: Alleviating the bias induced by the linear analysis update with an isopycnal ocean model, Q. J. Roy. Meteor. Soc., 142, 1064–1074, 2016.

Wang, Y., Counillon, F., Bethke, I., Keenlyside, N., Bocquet, M., and Shen, M.-l.: Optimising assimilation of hydrographic profiles into isopycnal ocean models with ensemble data assimilation, Ocean Model., 114, 33–44, 2017.

Wang, Y., Counillon, F., Keenlyside, N., Svendsen, L., Gleixner, S., Kimmritz, M., Dai, P., and Gao, Y.: Seasonal predictions initialised by assimilating sea surface temperature observations with the EnKF, Clim. Dynam., 53, 5777–5797, 2019.

Watson, P. A.: Applying machine learning to improve simulations of a chaotic dynamical system using empirical error correction, J. Adv. Model. Earth Sy., 11, 1402–1417, 2019.

Watt-Meyer, O., Brenowitz, N. D., Clark, S. K., Henn, B., Kwa, A., McGibbon, J., Perkins, W. A., and Bretherton, C. S.: Correcting weather and climate models by machine learning nudged historical simulations, Geophys. Res. Lett., 48, e2021GL092555, https://doi.org/10.1029/2021GL092555, 2021.

Weber, R. J., Carrassi, A., and Doblas-Reyes, F. J.: Linking the anomaly initialization approach to the mapping paradigm: a proof-of-concept study, Mon. Weather Rev., 143, 4695–4713, 2015.

Yang, Z., Liu, J., Yang, C.-Y., and Hu, Y.: Correcting nonstationary sea surface temperature bias in NCEP CFSv2 using ensemble-based neural networks, J. Atmos. Ocean. Tech., 40, 885–896, 2023.

Zuo, H., Balmaseda, M. A., Tietsche, S., Mogensen, K., and Mayer, M.: The ECMWF operational ensemble reanalysis–analysis system for ocean and sea ice: a description of the system and assessment, Ocean Sci., 15, 779–808, 2019.