the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Towards a webcam-based snow cover monitoring network: methodology and evaluation

Céline Portenier

Fabia Hüsler

Stefan Härer

Stefan Wunderle

Snow cover variability has a significant impact on climate and the environment and is of great socioeconomic importance for the European Alps. Terrestrial photography offers a high potential to monitor snow cover variability, but its application is often limited to small catchment scales. Here, we present a semiautomatic procedure to derive snow cover maps from publicly available webcam images in the Swiss Alps and propose a procedure for the georectification and snow classification of such images. In order to avoid the effort of manually setting ground control points (GCPs) for each webcam, we implement a novel registration approach that automatically resolves camera parameters (camera orientation; principal point; field of view, FOV) by using an estimate of the webcams' positions and a high-resolution digital elevation model (DEM). Furthermore, we propose an automatic image-to-image alignment to correct small changes in camera orientation and compare and analyze two recent snow classification methods. The resulting snow cover maps indicate whether a DEM grid is snow-covered, snow-free, or not visible from webcams' positions. GCPs are used to evaluate our novel automatic image registration approach. The evaluation reveals a root mean square error (RMSE) of 14.1 m for standard lens webcams (FOV<48∘) and a RMSE of 36.3 m for wide-angle lens webcams (FOV≥48∘). In addition, we discuss projection uncertainties caused by the mapping of low-resolution webcam images onto the high-resolution DEM. Overall, our results highlight the potential of our method to build up a webcam-based snow cover monitoring network.

- Article

(15290 KB) - Full-text XML

- BibTeX

- EndNote

Snow is an essential natural resource. Because snow has a much higher albedo compared to other natural land surfaces, its areal extent plays an important role in the Earth's energy balance. In alpine regions, snow plays a key role in the hydrologic cycle. It acts as water storage and accounts for a substantial portion of the total runoff. Information about spatial and temporal snow distribution is therefore essential for monitoring water resources and predicting runoff (Jonas et al., 2009), and it is of crucial importance for water supply and hydropower production. In addition, seasonal snow cover not only plays an important role for the development of ecosystems but has a high economic value for winter tourism as well.

Most commonly used methods to monitor snow cover variability are based on in situ measurements and satellite remote sensing. In situ measurements, e.g., from ground-based monitoring networks, provide accurate and long time series of local snow sites and can be used, for example, for long-term trend analyses (e.g., Laternser and Schneebeli, 2003; Marty, 2008; Klein et al., 2016). These measurements, however, might not capture the spatial variability of snow cover. In contrast to in situ measurements, remote sensing data can provide spatially comprehensive information on snow cover extent. In particular, optical remote sensing is widely used to study snow cover variability (e.g., Foppa and Seiz, 2012; Hüsler et al., 2012; Metsämäki et al., 2012; Wunderle et al., 2016). The main limiting factor of optical remote sensing techniques is cloud coverage. According to Dumont and Gascoin (2016), the yearly average of pixels hidden by clouds is about 50 % in the Pyrenees and 60 % in the Austrian Alps. In addition, large uncertainties exist in shadowed or forested areas. Moreover, the sensor resolution (e.g., 250 m or 1.1 km resolution of the MODIS and AVHRR sensor respectively) may limit the capture of small-scale variability of snow cover, especially in complex, mountainous terrain. The emergence of new techniques based on airborne digital photogrammetry and terrestrial photography enables us to extract snow cover information with high spatial and temporal resolutions. Unmanned areal systems (UASs) enable the generation of high-resolution digital surface models that can be used to map the small-scale variability of snow depth (e.g., Bühler et al., 2016; De Michele et al., 2016). However, UASs are often associated with high costs, and their spatial coverage and temporal resolution is limited. In addition, weather constraints due to strong winds or precipitation can restrict the use of UASs, especially at high elevations.

In this work, we suggest the use of publicly available webcam images and present a semiautomatic procedure to generate snow cover maps from such images. This work builds on and extends the master's thesis by Dizerens (2015). We focus on the Swiss Alps, where several thousands of public outdoor webcams are readily available online, resulting in a relatively dense sampling to study snow cover variability over a large area. Webcams are a cost-effective and efficient way to monitor snow cover variability in mountainous regions at high spatiotemporal scales. Most webcams offer images within a 1-hourly to 10 min interval. The spatial resolution depends on the image resolution, a webcam's field of view (FOV), the distance of the terrain to the webcam, and the slope and orientation of the terrain (see Sect. 5 for an in-depth discussion). Webcams may offer detailed analyses of snow cover on steep slopes due to their oblique view on the mountains. Moreover, webcams can provide snow cover information even under cloudy weather conditions as long as cloud cover and fog do not disturb the view on the ground. Therefore, webcams offer an unique potential to complement and evaluate satellite-derived snow information. For instance, Piazzi et al. (2019) have shown that webcam images can be leveraged to assess the consistency of Sentinel-2 snow cover information. However, the areal coverage of webcam-based snow cover information depends on the number of cameras used, their FOV, and their positioning in the field. In addition, public webcams provide images in the visible spectrum only and with varying image quality, which makes an accurate classification of snow cover challenging.

Terrestrial photography is an increasingly used observation method in different research disciplines such as glaciology (e.g., Corripio, 2004; Dumont et al., 2011; Huss et al., 2013; Messerli and Grinsted, 2015) and snow cover studies (e.g., Schmidt et al., 2009; Farinotti et al., 2010; Härer et al., 2013, 2016; Pimentel et al., 2014; Liu et al., 2015; Fedorov et al., 2016; Revuelto et al., 2016; Arslan et al., 2017; Millet et al., 2018). However, most of these studies use single cameras and thus are limited in areal coverage. In particular, they either require known camera parameters (i.e., extrinsic and intrinsic camera parameters such as the camera orientation or the FOV of the camera) or require significant manual user input (e.g., ground control points, GCPs) to georectify terrestrial photography. Since camera parameters are not readily available for public webcams, and manually setting GCPs for a large number of cameras is time-consuming, these methods are of limited application for our purposes. Therefore, we implement a processing scheme that minimizes manual user input by automation. Our georectification approach registers a webcam image with a digital elevation model (DEM). This image-to-DEM registration automatically resolves the required webcam parameters, such as the camera's orientation and its FOV, by using an estimate of the webcam's position only.

In literature, many different snow classification techniques exist to detect snow cover in terrestrial camera images. Some studies determine the snow-covered area using manual interpretation (Farinotti et al., 2010; Liu et al., 2015) or by manually selecting appropriate threshold values for each single image (Schmidt et al., 2009) or for a set of images (Floyd and Weiler, 2008). On the other hand, many automatic approaches exists as well, such as methods applying image clustering techniques (Pimentel et al., 2014; Millet et al., 2018; Rüfenacht et al., 2014), applying other statistical methods (e.g., Salvatori et al., 2011; Härer et al., 2016), or using supervised learning classifiers (Fedorov et al., 2016) to distinguish snow from snow-free areas. The main challenge of these methods is to detect snow cover in shadowing areas or to differentiate between dark, shadowed snow pixel, and other canopy pixels such as bright rock surfaces (Rüfenacht et al., 2014; Härer et al., 2016; Arslan et al., 2017; Manninen and Jääskeläinen, 2018). The study of Härer et al. (2016) tackles the problem of undetected snow cover in shadowing regions. Härer et al. (2016) propose applying the blue-band classification by Salvatori et al. (2011) and subsequently using principal component analysis (PCA) to separate shaded snow cover from sunlit rock surfaces. Recently, Fedorov et al. (2016) propose training machine learning models to classify snow cover in terrestrial camera images. While Fedorov et al. (2016) report superior performance to handcrafted methods on data that are sufficiently similar to the training data, such models do not generalize well to data that deviate significantly from the training data. Moreover, acquiring data suitable for training such models is expensive, since it requires us to label every single pixel in a set of training images by hand. In this study, we test and compare the snow classification approaches proposed by Salvatori et al. (2011) and Härer et al. (2016) within our framework. Combined with an automatic image-to-image alignment to correct small changes in the camera orientation, our procedure can be applied to webcam images to generate snow cover maps with a minimal effort. To assess the accuracy of our automatic snow cover mapping, we analyze and evaluate the components of the processing chain with a focus on automatic image-to-DEM registration.

This work is organized as follows: in Sect. 2, the webcam data, DEM, and orthophoto used in this work are described. In Sect. 3, we present the proposed methods of our procedure. Qualitative examples of snow cover maps and a comparison of the applied snow classification methods are shown in Sect. 4, followed by a detailed evaluation of the mapping accuracy in Sect. 5. Finally, we discuss the advantages and limitations of our procedure (Sect. 6), before concluding in Sect. 7.

2.1 Webcam images

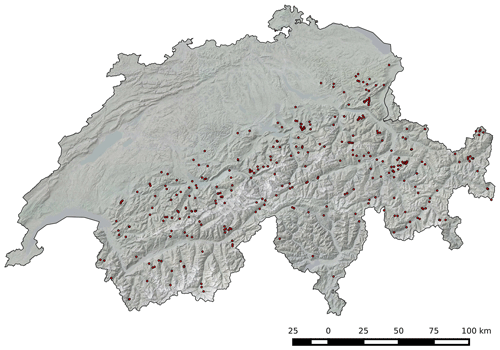

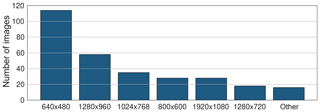

The website http://www.kaikowetter.ch/ (last access: 22 Apri l2020) offers a network of about 520 outdoor webcams observing the current snow conditions in and around Switzerland. Most of these webcams were installed by mountain railway operators, restaurants, hotels, and private citizens. Since November 2011, we have been archiving one image per day of each webcam from this website and have been extending our archive continuously with webcam images from other web sources. To apply our procedure to a given webcam, two requirements have to be fulfilled: the mountain silhouette has to be visible on the webcam image; i.e., it is not obscured by trees or buildings. About 70 % of all the webcams provided by http://www.kaikowetter.ch/ satisfy this requirement. The other approximately 30 % can not be used due to obstacles between silhouette and webcams or since no mountain silhouette is visible at all. In addition, the location of a webcam has to be known. Up to now, we have manually estimated the locations of 297 webcams in the Swiss Alps (see Fig. 1). They are located at elevations ranging from 800 m to 3900 m a.s.l. The pixel resolution of these webcam images ranges from 640 pixels × 480 pixels up to 1920 pixels × 1080 pixels (see Fig. 2).

2.2 Swiss geodata

We use the swissALTI3D DEM and the orthophoto SWISSIMAGE, produced by the Swiss Federal Office of Topography (swisstopo, 2013a, b). The DEM covers Switzerland and Liechtenstein and has a spatial resolution of 2 m. It was created using airborne laser scanning data (below 2000 m a.s.l.) or stereocorrelation of areal photographs (above 2000 m a.s.l.) and features an accuracy of 0.5 m and 1 to 3 m on average, respectively. The orthophoto SWISSIMAGE is composed of digital aerial orthophotographs of Switzerland, featuring a spatial resolution of 0.1 m in the Swiss lowlands and 0.25 m in the Swiss Alps.

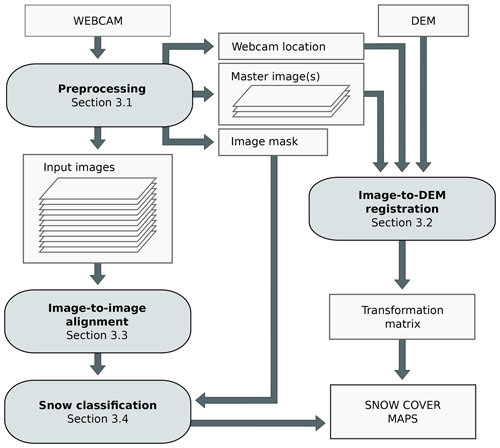

The proposed procedure consists of four major steps: preprocessing, automatic image-to-DEM registration, automatic image-to-image alignment, and automatic snow classification (see Fig. 3 for an overview). In the preprocessing step (Sect. 3.1), manual user input is required to estimate the webcam's location, to select a representative image for image-to-DEM registration (hereafter referred as master image), and to provide an image mask. Second, the selected master image is automatically registered with the DEM to derive the unknown camera parameters, such as orientation and FOV of the webcam (Sect. 3.2). Successful image-to-DEM registration results in a transformation matrix that relates each pixel of the master image to its 3D coordinates. Since an image series of a webcam is usually not perfectly aligned, we automatically align images to the selected master image (Sect. 3.3). This enables the use of the same transformation matrix for all webcam images. Finally, each image is automatically snow-classified (Sect. 3.4). Using the transformation matrix, a georeferenced snow cover map can be generated.

Figure 3Overview of the proposed procedure. It consists of four major steps: preprocessing, automatic image-to-DEM registration, automatic image-to-image alignment, and automatic snow classification. Image-to-DEM registration results in a transformation matrix that is used to project the snow-classified pixels onto a map.

3.1 Preprocessing

First, a webcam's location and its installation height above ground is estimated manually. This is achieved by considering the position of objects visible in the webcam image, the orthophoto SWISSIMAGE, and additional information provided by the webcam owner (e.g., the name of a restaurant where the webcam is mounted). In some cases, touristic photographs and images from Google Street View help to improve the location estimation. As mentioned in Sect. 2, we have estimated the locations of 297 webcams (see Fig. 1). Next, at least one master image per webcam is selected. This image has to be representative for all other images of the same webcam and should feature high contrast between the mountains and the sky for automatic image-to-DEM registration. Under clear-sky conditions, most webcam images are suited to serve as master image. Finally, a so-called input mask can be prepared to define image regions that should be ignored in the snow map generation procedure. Such regions can be trees, buildings, or other fixed infrastructure and are defined on the master image.

3.2 Automatic image-to-DEM registration

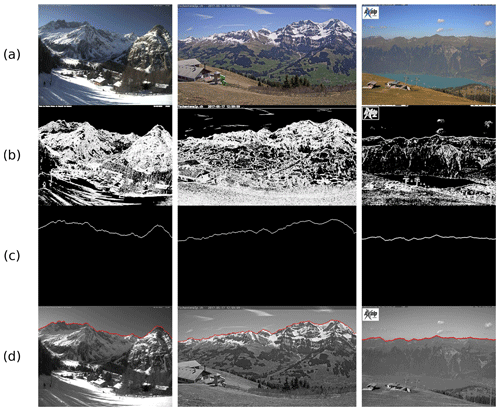

The registration of an image with a DEM requires a common feature space. As in the study of Baboud et al. (2011) and Fedorov et al. (2016), we make use of mountain silhouettes, which are among the most salient structural features in mountainous natural environments. Gaussian filtering and Sobel edge detection are applied to the master image to reduce noise and extract the structural features from the images. Next, the mountain silhouette is automatically detected from the edge image (see Fig. 4). Our silhouette extraction is based on the assumption that the mountain silhouette is the uppermost edge line that spans the full width of the image. It starts at the top left pixel in the edge image and looks for the first edge pixel in the first column. Once a pixel is found, the algorithm iteratively searches in a 7 pixel × 7 pixel neighborhood for other edge pixels until a continuous line is found that spans the full width of the image. If no such edge line is found, the algorithm starts again at the next edge pixel in the first column of the image.

Figure 4Three examples of automatic silhouette extraction. (a) Webcam images, (b) extracted edges using Sobel edge detection, (c) detected mountain silhouettes, and (d) mountain silhouettes (red) superimposed on grayscale webcam images. Webcam images (left to right): La Fouly (© Pays du St. Bernard, #lafouy, #mypsb), Tschentenalp (© Verein Trainingszentrum Adelboden, https://www.adelboden.ch/de/s/home, last access: 23 March 2020), and Axalp (© Sportbahnen Axalp Windegg AG, 3855 Axalp).

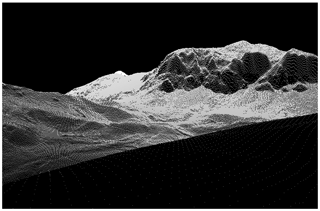

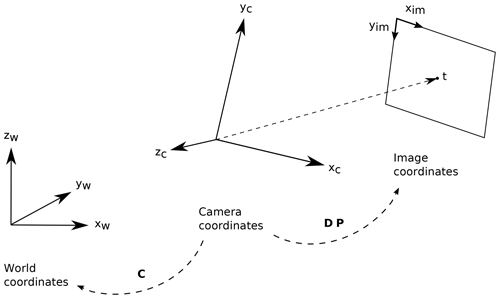

To derive the unknown camera parameters, the extracted mountain silhouette is registered with mountain silhouettes extracted from virtually rendered DEM images. These DEM images are generated by projecting the DEM point cloud from its world coordinate system via a camera coordinate system to an image coordinate system (see Figs. 5 and 6) by using a pinhole camera model. To reduce the computational complexity, only DEM points that are visible from the point of view of the webcam are considered. For this purpose, the viewshed generation module of the Photo Rectification And ClassificaTIon SoftwarE (PRACTISE V.1.0; Härer et al., 2013) is used to generate a 360∘ visibility map from the point of view of the webcam. The projected DEM points p′ of the virtual DEM image are computed by multiplying the visible DEM points p by the inverse of a camera matrix C, a perspective projection matrix P, and a viewport matrix D:

Figure 5Sample rendering of the swissALTI3D digital elevation model (DEM) using a pinhole camera model. DEM data © swisstopo.

Figure 6World, camera, and image coordinate systems and their transformations using camera matrix C, perspective projection matrix P, and viewport matrix D.

The camera matrix C transforms from camera coordinates to world coordinates and is defined by extrinsic camera parameters, i.e., the camera's location and orientation with respect to the known world reference frame. It is given by

where cop is the camera's location and xc, yc, and zc are the three vectors of the camera coordinate system that define its orientation, i.e., the roll, pitch, and yaw angle. The perspective projection matrix P transforms objects into canonic view volume (i.e., a cube) so that the image points are normalized view coordinates in the range . It is defined by intrinsic camera parameters and is given by

where a is the image aspect ratio and “near” and “far” are the distances to a near and a far plane that limit the infinite viewing volume. To finally transform to pixel coordinates , the viewport matrix, given by

is applied. It scales the projected pixels to a certain image size and translates them such that the origin of the image coordinate system is at the upper left corner. Since we use homogeneous coordinates, we apply perspective division to obtain pixel coordinates. Using this camera model, virtual DEM images can be generated by sampling the unknown parameters (i.e., the three orientation vectors xc, yc, and zc of the camera and the FOV).

To estimate the ground truth camera parameters, we propose a silhouette matching procedure. Similar to before, the mountain silhouettes are extracted from the rendered DEM images using the method described above. Given two silhouettes, i.e., the master image silhouette and a silhouette extracted from a sampled DEM rendering, we define a score function based on 2D cross correlation to quantify how well the two silhouettes match:

where w1 is the normalized maximum response of cross correlation, and w2 is the normalized image space offset defined by the distance between the pixel location of the maximum response and the image center. The final score is the weighted sum using a user-defined parameter α. To estimate the camera parameters, we seek for the parameters that maximize this score.

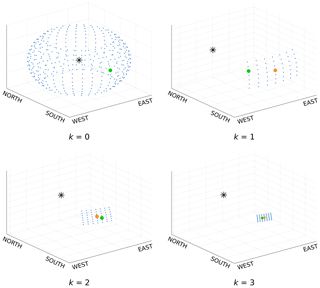

To efficiently search for the best matching silhouette pair, silhouette matching is performed on multiple scales k. On each scale, the algorithm rotates the camera coordinate system horizontally and vertically (see Fig. 7) and searches for the highest score. On scale i, the estimated parameters of scale i−1 are used as initialization and the camera coordinate system is rotated nx times around the z coordinate of the world coordinate system and ny times around the x axis of the camera coordinate system. On scale k=0, the parameters are initialized randomly. The horizontal and vertical rotation steps are called strides sx and sy respectively. On scale k=0, we set an initial stride of sx=360∘∕nx (with nx=20) and sy=90∘∕ny (with ny=12). For all scales k>0, the horizontal and vertical strides are recursively defined by

Figure 7Viewing directions (blue points) of a camera (asterisk) during image-to-DEM registration. The green dots indicate the viewing directions with the best score, and the orange dots indicate the best viewing directions of the previous scale. An example is shown for vertical and horizontal rotations from scale k=0 to k=3.

To approximate the roll angle of the camera, we additionally rotate the x coordinate of the camera matrix on each scale m=5 times around the viewing direction once the image space offset w2 is smaller than 10 pixels. An initial stride of sm=3∘∕m is set and decreases each scale by

Instead of estimating the FOV manually, our procedure can also optimize the FOV of the webcam by first iterating the horizontal FOV of 30 by 5∘ to a FOV of 90∘ in scale k=0. The best matching silhouette pair defines the initial FOV estimate. Once the image space offset w2 is smaller than 20 pixels, the FOV can be estimated more accurately by evaluating different FOVs at each iteration: the FOV is iterated at each viewing direction f=5 times around the initial FOV with an initial stride sFOV=2∘, decreasing each scale by

The weighting parameter α (Eq. 5) is a function of scale k. On scale k=0, we set α=1, such that the final score is mainly determined by the maximum response of cross correlation w1. The normalized image space offset w2 is ignored, since it would mainly correspond to an offset of a wrongly matched silhouette pair. w2 becomes important for scales k>0, once the viewing direction estimate is reasonably accurate. The smaller the distance of the maximum response to the image center, the better the two silhouettes match. Therefore, α is set to a low value (0.1). Once the roll angle and FOV is resolved, both measures, w1 and w2, are set equally (α=0.5), since both the smallest offset and the highest response value have to be estimated.

To find the best score efficiently, the virtual DEM images are rendered with a lower resolution in the first scales. Starting with a width of and height of in scale k=0, the width and height are doubled until the original image size is reached in scale k=3. Experiments have shown that image-to-DEM registration requires around 12 scales until the best matching silhouette pair with an image space offset of 0 is found. This best matching silhouette pair results in a transformation matrix that relates each pixel of the master image to its real 3D coordinates.

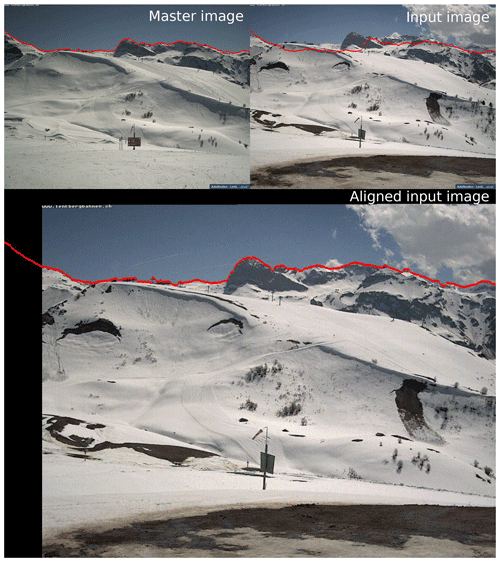

3.3 Automatic image-to-image alignment

Most webcams are exposed to wind that may lead to small changes in the camera orientation. Moreover, for a few webcams, major variations in orientation can occur due to human interaction, intentionally or unintentionally. While small orientation changes may occur every day, we observe major camera movements rarely, at most monthly. Because image-to-DEM registration is computationally expensive and mountain silhouettes cannot be detected on each webcam image due to cloud cover or low contrast conditions, each webcam image is automatically aligned to its master image by solving for a homography H. A homography is a projective transformation between two images with the same camera position but different orientation and is used to relate the two images so that they can be aligned.

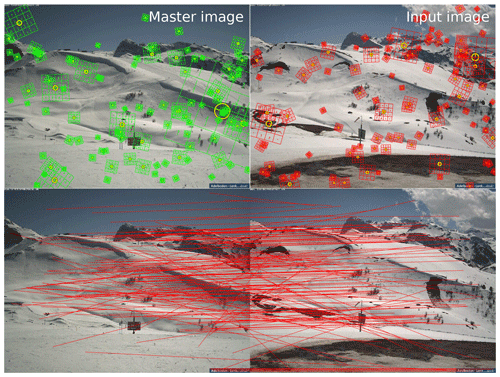

We use the scale-invariant feature transform (SIFT; Lowe, 2004) to detect structural features in a webcam image and its corresponding master image. It transforms an image into a collection of local feature vectors that consist of a SIFT keypoint (image location) and a SIFT descriptor that is highly distinctive and invariant to illumination, position, and scale. After the feature detection, the features are matched across the two images (see Fig. 8). The similarity between two feature vectors is given by their Euclidean distance. Since the number of potential matching features can be quite large, we approximate this distance using an algorithm called “best bin first” (see Lowe, 2004). We use the SIFT implementation from the open-source library VLFeat (Vedaldi and Fulkerson, 2010).

Figure 8SIFT features of a master image and an input image and corresponding matches between all features. To simplify the illustration, we show a subset of 100 randomly selected SIFT features per image. Webcam images: Hahnenmoos, © Skiregion Adelboden-Lenk.

A homography H is a 3×3 matrix. Since scale is arbitrary, H has eight unknown parameters. Therefore, at least four point correspondences (x∕y image coordinates) are needed to solve for H. Since not all matched pairs are correct, the homography is estimated using the best matching feature points. For this purpose, we use the robust fitting model RANdom SAmple Consensus (RANSAC; Fischler and Bolles, 1981). RANSAC randomly selects four pairs of corresponding points to calculate the homography, transforms all points from one image to the other using the found homography, and searches for the solution that has the best agreement with all remaining matching pairs. This best agreement is found by calculating the mapping error between each transformed SIFT point of an input image and its corresponding SIFT point of the master image. To eliminate the bias towards any particular set of points, the best matching image-to-image alignment is achieved by recalculating the homography using all features with a small mapping error of the best homography found by RANSAC. Figure 9 shows an example of an image that is aligned to a corresponding master image.

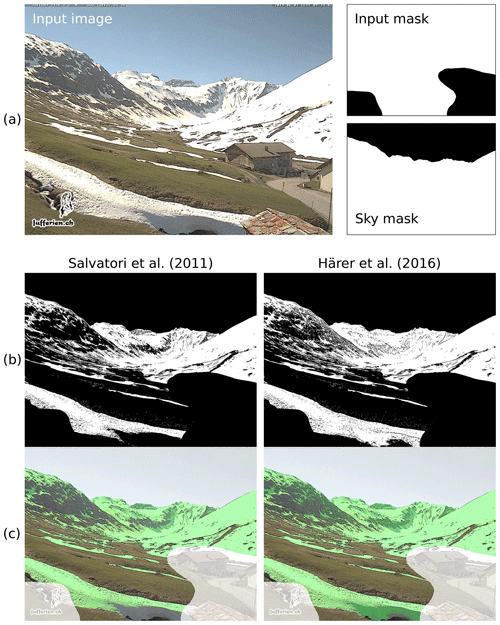

3.4 Automatic snow classification

We perform snow classification experiments using the methods proposed by Salvatori et al. (2011) and Härer et al. (2016). The method by Salvatori et al. (2011) analyzes the blue-band digital number frequency histogram to set a snow threshold DNb. First, the frequency histogram is smoothed using a moving average window of 5. The snow threshold is then automatically selected at the histogram's first local minimum above or equal to the intensity value 127. If no local minimum is found, the snow threshold is set to the value 127. All pixel values equal or higher than this threshold value are classified as snow, whereas lower values are classified as snow-free.

Figure 10Example of a webcam image that is masked for subsequent snow classification using an input mask and a sky mask derived from the extracted mountain silhouette (a). Snow classification is applied using the methods by Salvatori et al. (2011) and Härer et al. (2016). Detected snow is shown (b) in white in the binary output image (black: no snow or masked out) and (c) as a transparent green layer on the original webcam image (white transparent layer: masked region). Webcam image: Juf, © jufferien.ch.

The second method is a snow classification routine included in PRACTISE V.2.1 (Härer et al., 2016). Since the method by Salvatori et al. (2011) only works reasonably well for nonshadowing areas (Härer et al., 2016; Arslan et al., 2017), this routine additionally detects snow in the shaded regions of an image. As a first step, the method of Härer et al. (2016) applies the blue-band classification proposed by Salvatori et al. (2011). In a second step, Härer et al. (2016) refine snow classification using PCA for separating shaded snow cover from sunlit rock surfaces. Standardized RGB values in PCA space (PC score matrix) are calculated by multiplying the standardized RGB values (mean of 0 and standard deviation of 1) with the principal component (PC) coefficient matrix (calculated using singular value decomposition). The PC score matrix is normalized by scaling its values between 0 and 1. The first PC explains the largest variance in the data, but its frequency histogram is essentially identical to the blue-band frequency histogram. Therefore, Härer et al. (2016) use the frequency histograms of the second and third PC (PC2 and PC3) for separating shaded snow cover from other surfaces. The pixels are classified as snow if the following two conditions are fulfilled:

DNh is an additional condition to exclude very dark pixel values in the blue-band channel (values<63) since Härer et al. (2016) identified them as prone to snow misclassification. Moreover, blue-band values with a higher value than DNb are not considered either since they have been already identified as snow cover by the blue-band classification in the first step. As a third and fourth step, the method additionally identifies sunny rocks and calculates snow probability values for all the pixels that were not classified as snow in the first three steps.

We apply this snow classification by classifying all pixels detected in the first and second step as “snow” and the remaining pixel values as “no snow”. A snow classification example is shown in Fig. 10. The snow classification takes as input a webcam image, the corresponding input mask described in Sect. 3.1, and a sky mask where all sky pixels are automatically masked out using the mountain silhouette extracted from the master image (see Fig. 10a). The detected snow pixels by the method of Salvatori et al. (2011) and Härer et al. (2016) are shown in white (Fig. 10b) and as a green transparent layer (Fig. 10c).

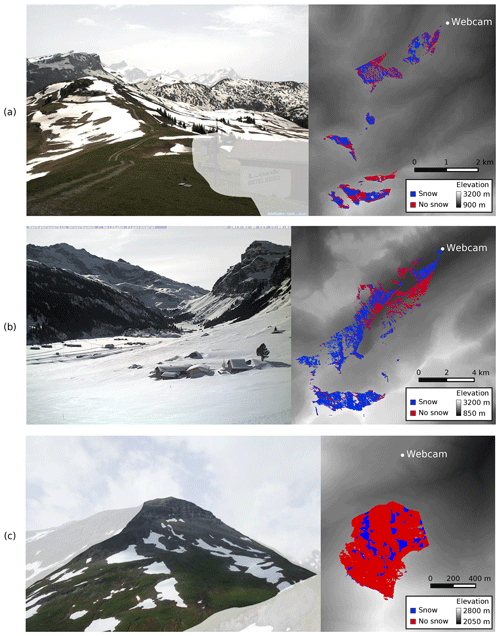

The transformation matrix found for each master image is used to generate a lookup table that relates all visible DEM grid cells to the associated image pixel. For each DEM grid cell in this lookup table, the associated classification result (i.e., “snow” or “no snow” of the classified webcam image pixels) is set, which results in a snow cover map of 2 m spatial resolution. Figure 11 shows three webcam images and resulting snow cover maps in the (a) Lenk, (b) Urnerboden, and (c) Furka Pass regions.

Figure 11Example webcam images and resulting snow cover maps of three webcams: (a) Lenk Betelberg (© Skiregion Adelboden-Lenk), (b) Urnerboden (© Verkehrsverein Urnerboden, Seilbahngenossenschaft Urnerboden-Fisetengrat), and (c) Blauberg Furka Pass (© Armin Rist, Institute of Geography, University of Bern). Snow is classified using the method proposed by Salvatori et al. (2011). The white transparent layer on the webcam images shows the masked regions. The grayscale values of the snow cover maps show the swissALTI3D elevation values of the area that is not visible from the webcam's location (© swisstopo).

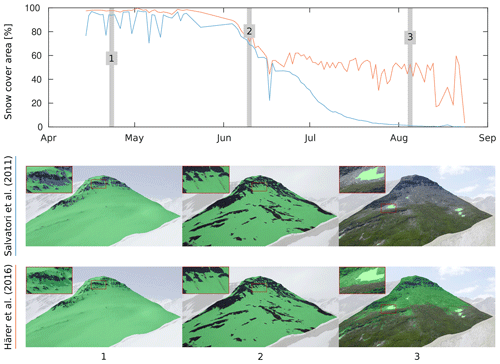

Our procedure facilitates snow cover analyses using public webcams, as long as location of the camera can be estimated and a mountain silhouette is visible in the image. Figure 12 reveals the percentage of snow-covered area on a mountain hill in the Furka Pass region from 14 April to 28 August 2015 and three example images with applied classifications. Webcam images containing fog or adverse cloud cover that impede the view on the terrain were manually removed before processing. The differences caused by the two classification methods are discussed in Sect. 6.

Figure 12Percentage of snow-covered area on a mountain hill in the Furka Pass region from 14 April to 28 August 2015 using the snow classification proposed by Salvatori et al. (2011) (blue line) and Härer et al. (2016) (red line). Webcam images: Blauberg Furka Pass, © Armin Rist, Institute of Geography, University of Bern.

In total, we apply image-to-DEM registration on 50 webcams. Our silhouette extraction technique successfully detects all 50 silhouettes. For five webcams, automatic image-to-DEM registration fails to find the appropriate orientation of the camera. This failure is either caused by heavy lens distortions of the camera system or due to several excerpts of similar-looking mountain silhouettes that lead to a wrong orientation estimate on scale k=0. In this section we evaluate the precision of the mapping between webcam image pixel coordinates and DEM coordinates, which we call mapping accuracy. This accuracy depends on (1) uncertainties caused by the projection of low-resolution webcam images on a high-resolution DEM (projection uncertainty) and (2) the ability of the registration approach to find the correct silhouette pair (registration accuracy).

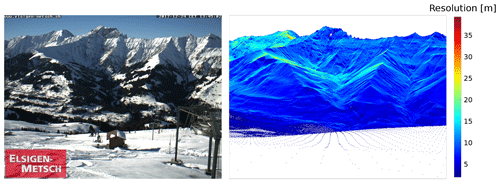

5.1 Projection uncertainty

Depending on the distance of the terrain to the webcam, the slope and aspect of the terrain, and the webcam image resolution and its FOV, an image pixel is mapped onto one or several DEM grid cells. Therefore, image pixels are either upsampled or downsampled to the DEM's pixel resolution (2 m). An approximation of the projected image pixel resolution can be calculated as the root of the number of DEM grids an image pixel is mapped on times the resolution of the DEM (i.e., 2 m), assuming that an image pixel is mapped onto a rectangular region of DEM grids. Figure 13 shows the approximated projected pixel resolution of an example webcam image at Metschalp. The webcam image has an image resolution of 640 pixels × 480 pixels and a horizontal FOV of 47∘. In general, the projected pixel resolution close to the webcam is high and decreases with increasing distance to the webcam position. Moreover, the projected pixel resolution depends on the orientation of the slope with respect to the viewing direction. It is high for slopes orthogonal to the viewing direction and low at grazing angles near silhouettes. The mean projected pixel resolution found for 45 webcams is 4.5 m with a standard deviation of 4.4 m. If only DEM grids within a distance of 20 km to the webcam are considered, the mean projected pixel resolution increases to 2.9 m with a standard deviation of 1.5 m.

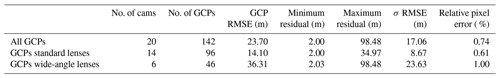

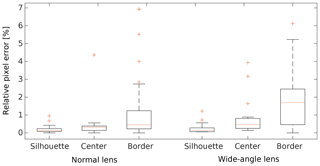

5.2 Registration accuracy

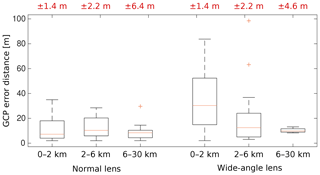

To evaluate the accuracy of our automatic image-to-DEM registration, we select 20 webcams that comprise different areal extents and lens characteristics. Depending on the presence of structural image content, we manually select 5 to 15 GCPs per webcam using the SWISSIMAGE orthophoto. For 142 GCPs in total, we compute relative pixel errors (image space distances) and the root mean square error (RMSE) of the distance between the real and projected GCPs in world coordinates (see Table 1). We differentiate between standard lens webcams (FOV<48∘) and wide-angle lens webcams (FOV≥48∘). The relative pixel error is calculated as the distance between the pixel coordinate of a GCP and its pixel coordinate predicted by the transformation matrix. We report this distance as percentage of image diagonal. It is a measure to calculate the accuracy of our automatic image-to-DEM registration. Results show that the relative pixel error is higher for GCPs of wide-angle webcams than for GCPs of standard lens webcams (1 % and 0.61 %, respectively). This difference is mainly caused by lens distortions, which increase with a larger FOV and therewith lead to a discrepancy of the silhouette matching, mainly at the outer part of the images. This discrepancy is even more prominent when considering the relative pixel error by comparing GCPs at the mountain silhouette, GCPs that are close to the image border (the outer 25 % of the total image width/height), and the remaining GCPs in the center region of the image (see Fig. 14). GCPs at the silhouette indicate how well the image-to-DEM registration matches the two silhouettes. The further away GCPs are from the silhouette and the central part of the image, the more they are affected by the camera model used for image-to-DEM registration. Therefore, GCPs close to the image border are affected the most by effects of lens distortions. The relative pixel error is notably higher for GCPs at the border of the images than the remaining GCPs, especially for wide-angle lens webcams. Not surprisingly, smallest errors are found for GCPs located at the mountain silhouette, since this silhouette is used for image-to-DEM registration.

Table 1Projection error of ground control points (GCPs) in standard lens (FOV<48∘) and wide-angle lens (FOV≥48∘) webcam images.

Figure 14Relative pixel error of ground control points (GCPs) of standard and wide-angle lens webcams. Results are grouped in GCPs located at the mountain silhouette, the center region of the image, and the border region of the image (the outer 25 % of the total image width and height).

The root mean square error (RMSE) of the distance between the real and projected GCPs in world coordinates is shown in Table 1. We find again a significant difference in the registration accuracy between webcams equipped with standard lenses and wide-angle lenses. Registration accuracy reveals an overall RMSE of 23.7 m, with a RMSE of 14.1 m for standard lens webcams and 36.3 m for wide-angle lens webcams. We calculate the GCP error distance in world coordinates by projecting the registered pixels onto a map using the transformation matrix. In Fig. 15, box plots of the error distances between the real and projected GCPs are shown for standard and wide-angle lens webcams. Results are grouped into three categories of GCPs within 0–2, 2–6, and 6–30 km distance to the webcam. Since it is more difficult to set GCPs in low-resolution webcam images, we use large structural features such as mountain peaks to set GCPs far away from the webcams. This ensures that we can select the appropriate pixel where the given GCP is actually located. We use the transformation matrix to project this pixel to world coordinates, and, thus, we assume that this GCP is located in the center of the pixel. However, we have to take into account that this is not necessarily the real position of the GCP within the image pixel. As shown in Sect. 5.1, pixel values are mapped onto a certain area on a map. Therefore, we calculate the projection uncertainty of a GCP as ± the radius of the bounding volume of the DEM grids where the selected image pixel is projected on. We use the median to quantify the projection uncertainty of a group of GCPs. Median projection uncertainties are shown as red numbers on top of Fig. 15. It can be clearly seen that the largest error distances are caused by GCPs of wide-angle lens webcams that are located close to the webcam (0–2 km) and that the errors are generally lower further away from the webcams. For standard lens webcams, there is no considerable difference in the error distance between GPCs within 0–2, 2–6, and 6–30 km distance to the webcam. Even though projection uncertainties are higher for GCPs located further away from the webcams, for both standard lens and wide-angle lens webcams the mapping accuracy of GCPs that are more than 6 km away from the webcam (mean error distances of 8.6 and 10.2 m with uncertainties of ±6.4 and ±4.6 m, respectively) is comparable to the mapping accuracy found for GCPs within 0–6 km distance of normal lens webcams and GCPs within 2–6 km distance of wide-angle lens webcams.

The performance of our automatic image-to-DEM registration procedure is promising. With marginal manual user input, we transform a webcam image into a georeferenced map. With an overall RMSE of about 23.7 m, our method is precise enough to validate or complement satellite-derived snow cover maps and offers snow cover analyses with a high spatiotemporal resolution over a large area. However, projection uncertainties have to be taken into account as well since they may highly differ depending on the selected webcam. We expect a lower performance of our image-to-DEM registration compared to approaches where camera parameters are available or GCPs are used to align an image to a DEM. However, having access to intrinsic and extrinsic camera parameters, or measuring these parameters using GCPs, is infeasible for a reasonably large-scale camera network. The large differences of RMSE between standard lens webcams and wide-angle lens webcams suggest a further improvement of our camera model to account for lens distortions. Given the large amount of webcams, we can also exclude webcams equipped with wide-angle lenses from analyses to notably reduce mapping errors (RMSE of 14.1 m found for 14 webcams equipped with standard lenses; see Table 1). Another solution is to use only the central part of an image if the FOV of the webcam is higher than a certain threshold.

Our method relies on a precise estimation of the webcam location. Especially when a decreasing slope is visible in the near field of the webcam, significant mapping errors may occur. For example, a lower estimate of the installation height may cause a pixel in 10 m distance to be mapped onto the counter slope 2 km away. Therefore, we recommend masking out regions that are on the same slope as the webcam itself or areas close to edges with huge depth differences. Since we did not measure the ground truth location of the selected webcams, a direct evaluation of the estimated location accuracy is not possible. However, we roughly estimate an accuracy of about 5 m by leveraging the orthophoto SWISSIMAGE and prior knowledge about the approximate webcam location (for instance, mounted on a specific wall of a building).

In general, we propose masking out regions that are close to the webcam to avoid large mapping errors as shown in Fig. 15 for webcams with wide-angle lenses. These large mapping errors may be caused by an imprecise location estimation. However, this effect was not observed for standard lens webcams. Hence, the large mapping errors close to the webcam can be attributed to the fact that close GCPs are generally more often located at the outer part of the image where lens distortions increase. In addition, areas closer to a webcam may generally have a larger mapping error as only the mountain silhouette is used for the image-to-DEM registration. Therefore, these areas are additionally affected by the selected camera model used for image-to-DEM registration. Additionally, we propose excluding regions that are far away from the webcam (i.e., >15 or 20 km) to avoid large projection uncertainties and to ensure a high spatial resolution. Moreover, it has to be taken into account that projection uncertainties may strongly increase if the slope and aspect of the DEM grid with respect to the viewing direction is high. For single image pixels, projection uncertainty can be extremely high if the pixel is mapped onto several nonadjacent DEM grids (e.g., if a pixel is projected onto DEM grids on a hill or peak as well as on the DEM grids behind the hill on the counter slope).

For most webcams, an intentional, significant change in their orientation occurs only occasionally, and, therefore, a landscape can be analyzed over a long time period in the case of an available image archive. Our image-to-image alignment enables us to precisely correct small changes in orientation of webcam images and works generally well for images with similar image content. Alignment artifacts from, e.g., logos in the image are eliminated by using RANSAC. Since some errors may occur if the image content differs too much, we propose not to align snowy winter images to snow-free image scenes and vice versa.

The snow classification method proposed by Salvatori et al. (2011) has been frequently used and discussed in recent studies. Many of these studies emphasize the problem of misclassification due to snow in shadowing regions (e.g., Härer et al., 2016; Arslan et al., 2017; Salzano et al., 2019). We have observed the same issue, especially for winter scenes with a low solar zenith angle. The comparison with the snow classification method proposed by Härer et al. (2016) reveals a similar pattern for all the processed webcams: the method by Salvatori et al. (2011) underestimates snow cover, mainly in shadowing areas (see Fig. 12 for an example). For snowy winter scenes, the PCA method by Härer et al. (2016) performs very well and is able to correctly classify snow cover in shadowing areas. However, once less than about 50 % of snow is present in an image, the method overestimates snow cover and classifies rock, trees, or grass as snow (see Fig. 12). This is often observed when no shaded snow cover is present or in the case of strong illumination conditions. As shadows from structural terrain decrease in spring, the method of Salvatori et al. (2011) often only weakly underestimates the snow cover. For rare cases of very low illumination conditions, both methods fail to correctly classify snow. In our framework, we use a combination of both methods to get the best possible snow classification result. However, there is a need for an improved snow classification method. This method should be able to classify snow under varying illumination conditions and ideally can distinguish between snow and clouds or fog.

The differentiation between snow, clouds, and fog currently remains an unsolved problem for RGB images. Even though webcams are often located below the cloud cover, low clouds and fog in front of the landscape are manually removed to not falsify snow classification. Images containing fog and clouds on a substantial part of the image could be automatically removed by comparing the edges of a cloud-free image with edges of a potentially cloud covered image. However, clouds and fog that impede the view on a smaller part of the landscape are difficult to distinguish from snow. A possible method to remove such cloud cover is, for example, to aggregate all the images collected by a webcam in a day as proposed by Fedorov et al. (2016). However, the aggregated images may loose contrast and contain mixed pixel information, which in turn will affect snow classification. Moreover, long-lasting cloudy conditions may remain undetected by this approach, and the aggregation will lower the temporal resolution. Therefore, we consider investigating cloud and fog detection in webcam images for future work.

Since our image-to-DEM registration requires a visible mountain silhouette, it is not suited for webcams that observe flat areas. Moreover, there are geographical limitations since webcams might not be installed in very remote areas. Generally, a large-scale coverage of a region might be only possible in countries with a well-developed infrastructure. Nevertheless, the high number of freely available webcams worldwide combined with our semiautomatic procedure offers a unique potential to complement and evaluate satellite-derived snow cover information. For example, our webcam snow cover maps may facilitate the gap-filling of partly cloud obscured satellite-based snow cover maps or improve snow classification in steep terrain or shadow-affected image scenes.

We present a semiautomatic procedure to derive snow cover maps from freely available webcam images in the Swiss Alps. Our registration approach automatically estimates webcams' parameters, which allows us to relate pixels of a webcam image to their real-world coordinates. Additionally, we use a method for automatic image-to-image alignment and compare two recent snow classification methods. A detailed evaluation of the automatic georectification is carried out and reveals a RMSE of 23.7 m, with a RMSE of 14.1 m for webcams equipped with standard lenses and 36.3 m for webcams equipped with wide-angle lenses. To the best of our knowledge, no other method is able to offer this accuracy on such a high spatiotemporal resolution over a large area. Large accuracy differences between standard lens webcams and webcams equipped with wide-angle lenses suggest the improvement of our camera model to incorporate effects of lens distortions or the use of only the central part of an image to generate more accurate snow cover maps. However, an improvement of RGB snow classification is essential to automatically derive snow cover maps, i.e., to avoid the manual removal of cloudy scenes. Nevertheless, our approach offers snow cover analyses with a high spatiotemporal resolution over a large area with a minimum of manual user input. Our webcam-based snow cover monitoring network could not only serve as a reference for improved validation of satellite-based approaches, but also complement satellite-based snow cover retrieval. As an example, webcam-based snow cover information could be used to improve gap-filling methods to eliminate cloud cover in satellite-based snow cover products. Especially in spring during the snowmelt period, webcams could help to detect snow that may fall and melt within several days during cloudy conditions. In addition, our webcam-based snow cover product can be used to validate Sentinel-2 and Landsat-based snow cover products. We are therefore planning to extend our webcam archive with additional webcams located in the European Alps. Finally, our procedure, in particular the snow/cloud classification, could be improved to enable semioperational processing for a near-real-time service, which could support federal agencies (e.g., MeteoSwiss, WSL-SLF) for their weather forecast activities or avalanche warning.

We downloaded webcam images from http://www.kaikowetter.ch/ (last access: 22 April 2020; Kaikowetter, 2020). Due to licensing reasons, we cannot provide our webcam data set. The swissALTI3D digital elevation model and SWISSIMAGE orthophoto are subject to copyright restrictions and are provided by swisstopo (http://www.swisstopo.ch, last access: 22 April 2020; swisstopo, 2020).

CP developed the code and performed the data analysis with advice from FH and SW. SH provided the MATLAB code of the software PRACTISE. CP wrote the paper with contributions from all co-authors.

The authors declare that they have no conflict of interest.

The digital elevation model swissALTI3D and the orthophoto SWISSIMAGE were obtained from the Federal Office of Topography (swisstopo). The authors acknowledge Kai Kobler for providing updated webcam images on http://www.kaikowetter.ch/ (last access: 22 April 2020) and all webcam owners that provide their images online, in particular Armin Rist and Sara Fischer. Further, we gratefully acknowledge Simon Gascoin and Tiziano Portenier for their constructive comments on the paper.

This paper was edited by Ketil Isaksen and reviewed by Yves Bühler and A. N. Arslan.

Arslan, A. N., Tanis, C. M., Metsämäki, S., Aurela, M., Böttcher, K., Linkosalmi, M., and Peltoniemi, M.: Automated Webcam Monitoring of Fractional Snow Cover in Northern Boreal Conditions, Geosciences, 7, 55, https://doi.org/10.3390/geosciences7030055, 2017. a, b, c, d

Baboud, L., Čadík, M., Eisemann, E., and Seidel, H.: Automatic photo-to-terrain alignment for the annotation of mountain pictures, in: CVPR 2011, 41–48, https://doi.org/10.1109/CVPR.2011.5995727, 2011. a

Bühler, Y., Adams, M. S., Bösch, R., and Stoffel, A.: Mapping snow depth in alpine terrain with unmanned aerial systems (UASs): potential and limitations, The Cryosphere, 10, 1075–1088, https://doi.org/10.5194/tc-10-1075-2016, 2016. a

Corripio, J. G.: Snow surface albedo estimation using terrestrial photography, Int. J. Remote Sens., 25, 5705–5729, https://doi.org/10.1080/01431160410001709002, 2004. a

De Michele, C., Avanzi, F., Passoni, D., Barzaghi, R., Pinto, L., Dosso, P., Ghezzi, A., Gianatti, R., and Della Vedova, G.: Using a fixed-wing UAS to map snow depth distribution: an evaluation at peak accumulation, The Cryosphere, 10, 511–522, https://doi.org/10.5194/tc-10-511-2016, 2016. a

Dizerens, C.: Georectification and snow classification of webcam images: potential for complementing satellite-derrived snow maps over Switzerland, Master's thesis, Faculty of Science, University of Bern, Switzerland, 2015. a

Dumont, M. and Gascoin, S.: 4 – Optical Remote Sensing of Snow Cover, in: Land Surface Remote Sensing in Continental Hydrology, edited by: Baghdadi, N. and Zribi, M., 115–137, Elsevier, https://doi.org/10.1016/B978-1-78548-104-8.50004-8, 2016. a

Dumont, M., Sirguey, P., Arnaud, Y., and Six, D.: Monitoring spatial and temporal variations of surface albedo on Saint Sorlin Glacier (French Alps) using terrestrial photography, The Cryosphere, 5, 759–771, https://doi.org/10.5194/tc-5-759-2011, 2011. a

Farinotti, D., Magnusson, J., Huss, M., and Bauder, A.: Snow accumulation distribution inferred from time-lapse photography and simple modelling, Hydrol. Process., 24, 2087–2097, https://doi.org/10.1002/hyp.7629, 2010. a, b

Fedorov, R., Camerada, A., Fraternali, P., and Tagliasacchi, M.: Estimating Snow Cover From Publicly Available Images, IEEE T. Multimedia, 18, 1187–1200, https://doi.org/10.1109/TMM.2016.2535356, 2016. a, b, c, d, e, f

Fischler, M. A. and Bolles, R. C.: Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography, Commun. ACM, 24, 381–395, https://doi.org/10.1145/358669.358692, 1981. a

Floyd, W. and Weiler, M.: Measuring snow accumulation and ablation dynamics during rain-on-snow events: innovative measurement techniques, Hydrol. Process., 22, 4805–4812, https://doi.org/10.1002/hyp.7142, 2008. a

Foppa, N. and Seiz, G.: Inter-annual variations of snow days over Switzerland from 2000–2010 derived from MODIS satellite data, The Cryosphere, 6, 331–342, https://doi.org/10.5194/tc-6-331-2012, 2012. a

Härer, S., Bernhardt, M., Corripio, J. G., and Schulz, K.: PRACTISE – Photo Rectification And ClassificaTIon SoftwarE (V.1.0), Geosci. Model Dev., 6, 837–848, https://doi.org/10.5194/gmd-6-837-2013, 2013. a, b

Härer, S., Bernhardt, M., and Schulz, K.: PRACTISE – Photo Rectification And ClassificaTIon SoftwarE (V.2.1), Geosci. Model Dev., 9, 307–321, https://doi.org/10.5194/gmd-9-307-2016, 2016. a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s

Hüsler, F., Jonas, T., Wunderle, S., and Albrecht, S.: Validation of a modified snow cover retrieval algorithm from historical 1-km {AVHRR} data over the European Alps, Remote Sens. Environ., 121, 497–515, https://doi.org/10.1016/j.rse.2012.02.018, 2012. a

Huss, M., Sold, L., Hoelzle, M., Stokvis, M., Salzmann, N., Farinotti, D., and Zemp, M.: Towards remote monitoring of sub-seasonal glacier mass balance, Ann. Glaciol., 54, 75–83, https://doi.org/10.3189/2013AoG63A427, 2013. a

Jonas, T., Marty, C., and Magnusson, J.: Estimating the snow water equivalent from snow depth measurements in the Swiss Alps, J. Hydrol., 378, 161–167, https://doi.org/10.1016/j.jhydrol.2009.09.021, 2009. a

Kaikowetter: Information Wetterstation von Kai Kobler, Kaiko's Wetterpage, available at: http://www.kaikowetter.ch/, last access: 22 April 2020. a

Klein, G., Vitasse, Y., Rixen, C., Marty, C., and Rebetez, M.: Shorter snow cover duration since 1970 in the Swiss Alps due to earlier snowmelt more than to later snow onset, Clim. Change, 139, 637–649, https://doi.org/10.1007/s10584-016-1806-y, 2016. a

Laternser, M. and Schneebeli, M.: Long-term snow climate trends of the Swiss Alps (1931–99), Int. J. Climatol., 23, 733–750, https://doi.org/10.1002/joc.912, 2003. a

Liu, J.-F., Chen, R.-S., and Wang, G.: Snowline and snow cover monitoring at high spatial resolution in a mountainous river basin based on a time-lapse camera at a daily scale, J. Mt. Sci., 12, 60–69, https://doi.org/10.1007/s11629-013-2842-y, 2015. a, b

Lowe, D. G.: Distinctive Image Features from Scale-Invariant Keypoints, Int. J. Comput. Vis., 60, 91–110, https://doi.org/10.1023/B:VISI.0000029664.99615.94, 2004. a, b

Manninen, T. and Jääskeläinen, E.: The Effect of Boreal Forest Canopy on Snow Covered Terrain Broadband Albedo, Geophysica, 53, 9–29, 2018. a

Marty, C.: Regime shift of snow days in Switzerland, Geophys. Res. Lett., 35, 12, https://doi.org/10.1029/2008GL033998, 2008. a

Messerli, A. and Grinsted, A.: Image georectification and feature tracking toolbox: ImGRAFT, Geosci. Instrum. Method. Data Syst., 4, 23–34, https://doi.org/10.5194/gi-4-23-2015, 2015. a

Metsämäki, S., Mattila, O.-P., Pulliainen, J., Niemi, K., Luojus, K., and Böttcher, K.: An optical reflectance model-based method for fractional snow cover mapping applicable to continental scale, Remote Sens. Environ., 123, 508–521, https://doi.org/10.1016/j.rse.2012.04.010, 2012. a

Millet, P., Huwald, H., and Weijs, S. V.: Extracting High Resolution Snow Distribution Information with Inexpensive Autonomous Cameras, in: HIC 2018. 13th Int. Conf. Hydroinformatics, edited by: Loggia, G. L., Freni, G., Puleo, V., and Marchis, M. D., Vol. 3 of EPiC Series in Engineering, 1397–1405, EasyChair, https://doi.org/10.29007/93gh, 2018. a, b

Piazzi, G., Tanis, C. M., Kuter, S., Simsek, B., Puca, S., Toniazzo, A., Takala, M., Akyürek, Z., Gabellani, S., and Arslan, A. N.: Cross-Country Assessment of H-SAF Snow Products by Sentinel-2 Imagery Validated against In-Situ Observations and Webcam Photography, Geosciences, 9, 3, https://doi.org/10.3390/geosciences9030129, 2019. a

Pimentel, R., Pérez-Palazón, M. J., Herrero, J., and Polo, M. J.: Monitoring Snow Cover Area In Semiarid Regions Using Terrestrial Photography, Int. Conf. Hydroinformatics, Paper 378, 2014. a, b

Revuelto, J., Jonas, T., and López-Moreno, J.-I.: Backward snow depth reconstruction at high spatial resolution based on time-lapse photography, Hydrol. Process., 30, 2976–2990, https://doi.org/10.1002/hyp.10823, 2016. a

Rüfenacht, D., Brown, M., Beutel, J., and Süsstrunk, S.: Temporally consistent snow cover estimation from noisy, irregularly sampled measurements, in: 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Vol. 2, 275–283, available at: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7294942&isnumber=7294881 (last access: 22 April 2020), 2014. a, b

Salvatori, R., Plini, P., Giusto, M., Valt, M., Salzano, R., Montagnoli, M., Cagnati, A., Crepaz, G., and Sigismondi, D.: Snow cover monitoring with images from digital camera systems, Ital. J. Remote Sens., 43, 137–145, https://doi.org/10.5721/ItJRS201143211, 2011. a, b, c, d, e, f, g, h, i, j, k, l, m, n

Salzano, R., Salvatori, R., Valt, M., Giuliani, G., Chatenoux, B., and Ioppi, L.: Automated Classification of Terrestrial Images: The Contribution to the Remote Sensing of Snow Cover, Geosciences, 9, 2, https://doi.org/10.3390/geosciences9020097, 2019. a

Schmidt, S., Weber, B., and Winiger, M.: Analyses of seasonal snow disappearance in an alpine valley from micro- to meso-scale (Loetschental, Switzerland), Hydrol. Process., 23, 1041–1051, https://doi.org/10.1002/hyp.7205, 2009. a, b

swisstopo: swissALTI3D, The high precision digital elevation model of Switzerland, available at: https://shop.swisstopo.admin.ch/en/products/height_models/alti3D (last access: 27 March 2019), 2013a. a

swisstopo: SWISSIMAGE, The Digital Color Orthophotomosaic of Switzerland, available at: https://shop.swisstopo.admin.ch/en/products/images/ortho_images/SWISSIMAGE (last access: 27 March 2019), 2013b. a

swisstopo: Federal Office of Topography swisstopo, available at: http://www.swisstopo.ch, last access: 22 April 2020. a

Vedaldi, A. and Fulkerson, B.: Vlfeat: An Open and Portable Library of Computer Vision Algorithms, in: Proc. 18th ACM Int. Conf. Multimed., 1469–1472, ACM, New York, NY, USA, https://doi.org/10.1145/1873951.1874249, 2010. a

Wunderle, S., Gross, T., and Hüsler, F.: Snow Extent Variability in Lesotho Derived from MODIS Data (2000–2014), Remote Sens., 8, 6, https://doi.org/10.3390/rs8060448, 2016. a